6 Of The Worst Mistakes Google's New AI Overview Has Made So Far

In May 2024, Google held its latest Google I/O conference, which opened with a keynote that focused heavily on developments related to the company's AI model, Gemini. One of the tools highlighted was AI Overview for Google Search, which launched immediately. AI Overview seems intended as a cross between Microsoft's Copilot (generative AI that tries to be diligent about summarizing linked citations) and the existing info box that already excerpts information from a top search result. At the conference itself, some of the more esoteric AI tools, like searching with a user-generated video, got the most attention. In the days that followed, though, AI Overview, being immediately available, drew the lion's share of attention, and not for good reasons.

It quickly became clear that AI Overview was not ready for prime time, as the AI routinely hallucinated answers, some of them dangerous. The bad answers ran the gamut, from inaccurate political information that fuels conspiracy theories to dangerous health advice. It frequently treated obvious jokes as legitimate factual information and, for some reason, relied far too heavily on random Reddit comments. "The examples we've seen are generally very uncommon queries and aren't representative of most people's experiences," a Google spokesperson told Ars Technica. "The vast majority of AI Overviews provide high quality information, with links to dig deeper on the web." That sounds great in theory, but in practice AI Overview has become yet another AI horror story. Let's look at some of the worst examples so far.

Suggesting using glue to prevent cheese from sliding off pizza

One of the most infamous creations of Google's AI Overview so far is a case where it mined social media for a search summary and could not tell humor from genuine advice. In an example that first went viral via a May 2024 post by @heavenrend on X, the app formerly known as Twitter, AI Overview took a query for "cheese not sticking to pizza" and returned a summary suggesting non-toxic glue to keep the cheese from sliding. The source? An obvious joke comment on Reddit from 2013.

"To get the cheese to stick I recommend mixing about 1/8 cup of Elmer's glue in with the sauce," reads the comment. "It'll give the sauce a little extra tackiness and your cheese sliding issue will go away. It'll also add a little unique flavor. I like Elmer's school glue, but any glue will work as long as it's non-toxic." Google has a licensing deal with Reddit for AI training, so it should come as no surprise that Reddit comments are being mined for an advice-style search like this. The problem lies in Gemini's complete inability to discern context.

Meanwhile, at Business Insider, veteran tech reporter Katie Notopoulos decided to throw caution to the wind and see how the joke recipe would actually turn out. After admitting that she ate paste as a child, Notopoulos said that the pizza wasn't that bad, and the cheese didn't slide! So ... yay?

Boosting racist conspiracy theories about Barack Obama

AI Overview also claimed that Barack Obama, a Christian, was the first Muslim President of the United States. This was called out by several X users, including a former Obama staffer. The idea that Obama is a Muslim (which should not be a problem regardless) has been used as a smear against him since his first presidential campaign in 2008.

"This is obviously a systematic political strategy by somebody because these e-mails don't just keep coming out the way they have without somebody being behind it," explained Obama in a January 2008 Christian Broadcasting Network interview. "I want to make sure that your viewers understand that I am a Christian who has belonged to the same church for almost 20 years now. It's where Michelle and I got married. It's where our kids were dedicated. I took my oath of office on my family Bible."

What makes this mishap particularly strange is that the AI wasn't pulling from racist conspiracy theory blogs. Instead, according to the listed citations, it concluded Obama was a Muslim based on two very unlikely sources: the abstract of a chapter titled "Barack Hussein Obama: America's First Muslim President?" in a book from Oxford University Press, and a Pew Research article about the religious affiliations of American Presidents, which doesn't even mention Islam. The latter notes that Obama is not a member of any specific Christian denomination, which the AI seemingly took as confirmation that he's a Muslim.

Giving terrible password security advice

Unlike other widely publicized AI Overview issues, this one is, as of the time of writing, still live on Alphabet's search engine. Here, the AI pieces together the wrong details about how to come up with a secure but easy-to-remember password, giving bad advice that could easily compromise your security. Searching "how to remember your password" on Google returns suggestions that include such terrible advice as "Use personal details."

"Try using your name, birthdays, or the names of friends and family," read the tips that popped up. "You can also try using the last four digits of someone's phone number as their password." The linked sources help explain how this happened. Two are YouTube videos from self-professed memory experts, not cybersecurity experts: Jim Kwik and Ron White of "Brain Games" fame. Both explicitly suggest using a friend or family member's phone number to help remember passwords.

That explains part of the response, but not all of it. Another cited source is a page from password manager NordPass about recalling forgotten passwords. It explicitly advises against using personal names in passwords, but offers tips on using personal information to recall older passwords. Once again, Gemini fails to understand context, misreading NordPass's memory-jogging guidance as a recommendation for creating secure passwords.

Seriously endorsing The Onion's advice to eat rocks

This one takes missing the context around jokes to a new level. One would think that Gemini, as flawed as it is, has some way of recognizing that The Onion is satire, not a legitimate news outlet. That appears not to be the case. Ben Collins, the former NBC News reporter turned Onion CEO, pointed out on X that if you Googled "how many rocks should I eat each day," AI Overview presented the text of a satirical Onion article about the benefits of eating rocks as serious advice.

Based on the listed citations, this seems to have happened in part because ResFrac, a legitimate fracking company, posted an excerpt from The Onion's article on its blog. Appearing on a website with some degree of geological credibility, with little added context, apparently elevated the article to a legitimate source in the AI's eyes. "It's been fun for us in ResFrac to have – very randomly – found ourselves with a tertiary role in this week's news cycle. It's an interesting case study in the training of large language models – that they can be confused by satire," reads an update to the blog post. The other citations visible in Collins' screenshot don't show enough detail to determine exactly how they factored in.

Giving the University of Wisconsin one heck of a pedigree

Another widely mocked error came in response to "how many presidents graduated from university of wisonsin," as shown in a screenshot posted by Threads user @egaal. Google's AI Overview claimed that various American presidents had graduated from the University of Wisconsin, with John Adams especially scholarly, having graduated an astonishing 21 times. It gave similar inaccuracies for other presidents, though none as prolific as Adams.

The citation shows that Gemini completely misunderstood a page on the university's alumni association website that listed all known UW graduates who shared names with past American presidents. "It's a big deal when a U.S. president — sitting or otherwise — is on campus," reads the start of the blog post. "But did you know that 13 U.S. presidents attended UW–Madison, for a total of 59 degrees? Well, sort of ... Here are the graduation years of alumni with presidential names." The list shows a whopping 24 instances of a John Adams earning a degree from UW, across 21 different classes, by far the most of any presidential name. Andrew Johnson followed with 14. Again, Gemini just isn't good at understanding context.

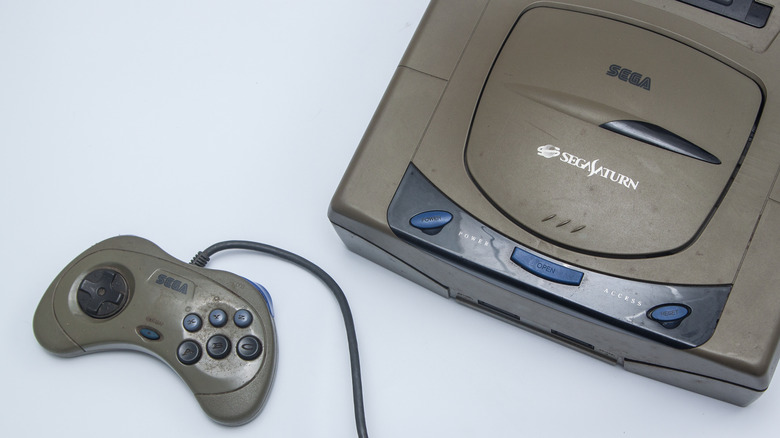

Being very confused by the fifth generation of video game consoles

In an example of inaccurate information on an easily fact-checked topic, video game console release dates, Ars Technica noted in its roundup of AI Overview issues that it could replicate a Google answer stating, "Many video game consoles were sold in 1993, including the Atari Jaguar, Sega Saturn, and PlayStation." In reality, only the Atari Jaguar was released in 1993; the Sega Saturn and PlayStation came out the following year.

More interestingly, in the first of the two screenshots Ars Technica published, AI Overview says, "The Nintendo 64 was the only fifth generation console to use cartridges, as well as the only one to offer 64-bit graphics." In the second screenshot, it states, "The Atari Jaguar was released in November 1993, and was the first 64-bit video game console." Gemini, it seems, is not only wrong but contradicting itself.