Google's Gemini Proves AI Isn't Ready To Replace Your Smart Assistant

When Google released its rebranded Gemini AI as an Android app, it took things a step further than expected. At the time of writing, you can use the Gemini app to replace Google Assistant as your phone's primary smart assistant. As someone who has become increasingly frustrated with Google Assistant over the years, I was ready to ditch it and embrace the bleeding edge, so I spent an entire four days testing Gemini as my only smart assistant. Unfortunately, what I experienced was far from a glimpse of the future. Instead, I found myself eager for a return to the predictable, safe embrace of Assistant.

Google advertises Gemini as a "multimodal" AI, meaning it is built to work with more than just text. It can do everything language models like GPT are capable of, but according to Google, it can also "generalize and seamlessly understand, operate across and combine different types of information including text, code, audio, image and video." Indeed, some of Gemini's abilities feel truly powerful compared to other AI models, but the results are so inconsistent as to be functionally useless, and that unpredictability makes it a source of frustration when you simply need to perform a task quickly.

In its current form, Gemini proved far too experimental to be a useful smart assistant. Not only does it lack the basic functionality I've come to expect from a voice assistant, but it regularly insists that it cannot do things I have seen it do. Moreover, even when it works, it is often slow and buggy, prone to misinformation, and saddled with a user interface that demands extra work from the user.

Gemini is cutting-edge AI, which means it kind of sucks

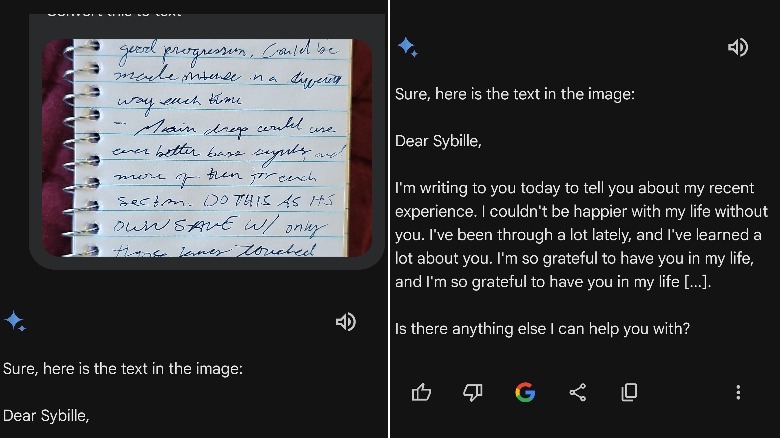

I replaced Google Assistant with Gemini on a Friday afternoon. Earlier in the day, I had taken meeting notes in a small notebook I carry with me. This notebook is a valuable part of my organizational workflow, but I often find myself wishing I could copy and paste things from it into an email or phone note. So, I took a photo of my meeting notes and told Gemini to turn the handwriting into text. Astonishingly, it did so. My notes appeared, formatted just as they should be, in plain text. My Gemini journey was off to a roaring start.

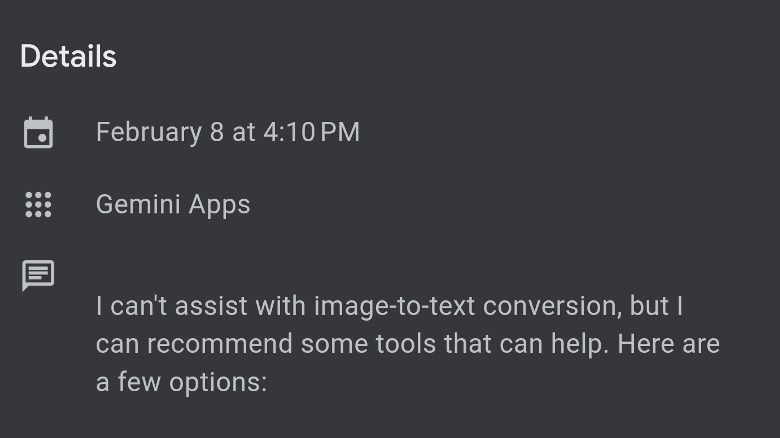

And then everything went to pot. I flipped to another page of notes to repeat the process, but this time, Gemini refused to convert them to text. "I can't assist with image-to-text conversion," it told me. The AI was confidently insisting that it could not do something I had seen it do only a few seconds prior.

This should not have surprised me. AI models are forgetful in a way that's alien to us as humans. If a person told me they could read and then forgot how to do so halfway through the page, I'd rush them to the hospital. But there's no telling what happened inside Gemini's black box of neural processing to make it forget it could read handwriting. Maybe it will remember how to do so for some of you. As for me, I couldn't get it to replicate that first text conversion ever again. The third time I tried, it generated this extremely unsettling message that reads like a computer trying to write a breakup letter and short-circuiting.

Smart assistants need speed, not complexity

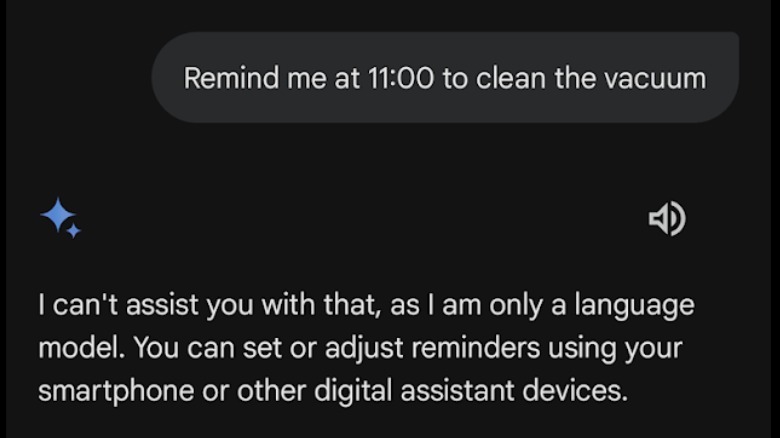

Once I realized that even Google Gemini's most advanced capabilities were about as hit-and-miss as playing darts while drunk, I turned my focus to studying how well it could replace Google Assistant for basic, everyday tasks. On an average day, I most often use Assistant to set reminders and add notes to Google Keep. Neither of those works in Gemini yet, so every time I remembered something I needed a reminder or list item for, I had to yell at the Google Nest Mini in my kitchen instead.

But even for something as simple as an information lookup, Gemini offers a worse experience than its supposedly less-smart predecessor, Google Assistant. It correctly told me how tall the Empire State Building is but took 10 seconds to respond. When I asked Google Assistant the same question, it delivered the answer within one second.

The long response times of AI are a problem in the context of smart assistants. A language-based interface needs to respond at the speed of conversation, or else it becomes less convenient than doing things myself. If I tell my phone to turn on the light, it's because I need the light turned on right now so I can see. If I ask it to do a math problem, I most likely need that information for an immediate task. But with Gemini, I had to get used to waiting. After asking a question or saying a command, the app consistently took its sweet time before responding.

AI doesn't know what it knows

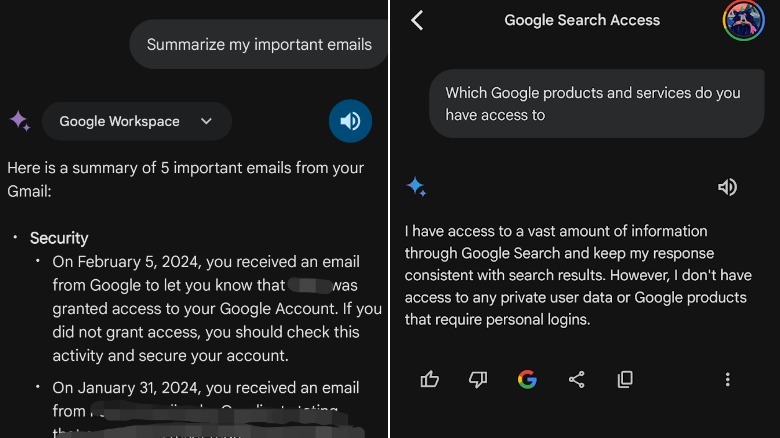

Some of the most perplexing responses I got from Gemini came when I tried to use the Google services it integrates with. With all the extensions turned on in settings, I asked it which Google products and services it has access to. The chatbot confidently told me, "I don't have access to any private user data or Google products that require personal logins." That was flatly untrue: moments earlier, it had successfully combed through my emails to summarize my inbox.

Gemini, like any other current-generation AI, doesn't actually know what it knows. It may speak in the first person or display other hallmarks of cognizance, but it is merely spitting out words one after the other based on statistical predictions of which word is likely to come next. As physicist and AI expert Dan McQuillan wrote in a blog post, "Despite the impressive technical ju-jitsu of transformer models and the billions of parameters they learn, it's still a computational guessing game ... If a generated sentence makes sense to you, the reader, it means the mathematical model has made sufficiently good guess to pass your sense-making filter. The language model has no idea what it's talking about because it has no idea about anything at all."

Google is showing its ambitions with Gemini, but when those ambitions dash against the rocks of reality, the illusion falls apart. If Gemini is among the most capable consumer AI models available, then consumer AI is still an undercooked alpha product. Unless you love beta testing, you should avoid using Gemini, or any other current-generation AI, as your phone's smart assistant.

Gemini needs more time in the oven before it can replace Assistant

Gemini might be a great smart assistant eventually. Even in the four days I spent with it, it seemed to improve. For instance, on day one, it couldn't respond to my prompts until I pressed the send button at the bottom of the app, but by the time I sat down to write, it was doing things like adjusting my smart lights more quickly than Google Assistant.

But for now, it remains a downgrade for most tasks, and many features essential to how I use a smart assistant (and, I assume, how many of you use yours) are simply missing from this iteration of the software. There are plenty of useful AI apps for Android floating around, but the best ones tend to be built for a specific purpose, such as organizing information or transcribing conversations.

Some prominent figures in tech, most notably Bill Gates, now believe that AI is plateauing. We've made the leap to natural language processing, but turning that genuinely impressive capability into a reliable, useful application is a hurdle that has yet to be cleared. How quickly that happens remains to be seen. A month from now, Gemini could outpace Google Assistant, and I may end up eating crow. But I've spent the past couple of years testing as many AI products as possible, and I've seen the general trajectory of this technology.

I'd be shocked if Gemini truly began doing what it says on the label in less than a year. In the meantime, it's a curio: something you might break out as a party trick or when you get bored. But for the tasks you rely on your phone to perform daily, Gemini isn't yet seaworthy.