Everything To Know About Tesla's Dojo Supercomputer

Tesla, the world's most valuable car brand, has repeatedly stressed over the years that it is not solely a carmaker but a technology company at heart. It therefore shouldn't come as a surprise that the company built what it described as the world's fifth most powerful supercomputer in 2021. In 2023, Tesla laid the foundations of a dramatically more powerful successor called Dojo. But before digging into the details of Dojo, it's worth asking why Tesla has gone all-in on custom silicon and why it needs all that computing power.

The simplest answer is to improve its driver assistance tech stack, which includes Autopilot and the Full Self-Driving (FSD) bundle. Tesla recently started dropping conventional radar sensors in favor of relying on traditional RGB cameras, an approach that tries to mimic how an average human perceives the world around them. But training an AI isn't as simple as training a human: everything the cameras see needs to be labeled so that the car can recognize it in real time and take appropriate action. That labeling and training involves crunching video at an enormous pace, amounting to petabytes of data per day across the fleet.

Nvidia, which helped Tesla build its first supercomputer, says a fleet of just 100 cars with five cameras each will produce more than 1 million hours of video recording each year. For reference, Tesla delivered over 1.8 million cars in 2023 alone. Needless to say, the company needs something like the Dojo supercomputer to meet all of those computing demands. Beyond enhancing Autopilot and FSD, which Tesla considers critical for its future, the company also needs it for other ambitious ventures such as the Tesla Bot and for improving other aspects of its cars.
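The Nvidia figure can be sanity-checked with simple arithmetic. The Python sketch below works backward from the quoted numbers to the recording time each camera would need per day. The helper name is hypothetical, and the fleet-wide line at the end is purely illustrative: it assumes, without any claim from Tesla, that every car delivered in 2023 records at the same rate as the cars in Nvidia's example.

```python
# Back-of-the-envelope check on the Nvidia fleet estimate quoted above.
# Inputs come from the article; everything computed here is derived, not sourced.
CARS = 100
CAMERAS_PER_CAR = 5
VIDEO_HOURS_PER_YEAR = 1_000_000  # "more than 1 million hours", so a lower bound
DAYS_PER_YEAR = 365


def implied_hours_per_camera_per_day(cars, cameras_per_car, hours_per_year,
                                     days=DAYS_PER_YEAR):
    """Hypothetical helper: daily recording time per camera needed to hit the yearly total."""
    return hours_per_year / (cars * cameras_per_car) / days


per_camera = implied_hours_per_camera_per_day(CARS, CAMERAS_PER_CAR, VIDEO_HOURS_PER_YEAR)
print(f"~{per_camera:.1f} h of video per camera per day")  # ~5.5 h

# Illustrative only: assumes each of the 1.8 million cars Tesla delivered in 2023
# matched the per-car rate above, which the article does not claim.
per_car_per_year = VIDEO_HOURS_PER_YEAR / CARS  # 10,000 h per car per year
fleet_hours = 1_800_000 * per_car_per_year
print(f"~{fleet_hours:.2e} h of video per year")
```

About five and a half hours per camera per day is a realistic daily driving profile, so the estimate does not require cars to be recording around the clock.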

The core fundamentals

When Tesla started with the Dojo vision, the idea was to build a distributed, scalable computing system with higher bandwidth and lower latency, without sacrificing power efficiency or cost-effectiveness. As it embarked on the Dojo engineering journey, Tesla explored several ideas to speed up neural network processing, some of which were ultimately rejected in the late stages of development.

One of the guiding principles behind Dojo's development was scalability: as AI models grow more complex and need more resources, the Dojo infrastructure can simply be scaled up rather than swapped out for an entirely new accelerator and computing kit, the way a gamer might replace an old GPU with a new one to play a demanding next-gen game.

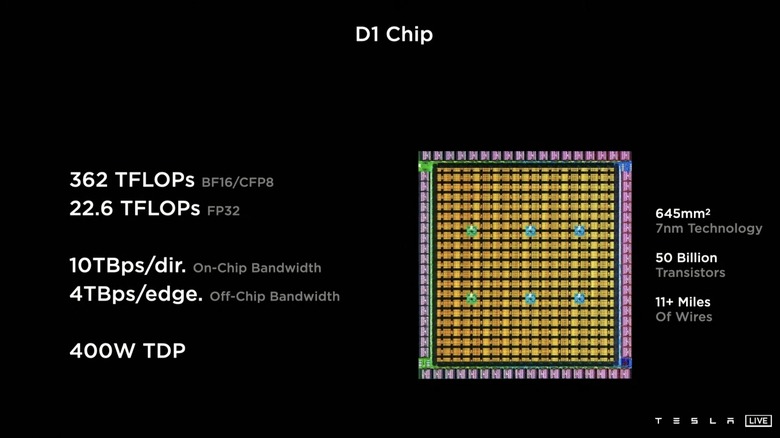

At the center of Dojo's computing superpowers is the training tile, which integrates a 5x5 array of 25 self-designed D1 chips. The D1, built on a 7-nanometer fabrication process, measures about 645 square millimeters and packs close to 50 billion transistors, allowing it to deliver 362 TFLOPS of compute power (at BF16/CFP8 precision).
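Scaling the per-die figures up to a full tile is straightforward multiplication. The Python sketch below does so under an idealized assumption, namely that all 25 dies run at their quoted peak simultaneously; real sustained throughput will be lower, and the helper name is hypothetical. It also derives the D1's transistor density from the quoted transistor count and die area.

```python
# Roll the quoted D1 figures up to one 5x5 training tile.
# Assumes ideal scaling: every die contributes its full peak throughput.
D1_PEAK_TFLOPS = 362    # per die, BF16/CFP8 peak
D1_TRANSISTORS = 50e9   # "close to 50 billion"
D1_AREA_MM2 = 645       # about 645 square millimeters
TILE_ROWS, TILE_COLS = 5, 5


def tile_peak_pflops(rows=TILE_ROWS, cols=TILE_COLS, die_tflops=D1_PEAK_TFLOPS):
    """Hypothetical helper: peak PFLOPS of a rows x cols grid of D1 dies."""
    return rows * cols * die_tflops / 1000  # 1 PFLOPS = 1,000 TFLOPS


density_m_per_mm2 = D1_TRANSISTORS / D1_AREA_MM2 / 1e6  # millions of transistors per mm^2
print(f"tile peak: {tile_peak_pflops():.2f} PFLOPS")                # 9.05 PFLOPS
print(f"D1 density: ~{density_m_per_mm2:.0f}M transistors/mm^2")   # ~78M
```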

"This chip is like a GPU-level computer with a CPU-level flexibility and twice the network chip-level I/O bandwidth," said Tesla's Dojo project lead Ganesh Venkataramanan at the AI Day event in 2021. Technically speaking, the D1 is not a single core but an array of 354 "training nodes," each of which is a superscalar core with a multi-threaded design.

Creating a scalable supercomputer

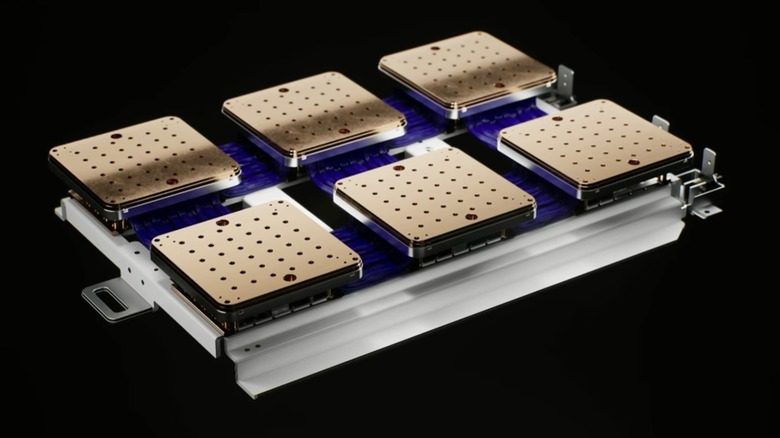

The training tile includes a custom voltage regulator module whose footprint matches that of the silicon die, delivering an impressive current density of close to 1 A per square millimeter. Tesla also had to turn to materials science to solve thermal issues on the tiles, and it even designed its own cooling cabinet for the compute units instead of buying one off the shelf, in order to hit its cooling and energy efficiency targets.

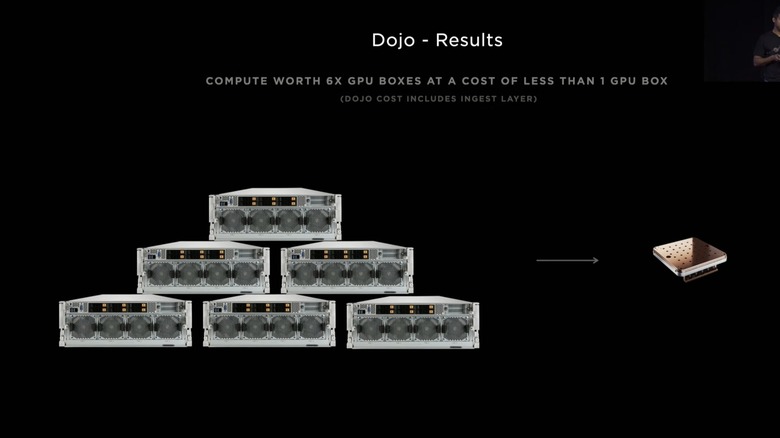

Notably, the company integrated all the mechanical, electrical, and thermal pieces into a single unit: the System Tile. The end goal of this scalable architecture, however, was the ExaPod, which is built by linking these tiles together at multiple levels. The first level is the system tray, which packs six tiles tightly together to deliver 54 PFLOPS of combined output. The next element is the pipeline that feeds data to these processing units.
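The 54 PFLOPS tray figure follows directly from the per-die numbers. This sketch multiplies six tiles of 25 D1 dies at 362 TFLOPS each and compares the result with the quoted figure. It simply adds up peak numbers and ignores interconnect and utilization losses; the function name is illustrative.

```python
# Check the quoted system-tray figure against the per-die and per-tile numbers.
D1_PEAK_TFLOPS = 362
DIES_PER_TILE = 25
TILES_PER_TRAY = 6
QUOTED_TRAY_PFLOPS = 54


def tray_peak_pflops(tiles=TILES_PER_TRAY, dies=DIES_PER_TILE, die_tflops=D1_PEAK_TFLOPS):
    """Hypothetical helper: peak tray throughput as a simple sum of die peaks."""
    return tiles * dies * die_tflops / 1000  # TFLOPS -> PFLOPS


computed = tray_peak_pflops()
print(f"computed: {computed:.1f} PFLOPS, quoted: {QUOTED_TRAY_PFLOPS} PFLOPS")
```

The computed 54.3 PFLOPS rounds to the quoted 54, which suggests the tray figure is a peak number rather than a measured one.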

To that end, Tesla created the Dojo Interface Processor, which offers 800 GB/s of memory bandwidth and runs a custom protocol that the team calls Tesla Transport Protocol (TTP). Other elements include a PCIe Gen 4 interface and an Ethernet port with a throughput of 50 GB/s. Each tile tray takes 20 of these memory cards, fitted vertically underneath, delivering 640GB of high-bandwidth DRAM.
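The tray's memory figures can be cross-checked with a couple of divisions. Splitting the 640GB across 20 cards gives the per-card capacity. The aggregate-bandwidth line rests on an assumption the article does not state outright: that the 800 GB/s figure applies to each card and that the cards' bandwidth simply adds up. Both helper names are hypothetical.

```python
# Cross-check the tray memory figures quoted above.
CARDS_PER_TRAY = 20
TRAY_DRAM_GB = 640
# Assumption: 800 GB/s is a per-card figure and bandwidth scales linearly across cards.
CARD_BANDWIDTH_GB_S = 800


def per_card_capacity_gb(total_gb=TRAY_DRAM_GB, cards=CARDS_PER_TRAY):
    """Hypothetical helper: DRAM per card if capacity is split evenly."""
    return total_gb / cards


def aggregate_bandwidth_tb_s(cards=CARDS_PER_TRAY, per_card=CARD_BANDWIDTH_GB_S):
    """Hypothetical helper: summed memory bandwidth per tray, in TB/s."""
    return cards * per_card / 1000  # GB/s -> TB/s


print(f"{per_card_capacity_gb():.0f} GB per card")                          # 32 GB
print(f"{aggregate_bandwidth_tb_s():.0f} TB/s per tray (if bandwidths add)")  # 16 TB/s
```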

For video-based training, a Dojo Host Interface connects directly to the tray via the PCIe lanes of the DRAM module. The neural network training environment runs Linux applications on the x86 architecture, effectively putting 586 cores to work on ingest processing. This entire assembly is then housed in a tower cabinet, and Tesla says each Dojo Cabinet can host two such assemblies. Notably, the Dojo Cabinet borrows its metallic, angular looks from none other than the Cybertruck.
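Because each cabinet holds two tray assemblies, the article's numbers are enough to estimate how many cabinets an exaFLOPS-scale system takes. This Python sketch assumes two full trays per cabinet at 54.3 PFLOPS each (six tiles of 25 D1 dies at 362 TFLOPS) and that peak throughput adds linearly across cabinets, which real systems never quite achieve. The helper name is hypothetical.

```python
import math

# Peak tray throughput from the D1 and tile figures: 6 tiles x 25 dies x 362 TFLOPS.
TRAY_PEAK_PFLOPS = 6 * 25 * 362 / 1000  # 54.3
TRAYS_PER_CABINET = 2                    # "each Dojo Cabinet can host two such assemblies"


def cabinets_needed(target_pflops, trays=TRAYS_PER_CABINET, tray_pflops=TRAY_PEAK_PFLOPS):
    """Hypothetical helper: fewest whole cabinets whose summed peak reaches the target."""
    cabinet_pflops = trays * tray_pflops  # about 108.6 PFLOPS per cabinet
    return math.ceil(target_pflops / cabinet_pflops)


print(cabinets_needed(1_000))    # 1 exaFLOPS = 1,000 PFLOPS -> 10
print(cabinets_needed(100_000))  # 100 exaFLOPS -> 921
```

Ten cabinets is in line with the ten-cabinet, roughly 1.1 exaFLOPS ExaPod Tesla presented at AI Day 2022, while a 100 exaFLOPS goal would, on these peak numbers alone, take on the order of 900 cabinets.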

The future outlook

The Dojo cabinets are connected seamlessly to form what Tesla calls the Dojo Accelerator, a behemoth capable of delivering ExaFLOP-class computing power. While building scalable computing hardware, Tesla's team also worked on the software to get the most out of the available compute and to optimize the training pipeline, which makes liberal use of AI models.

"We took the recently released Stable Diffusion model and got it running on Dojo in minutes," a Tesla engineer said during the Tesla AI Day 2022 presentation, adding that the entire process involved only 25 D1 dies. Toward the end of 2024, the company hopes to cross the 100 exaFLOPS compute barrier with its Dojo ExaPods.

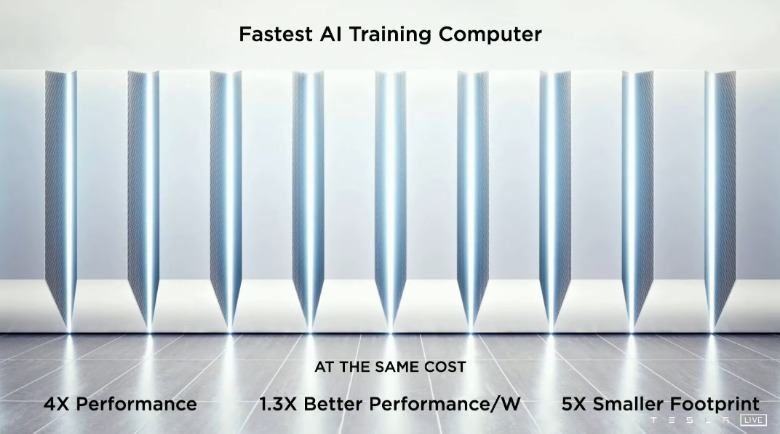

Tesla says Dojo will grow into the fastest training computer on the planet, delivering roughly four times the raw performance at the same cost in a footprint five times smaller. On the energy efficiency side, it is touted to offer 1.3x better performance per watt. In July 2023, when the supercomputer officially went into production, Musk announced that Tesla would spend a billion dollars on the Dojo project.

While the company is apparently a tad behind schedule on Dojo development at its Palo Alto unit, plans have already been inked to expand its supercomputer footprint under the Dojo branding. In January 2024, New York Governor Kathy Hochul announced that Tesla would invest half a billion dollars over the next five years to build another Dojo ExaPod at its RiverBend gigafactory in South Buffalo.

A stratospheric rise for Tesla's fortunes?

Interestingly, in June 2023, Musk replied to a user on Twitter (now X) that the Dojo supercomputer had been up and running online "for a few months." Despite the costs and risks, the mercurial Tesla CEO seems quite bullish about the whole endeavor, and investors appear to be as well. "We are scaling it up, and we have plans for Dojo 1.5, Dojo 2, Dojo 3, and whatnot," Musk said during an analyst call, as quoted by TechCrunch.

"I'd look at Dojo as kind of a long-shot bet. But if it's a long-shot bet that pays off, it will pay off in a very, very big way... in the multi-hundred-billion-dollar level," Musk said, as reported by Fortune. Wall Street analysts at Morgan Stanley predict that Dojo alone could add roughly $500 billion to Tesla's market value, factoring in the generative AI rush currently sweeping the industry. Notably, Musk has also started an AI company called xAI, which recently released its first product, Grok, an AI chatbot along the same lines as OpenAI's ChatGPT.

But the transition to Dojo is not happening anytime soon. Not long after Tesla confirmed its investment in Buffalo, Musk said on X that $500 million was definitely a big sum, but that the company is lined up to spend far more than that on Nvidia's highly sought-after H100 GPUs in 2024 alone. "The table stakes for being competitive in AI are at least several billion dollars per year at this point," he added, while also confirming that Tesla will be purchasing similar hardware from AMD. 2024 looks set to be a defining year for Dojo to flex its muscles, but more official details are yet to come.