10 Common Tech Innovations That Still Felt Like Sci-Fi In The Year 2000

Technology is constantly evolving, which means new gadgets, devices, and services are continually arriving to make life easier and more entertaining than ever before. Today, people have the world's knowledge at their fingertips, can watch the latest movies and television shows on the go, and can communicate instantly with almost anyone they know.

A hundred years ago, much of today's tech might well have seemed like magic. However, you don't have to go back nearly that far to reach a point when the technology we use today would have seemed outlandish and impossible. These gadgets and services are now part of everyday life and commonplace around the globe. Yet to someone living in the year 2000, they would have looked more at home in a futuristic sci-fi film than in any realistic vision of the next two decades.

Smartphones

The telephone is not a recent invention. In fact, Alexander Graham Bell was granted the first telephone patent way back in 1876, nearly 150 years ago. For most of that time, the telephone remained largely unchanged as a means of sending and receiving real-time voice communication. There were some advancements, such as the switch from rotary dials to push-button dialing, improvements in sound quality, and reductions in size and weight. However, its main function stayed essentially the same until relatively recently.

Early mobile phones evolved from cordless telephones and were essentially just handheld devices that could make and receive calls. These quickly advanced into more compact and technologically capable machines that could send text messages, play basic games, and access functions such as notes, calendars, and alarm clocks. But even by the year 2000, these mobile phones were severely limited in terms of their functions and there was little indication of what was to come.

Although there were a few early devices that could be considered smartphones, such as the IBM Simon in 1994, they were very expensive and made only in small numbers. That all changed in 2007 with the release of the iPhone. It featured a multi-touch capacitive touchscreen and could access the internet through the Safari browser. From 2008, it also gained the App Store, which offered a near-limitless range of software downloads. Modern smartphones effectively act as tiny computers that can do everything from streaming content to instant messaging. They have become an indispensable part of our lives in a way that seemed impossible just a few years earlier.

Social media

Social media is now a huge part of most people's lives. It lets people post photographs and videos for others to see, share important events with friends and family, and keep in touch with everyone they care about. There are dozens of social media sites and apps, with some of the most popular being Facebook, Twitter, Instagram, and WeChat. Yet the very idea of social media would have seemed foreign to people in 2000, a time when only around 50% of people in the U.S. used the internet at all.

Because social media depends so heavily on the internet, it makes sense that social media sites and apps didn't properly take off until the technology was in place to support them. The precursors of today's social media experience were sites such as SixDegrees.com and Classmates.com, which allowed users to sign up and connect with friends. However, they offered only basic functionality, often limited to posting text updates or sending messages, and were not widely used.

It wasn't until around 2003 that social media really began to take off. Friendster and MySpace were among the first popular examples, letting users express their own personality on profile pages and interact with friends and family members online. Facebook soon followed, and social media companies rose rapidly in popularity, eventually coming to dominate internet usage around the world.

GPS maps

It was not that long ago that people had to rely on paper maps when traveling or trying to find an unfamiliar location. Passengers in cars often acted as navigators for the driver, while tourists in cities around the world could be seen with folded maps showing where each major landmark and sight was located. That's no longer the case, though, as digital maps have almost completely replaced paper maps, at least for everyday use.

One of the key technologies behind modern map apps is the Global Positioning System (GPS). A GPS receiver picks up radio signals from several satellites and uses the time each signal takes to arrive to work out its distance from each one. This process, called trilateration, lets it determine its position quickly and accurately. Meanwhile, digital map software has its origins in the 1990s, when companies such as MapQuest began offering online services that gave users customized directions. These websites essentially turned paper maps into digital road data. Their computer systems could then pinpoint specific locations and calculate the best route between them based on what was known about the roads in question.

Combining these two technologies has allowed developers to create real-time digital maps that are available on practically any smart device via an app or website. They can pinpoint exactly where you are, provide live traffic updates, and let users set waypoints or choose how they want to travel. They are also far quicker and easier to use than any paper map could ever be. To people living in the year 2000, they would have seemed possible only in the distant future.

Contactless payments

Until the early 2000s, the main ways people made payments were cash, checks, and credit and debit cards. Cards were swiped through a reader that read their magnetic stripe, and the customer signed a receipt to verify they were the cardholder. In the UK and various other European countries, chip and PIN cards began to roll out after the turn of the millennium. These cards store the account details on an embedded electronic chip. However, the majority of customers in the United States continued to use the old swipe-and-sign method as late as 2014.

By the late 2000s, though, a new payment method was being introduced. Contactless payments use near-field communication (NFC) or radio-frequency identification (RFID) to let users tap or hold their credit or debit card near a payment terminal without having to enter a PIN or sign anything. It is a quick and efficient way to make small purchases, and it would have looked like magic to people living in the year 2000.

Nowadays, you don't even need a physical bank card to make a payment. Smartphones and smartwatches have their own NFC chips that can make secure payments wirelessly. Services such as Apple Pay can sync your payment details across devices. They may even be more secure than a physical card, because they require biometric data (such as a fingerprint) or a PIN before a payment goes through. Very few people in 2000 would have imagined that you'd be able to pay for your shopping simply by holding your phone or watch up to a terminal.

3G and other wireless internet

The very idea of wireless internet that is always available is something people in the year 2000 might well have laughed at. After all, this was a time when the vast majority of internet users connected through dial-up access, which ran over telephone landlines and was notoriously slow. The spread of broadband in the early 2000s changed that, bringing much higher speeds that made streaming and online gaming practical.

Around the same time, Wi-Fi was just beginning to appear in the world of computing, allowing for wireless internet connections around the home or office. It was followed by 3G, a mobile telecommunications technology that enabled portable internet connections. 3G was first launched commercially in 2001 but didn't see widespread adoption until the late 2000s and early 2010s, when smartphones first began to become popular.

3G has since been superseded by 4G and 5G, which are faster and more reliable. They make it possible to stream content from providers such as Netflix or Spotify seamlessly and to stay constantly connected to the internet. Yet to those using dial-up in the early 2000s, the idea of walking around with a continuous internet connection would have seemed fanciful.

Virtual reality headsets

To those growing up in the '80s and '90s, virtual reality was mostly a trope of popular culture. Films like "Hackers," "The Matrix," and "Tron" portrayed it as a futuristic technology available only to the rich or to massive organizations such as the U.S. military. The idea that people would use virtual reality headsets as part of their everyday lives just wasn't something most people considered.

Of course, that doesn't mean there were no attempts to create virtual reality hardware for the general public. However, high-profile flops such as Nintendo's Virtual Boy showed just how far the technology had to go before a convincing, workable virtual reality device could be mass-produced. Virtual reality did find serious uses elsewhere, though. NASA, for example, used it for training and experiments.

Yet just a decade into the 21st century, companies were developing affordable VR headsets. Increases in graphical and processing power allowed Oculus, HTC, and others to create headsets that people can use to play games, explore far-off locations, and even work. Recent releases, including the Apple Vision Pro, promise to blend digital media with the real world in exciting new ways.

Artificial intelligence

Until the last few years, artificial intelligence was not something most people were concerned about. Aside from occasional warnings about its potential future dangers, AI entered most people's consciousness through films like "The Terminator," "2001: A Space Odyssey," and "Blade Runner." In these films, artificial intelligence brought human-like robotic creations to life or controlled vast systems and automated infrastructure.

That made AI feel like something very far off, possibly generations away from becoming reality. While the artificial intelligence of these films hasn't quite materialized yet, we are much closer than you might think. AI has already been woven into our lives in ways you might not realize. For example, streaming services use it to recommend content to users based on their viewing habits.

But AI has really jumped to the forefront over the last couple of years thanks to generative tools such as ChatGPT and DALL-E. These take prompts and generate text or images in response. ChatGPT in particular can hold an exchange that feels like a conversation with a real person. Meanwhile, virtual assistants such as Alexa and Siri can answer questions and perform tasks on instruction, not unlike HAL 9000 in "2001: A Space Odyssey."

Self-driving and electric cars

If there's one thing movies and television taught us, it's that the future will be filled with advanced cars that can drive themselves, run without gasoline, and even fly. At least, that's the depiction in the likes of "The Jetsons" and "Back to the Future," where futuristic vehicles play a big role in showing how drastically technology would change. We obviously don't have hover cars or personal flying vehicles for everyday use, but self-driving and electric cars are very much a reality.

In the year 2000, an all-electric car wasn't seen as a genuine possibility for the near future. After all, less than a decade earlier, mobile phones such as the IBM Simon needed to be charged for half of the day for just one hour of use. Electric cars began to be developed later in the 2000s, including the 2008 Tesla Roadster, but these models were expensive and produced only in small numbers. It is only in the last decade that electric cars have become more widespread, although they still have some major drawbacks.

Self-driving cars, also known as autonomous vehicles, are capable of driving on roads without direct input from a human. Companies ranging from Alphabet's Waymo to Tesla and General Motors are working on self-driving cars. These vehicles could offer a variety of benefits, particularly for road safety and traffic congestion.

Streaming

The way people consumed media in the year 2000 was much the same as it had been for decades. DVDs and CDs had been gradually replacing VHS and cassette tapes, but the vast majority of people who wanted to watch a movie or listen to music still did so through physical media that they bought or rented. That began to change with the spread of broadband in the early 2000s, as faster internet speeds made digital purchases a reality. The first iPod was released in 2001, and video-on-demand services began to appear online.

However, the real content revolution came in the mid-to-late 2000s with the advent of streaming. YouTube was one of the earliest streaming sites and helped popularize the idea of streaming content rather than downloading it for offline playback. Netflix soon expanded from a DVD rental service into streaming television and movies, while Spotify offered music streaming.

Together with the spread of smartphones and other smart devices and the arrival of wireless internet, streaming made it possible to access almost any piece of content from anywhere. Just a few years before it arrived, the ability to instantly watch or listen to anything you want, wherever you are, was an alien concept.

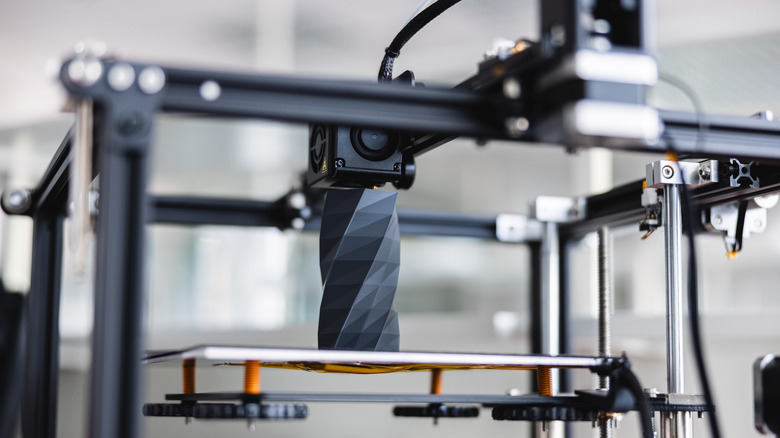

3D printing

3D printing is far from a recent technology and can trace its origins back more than four decades. During the 1980s, early 3D printers were used for rapid prototyping, giving designers the opportunity to create models or prototypes quickly. The technology at that time wasn't suitable for mass production and was used only by specialists. However, the basic process was the same as it is today. Printers build objects up layer by layer, with each thin slice of material joining to the one below it.

Although most people were probably completely unaware of 3D printing, the technology did show promising practical uses. This was especially true in medical settings, where it could be used to create custom body parts for patients. As components became cheaper and the technology advanced, 3D printing became more viable for consumers, and in the 2010s it began to be used more widely.

Now anyone with a 3D printer can print their own models. Need a particular replacement part? You can often find a design for it online and simply print it out. Want to design your own prototype or create a custom tool? 3D printers make that a reality. Yet just 20 years ago, this process would have seemed possible only in futuristic sci-fi films.