Everything To Know About Apple's Point And Speak Feature

Over the years, Apple has repeatedly stressed that the best technology is technology built for everyone. This is particularly evident in the range of accessibility features Apple offers. Earlier this year, Apple brought more accessibility features to iPhone and iPad, targeting people with visual, cognitive, and speech disabilities.

Among them are improvements to Detection Mode in the Magnifier app, which lets the camera help people see and understand the world around them. The most notable feature in this package is Point and Speak, which, as the name suggests, is aimed at people with vision difficulties.

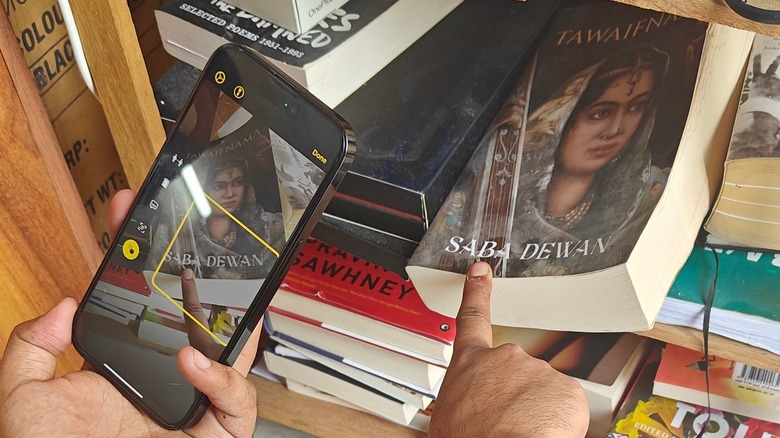

All you need to do is point the camera at an object and use a finger to indicate a particular element in the frame. The phone will then read the text aloud and offer a description. It works like a visual and audio assistant for the world seen through the phone's camera, with machine learning algorithms working behind the scenes to make sense of it.

How to enable Point and Speak

To use Point and Speak, your iPhone must be running iOS 17, or your iPad must be running iPadOS 17. Both versions are now publicly available, so make sure you have the latest build installed on your device. With software compatibility out of the way, follow these steps to activate the feature:

- Open the Magnifier app on your iPhone or iPad.

- On the main screen, tap the Detection Mode icon, which looks like a camera frame, along the right edge of the screen.

- Once Detection Mode is on, tap the Point and Speak icon on the left. It looks like a finger pointing at a block of text.

- Hold your phone close to the text printed on the object you want to read.

- Next, with the index finger of your other hand, point at the text you want the phone to read aloud.

- The phone automatically draws an on-screen frame around the object you are pointing at and reads its text aloud.

For best results, clean your phone's camera lens and hold the phone steady. It's normal to hear a few audio cues telling you to keep the phone still, which helps with more accurate object and text recognition.

Accessibility limited by access

Apple says that Point and Speak combines machine learning algorithms with input from the camera and the LiDAR Scanner, which captures depth information in videos and photos. With iOS 15.4, Apple opened the LiDAR sensor to third-party developers, allowing them to build apps and experiences that make use of it.

Apple's own apps already put LiDAR to work. For example, the Measure app can gauge a person's height, measure the width of an object in the frame or the distance between two points, and more. The sensor also enables faster autofocus in low-light photography, previewing AR furniture in your home, and playing AR games, among other uses.

Despite its immense potential, LiDAR is quite limited in reach, because the sensor is only available on a handful of Pro iPhone and iPad models, which tend to be expensive.

Because Point and Speak depends on LiDAR, only the following devices currently support it:

- iPhone 12 Pro, iPhone 12 Pro Max, or a later Pro model

- iPad Pro 11-inch (2nd generation) or later

- iPad Pro 12.9-inch (4th generation) or later

More accessibility features to try in Magnifier

The Magnifier app offers many helpful features for day-to-day life, and most of them don't require a LiDAR sensor. For example, if you come across text that is hard to read, Magnifier can apply color filters, turn on the flashlight for extra light, and adjust brightness and contrast to make the text legible. You can even save the frame to your device for future reference.

Then there is People Detection, which tells you how far away people around you are, helping you keep a safe distance in situations with proximity-related risks such as contagious diseases. Magnifier also has Door Detection, which recognizes doors and tells you how far away they are. Like Point and Speak, both of these features rely on the LiDAR Scanner.

In the same vein as Point and Speak, Magnifier also offers an image recognition feature that lets you scan your surroundings and have objects identified with text and spoken descriptions. Think of it as Google Lens, but geared toward people with accessibility needs.