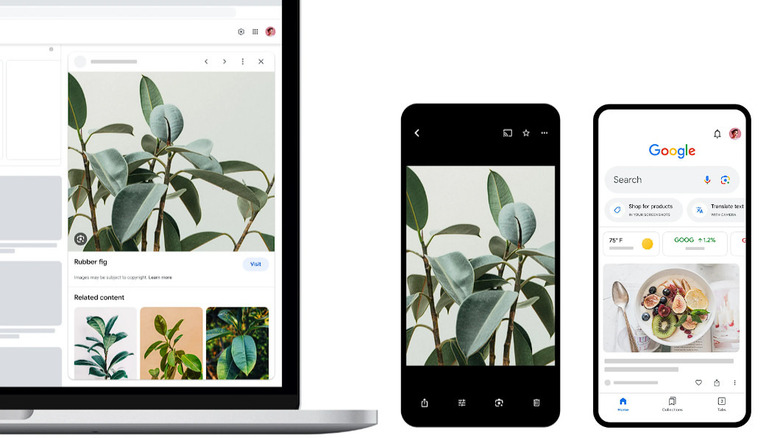

11 Essential Google Lens Hacks Every Android User Needs To Know

Lens is one of the coolest things Google has going on, and it seems that very few people are paying it much attention. It's taking a branch of machine learning called "computer vision" and refining a bunch of earlier Google efforts like Google Glass, Google Now, and Google's aborted Tango project. What comes out the other end isn't merely a hyped-up image search but some truly intuitive and profoundly useful capabilities.

It's fair to say that Lens is a work in progress, but that phrase probably gives the wrong impression about its completeness and usefulness. If history is any indicator, Google will continue to improve Lens until it is spectacular and indispensable and then cancel the project altogether. Until that happens, you should make the most of it.

Lens exists as an app for Android and iOS, but there are other ways to get at most of its functionality. On a desktop computer, you can find a slightly impoverished version integrated with Google Photos. Lens can also be enabled within Assistant, and it's directly available within some smartphone camera apps.

Google Lens would like to know more

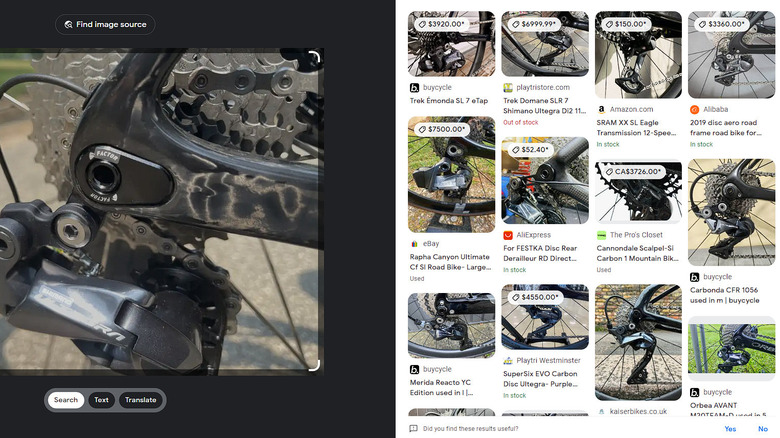

It's somehow both obvious and counter-intuitive that you can refine your Lens image search by simply adding a text prompt to detail what you're looking for. One of Google's own examples of this is a photo of a bicycle's gear cassette and derailleur. The search changes completely when you clarify with the text "how to fix this."

This relatively new Lens functionality, circa 2022, is called multisearch, and it's key to making Lens useful in a lot of circumstances. On their own, image searches can be ambiguous: a photo of a camera could be taken by someone looking to buy a camera, someone trying to understand how to use a camera, or someone who's never seen a camera and is wondering if it's some new kind of phone. Text can be a lot clearer for most people and all computers, so it's a natural way to disambiguate your queries, assuming your moral tradition allows you to disambiguate your queries.

[One-half of the featured image by Glory Cycles via Wikimedia Commons | Cropped and scaled | CC BY 2.0]

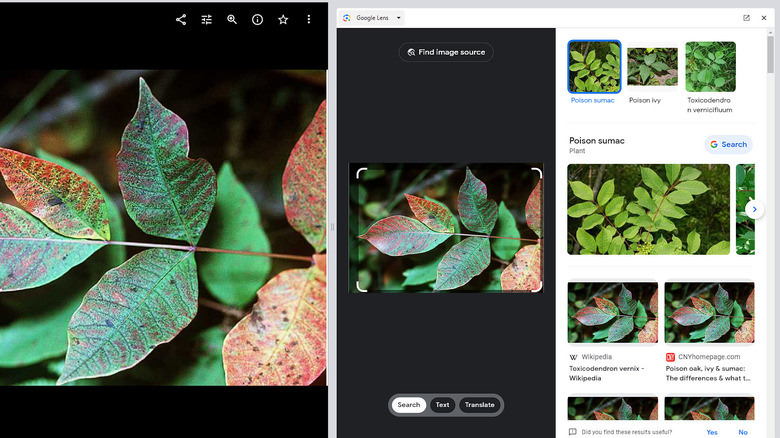

That plant you like is poison sumac

Plant identification used to be a matter of buying $200 worth of plant guidebooks and then asking someone when you couldn't summon the energy to thumb through $200 worth of guidebooks. Next came a handful of apps that were almost great at identifying plants and critters. All of this has culminated, so far at least, in Google Lens, and being lazy is now easier than ever: just snap a photo and consult Lens. (Easy is the best kind of lazy, after all.)

The beauty of Lens as an identification tool is that it works on insects, birds, pets, and zoo animals, too. Everything, really, because it can do dresses, microbes, and cave paintings as well. But field identification of living things is a category unto itself, and Lens happens to be great at it. Is that bug a termite? Why do people keep calling that little bird a chicken when it looks nothing like a chicken?

And is that a polar bear or some kind of dog in my neighbor's yard that menaces absolutely everything that moves on our street? Some of these are pretty important questions, and assuming your neighbor doesn't mind your taking photos of his Great Pyrenees, Lens is there to help you. (For the record, the chicken is a modern game hen and yes, you have termites.)

[One part of the featured image by Robert H. Mohlenbrock @ USDA-NRCS PLANTS Database / USDA SCS. 1991 via Wikimedia Commons | Scaled | Public Domain]

You don't need your reading glasses

Don't skip this one. You don't need to suffer from age-related presbyopia to benefit from Lens and its ability to read tiny text and even act on it. Have it read the minute text on an AC/DC adapter, for example, or zoom in and identify that pendant Bon Scott is wearing on an AC/DC album cover. It will not, as far as we know, read and help you understand the small print in end-user license agreements. But no one expects you to understand that small print, anyway.

The best such use we've heard of is scanning the login sticker on the back of your Wi-Fi router and having Lens log in automatically. You might want to do this to set up a guest Wi-Fi network or add a VPN. If it works, this is a true miracle of technology and a boon to those of us with aging eyes or dispositions incompatible with reading and then typing the utterly insane strings of characters some router manufacturers use as default passwords.

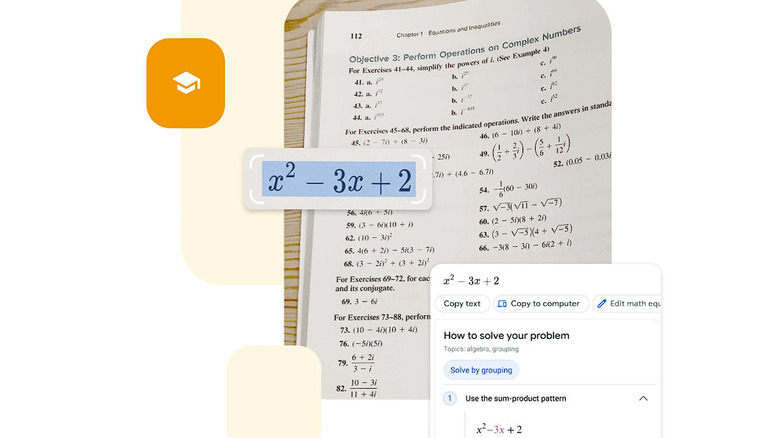

Get help (not just answers) with your homework

Lens is known to be quite capable when it comes to solving handwritten math problems. But if you're interested in actually passing the eventual exam, you can also get targeted help with math, history, and science using the built-in homework help filter.

As an AI-adjacent technology, Lens occupies a space people aren't altogether comfortable with. In that respect, it enables its own misuse, much like a 1.8-trillion-parameter large language model or a calculator app. Lens uses Google's Socratic platform for learning all manner of topics, and it provides step-by-step guidance and detailed explanations for all types of math problems, including hatred of mathematics (which it solves by ultimately giving you all the answers).

If there's more than one way to accomplish a math task, Lens will show you how to do each of them. According to Google, this functionality and numerous other tools emerged from the COVID-19 pandemic, which forced parents into roles as teachers and eventually sent students back into classrooms not necessarily prepared to pick up every subject where their parents had left off.

What Lens can't do is tell you whether the American "math" is better than the British "maths." But it hardly matters, since your computer is telling you the answers anyway. As they say, "math... not even once."

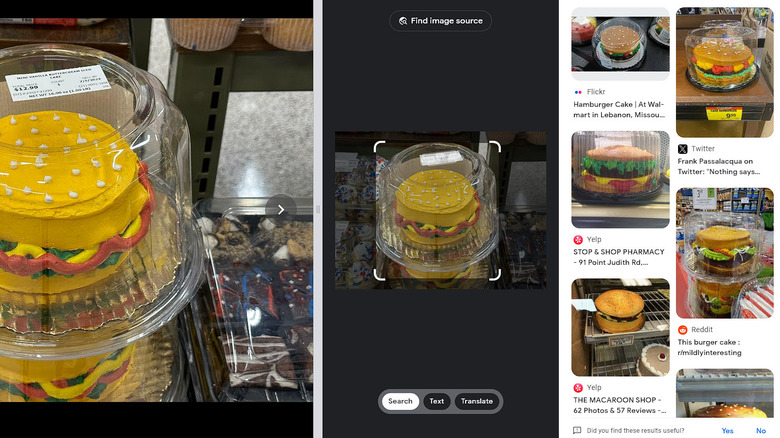

The hamburger cake is not a lie

YouTuber Japancake, a former Google employee who worked on Lens and might have a thing about cakes, shows what happens when you run a picture of a cake that looks like a hamburger through Lens. Amazingly, it recognizes the hamburger cake and shows what others have done in the art form.

What this tells you is that Lens is a great tool for exploring the curiosities that make life interesting. It's accurate enough to distinguish a burger cake from an actual burger (or standard cake, for that matter) and complete enough to track down many other examples of hamburger cakes, along with some recipes.

Lens can help you understand why people do the quirky things they do, and it can help you out if you have some quirkiness of your own to indulge. It's a great way to take one example of a thing, even an unusual thing, and dig deeper into all the mutations and permutations it can find, leading to a better understanding or at least a better-looking cake.

[One part of the featured image by Phillip Pessar via Wikimedia Commons | Scaled | CC BY 2.0]

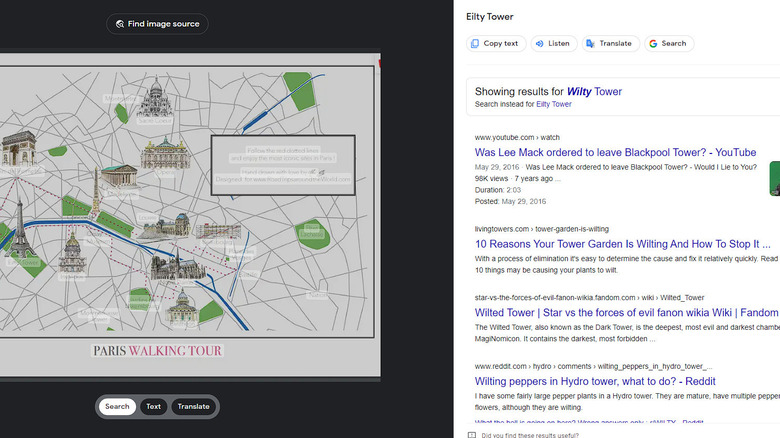

Tourists should be glued to their phones

The augmented reality (AR) aspects of Lens can be truly remarkable. The software's translation function, for example, works on your screen in real time, letting you walk through a cityscape, subway station, or market while your phone translates everything instantly on-screen. The instant translation is fast and good enough that Google Translate now uses Lens to translate text in images.

To try this, you need not travel to a foreign land. Just open Lens, tap the camera icon, tap Translate, and change the destination language to something you're vaguely familiar with. Then point your camera at stuff around your home or office — anything will do. You'll get a good sense of just how quickly Lens translates in AR mode and how valuable that could be for a traveler, whether for business or pleasure.

For kicks, we fed Lens a Paris walking tour map via Google Photos to see how the translation function fared. When Lens could read the words, its translation was spot on, and the information delivered in the search pane was on target. But Lens clearly had trouble with any sort of visual interference, which led to errors like misreading "Eiffel Tower" as "Eilty Tower" and then suggesting "Wilty Tower" instead, as if "Eilty Tower" were a typo we had made.

Making sense of what you're seeing

Searching your device's screen might seem like an odd goal, but it's a notion with a lot of appeal that Google has been working on, in some form, for more than a decade. Like Google's Now on Tap (circa 2015), Lens can look at your device's screen and offer details and context about whatever it finds there. Unlike Now on Tap, which failed to deliver, Lens's screen search seems to be fulfilling its promise.

This one was a little finicky to get started. You have to invoke it from Assistant, which can overlay other apps. After a few moments of fiddling with Assistant settings, we got a Lens button on the default Assistant "How can I help?" screen. Tapping that allowed us to search our device screen. Initial results tended toward the obvious. On a screen with a Kali Linux story and a stock image of a guitar, Lens suggested a bunch of info about the Kali logo itself and somewhat random guitar-related links.

When we refined the search with text, things got better, but one shortcoming became obvious. Lens via Assistant apparently uses screenshots to search, in effect ignoring interactive content on the page. This includes links on web pages, which seems like an unfortunate source of information for a company like Google to ignore.

Remote control your sister's term paper

Think of all the ways this could go wrong or very, very right. You can view some text in Lens and copy it to the clipboard... on your computer 2,000 miles away. It might be useful for capturing text from a book and pasting it into a research paper, but it might also be useful for some epic pranks.

In a less mischievous mode, the "copy to computer" functionality has some great real-world applications and takes a direct swipe at Apple's Universal Clipboard. Found the information you want to include in a term paper? Want to take note of nutrition information for later review? Speeding up your espionage operation by communicating secret documents via text rather than images? Snap a pic, copy the text, and send it to your computer. For this to work, you need only be signed in to Chrome on the receiving computer with the same Google account you use on your phone.

This feature also works with handwritten content, although its accuracy depends on how clear the source writing is. Without a scanner, how else are you going to get all of the poetry you wrote as a teenager onto your Facebook page?

Get an informed guess about your health

Google Lens natively attempts an informal diagnosis of whatever skin condition is freaking you out, using AI to compare your photo of your skin with all the skin it's ever seen, including a bunch of images with definitive diagnoses. But Google is quick to point out that you aren't getting a definitive diagnosis here, just the benefit of someone else's.

The technology seems to be related to Google's DermAssist device, which is certified for use in the EU but, as it's not (yet) FDA-approved, is unavailable in the U.S. The Lens skin condition functionality, on the other hand, is only available in the U.S., suggesting that Google is trying to get whatever mileage it can from DermAssist's AI technology while it seeks FDA signoff.

The DermAssist product, demoed by Google at I/O 2021, predictably has a lot more functionality than Google has included in Lens. But Lens is not a medical device, and DermAssist's functionality can only improve with data gathered by Lens.

Let Lens narrow your choices

Faced with a shelf of almost anything, it's easy to find yourself in a sort of decision vapor lock. Sometimes, you can shake yourself out of it, and sometimes, you won't bother, but it seems obvious that our decision-making process is inefficient when we're faced with too many similar choices. (See also: online dating.)

One aspect of Lens's "scene exploration" feature is that you can photograph a bunch of similar objects and then start narrowing them down by adding keywords. For example, a dad baking cookies for a lactose-intolerant child might wonder which products in the baking aisle don't have milk. Just snap a photo of all the options and start telling Lens you want the dairy-free ones (and any other refinements you want to specify).

It's not hard to see how this can help with comparison shopping, selecting your summer vacation books, or lots of other scenarios. If you're ever in the market for a used car, just think how much your experience at a car lot could be streamlined by simply snapping a shot of your available choices and letting Lens do the legwork for you. Bonus: It might freak out the salespeople.

Pronunciate it right

Science of People's list of commonly mispronounced words is like a quick tour of human weirdness. Sure, if you haven't said "açai" wrong at some point, you might be an AI construct. But exactly who is it that's mispronouncing "anyway," "clothes," and "numb"? (Surely Pink Floyd alone eradicated any possible mispronunciation of "numb.") And who, outside of BBC period comedies, even says "flibbertigibbet"? But if you find yourself applying for a job as a salmon fisherman or a nuclear scientist, you're going to want to pronounce "salmon" and "nuclear" correctly, and Lens has your back.

Once Lens has overlaid an image with OCRed text, just highlight the word you're curious about and tap Listen. A non-robotic voice will say the word aloud for you, after which you are free to continue saying it wrong for the rest of your life, comfortably confident in your false memory of what Lens told you and numb to the universal fact that what we want to say trumps what we're supposed to say.