Meta's Latest Llama AI Tool Can Fix Your Code Mistakes

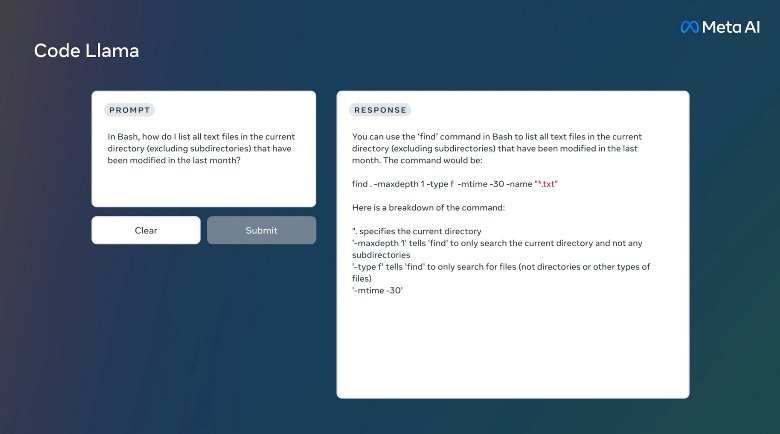

After releasing generative AI models that can handle text, audio, and translation, Meta has now turned its attention to code. The company has introduced Code Llama, a version of its large language model that is tailored specifically to coding. At its core, the model turns text prompts into code, much as ChatGPT turns a prompt into a few paragraphs or DALL-E 2 turns one into an image.

Meta isn't the first player to bring generative AI to the world of coding. For example, Microsoft recently integrated GPT-4 capabilities into GitHub Copilot to assist developers. In April 2023, Google also added advanced reasoning and mathematical capabilities to its Bard AI, allowing it to assist with programming tasks like code generation. With Code Llama, Meta wants to let users generate original code and also get help with fixing their existing code.

"It has the potential to make workflows faster and more efficient for developers and lower the barrier to entry for people who are learning to code," says Meta. Prompts can be written in natural language or supplied as code snippets. Depending on the nature of the prompt, Code Llama can generate new code, act as an autocomplete engine (like GitHub Copilot), or help resolve bugs. Right now, Code Llama supports some of the most common programming languages, including C++, Python, JavaScript, and PHP.

Different models for different needs

Meta trained Code Llama on datasets composed, unsurprisingly, of code. The company claims that Code Llama outperformed its rivals on popular coding benchmarks such as HumanEval. Meta is releasing Code Llama in three sizes: 7 billion, 13 billion, and 34 billion parameters. The smallest model suits less demanding tasks, whereas the largest needs more powerful hardware but offers greater capabilities.
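To get a rough sense of why the larger sizes need more hardware, the sketch below estimates the memory needed just to hold each model's weights. It assumes 16-bit weights (2 bytes per parameter), which is an assumption of this example and not a figure from Meta; real usage is higher once activations and caches are included.

```python
# Rough weight-memory estimate for each Code Llama size.
# Assumption (not from Meta): weights are stored as 16-bit floats, 2 bytes each.
BYTES_PER_PARAM = 2

# Parameter counts for the three released sizes.
MODEL_SIZES = {"7B": 7e9, "13B": 13e9, "34B": 34e9}


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Return the memory needed to hold the weights, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in MODEL_SIZES.items():
        print(f"{name}: about {weight_memory_gb(params):.0f} GB of weights")
```

Under that assumption, the 7B model needs about 14 GB for its weights alone, which is consistent with it fitting on a single high-memory GPU, while the 34B model needs several times that.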

For example, the basic 7B tier can run on a machine with a single GPU and is suited to low-latency tasks like code completion. The 13B model offers a slightly more powerful fill-in-the-middle (FIM) capability, while the 34B variant is for experts seeking advanced code assistance with heavy code generation, block insertion, and debugging, assuming they have the hardware to handle it.
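Fill-in-the-middle means the model sees the code before and after a gap and generates the missing piece. A minimal sketch of how such a prompt could be assembled follows. The `<PRE>`, `<SUF>`, and `<MID>` markers follow the prefix-suffix-middle infilling format described for Code Llama, but the exact spacing and the helper name `build_fim_prompt` are assumptions of this example; in practice the tokenizer treats these markers as special tokens rather than plain text.

```python
def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Arrange the code around a gap in prefix-suffix-middle order.

    Hypothetical helper for illustration: the model would be expected to
    continue after the final marker with the code that belongs in the gap.
    """
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


# Code before the gap (the function body is missing) and code after it.
prefix = "def add(a, b):\n    "
suffix = "\n\nprint(add(2, 3))"

prompt = build_fim_prompt(prefix, suffix)
print(prompt)
```

An editor plugin could build a prompt like this from the text on either side of the cursor, then insert whatever the model returns at the cursor position.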

Additionally, Meta created two other variants of Code Llama for specific use cases. Code Llama - Python targets Python, which is one of the most widely used programming languages for AI and machine learning tasks. The other is Code Llama - Instruct, which is better suited to natural language prompts and aimed at non-experts looking to generate code. Of course, it isn't perfect, though Meta does claim it delivers safer responses than rivals. Meta has released Code Llama on GitHub alongside a research paper that offers a deeper dive into the code-specific generative AI tool.