Analog Vs Digital Signals: What's The Difference, And How Do They Work?

The distinction between analog and digital signals is very simple, but it can still be a challenge to wrap one's mind around the concepts that define the two systems. Just when you think you've got it, you run across an argument on some YouTube video that half-convinces you everyone else is wrong.

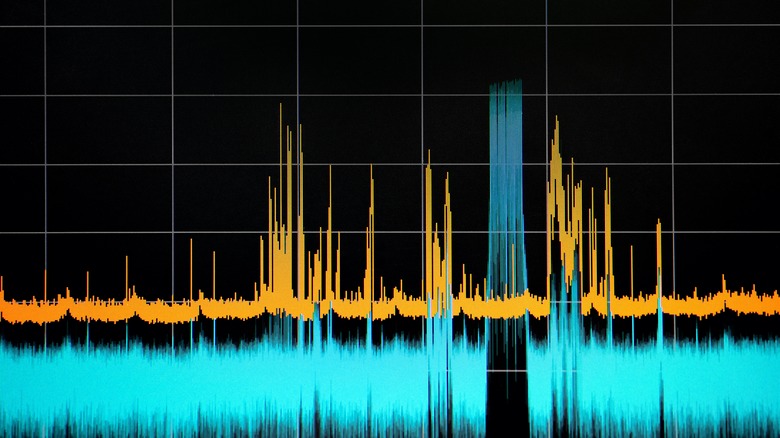

The trouble isn't that it's insurmountably difficult to understand. It's that we're treating these things as mutually exclusive constructs with natural, inviolable distinctions, but it's not that simple. One of the principal facts behind the distinction is that the very form of radio waves is analog, but we know it's possible via amplitude or frequency modulation to encode binary digital values into an analog wave. Doing so might violate our own rules, but not any natural laws. There are many examples, like a digital clock made of Corian and wood that acts in a decidedly analog fashion.

All of this is okay because the terms serve their purpose anyway. Analog signals are structured, created, transmitted, and interpreted for the use of analog equipment. The same goes for digital signals and digital equipment. As long as a signal performs these missions cleanly, it may be considered analog or digital without reservation.

By the way, nothing says that analog or digital signals have to be electrical, but in most cases that's the topic at hand, so we'll mostly talk about electrical signals here.

Electrical signals can be either digital or analog

Let's begin our investigation with a convenient lie: electrical signals can be either digital or analog. We lie to you this one time to illustrate a point that plagues most discussions of this topic — explainers have a habit of taking the easy way out, and the easy way is not always entirely true.

The truth, in this case, is that electrical signals can be read as either digital or analog, but the underlying arrangement and flow of electrons is always analog from a certain point of view. This sounds odd but isn't surprising. If you write a string of zeroes and ones on a piece of paper, you will have constructed a digital sequence, but using pencil lines that might be called analog and are certainly not themselves digital. Some argue that you can no more make an electrical signal digital than you can a pencil mark, and this will have very real implications when we later attempt to ascertain what kind of thing digital actually is.

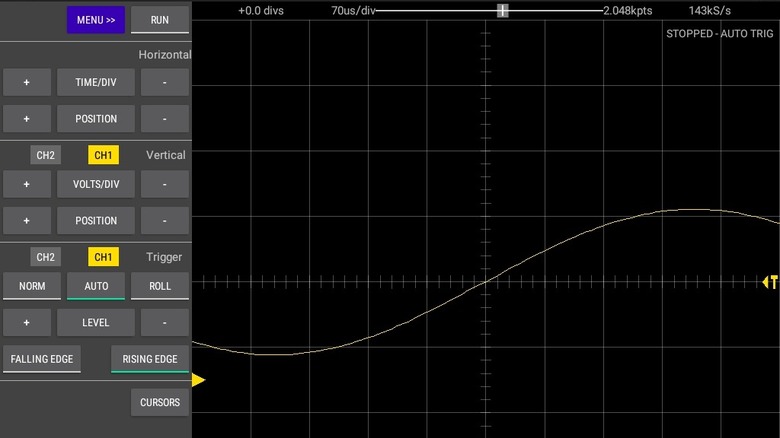

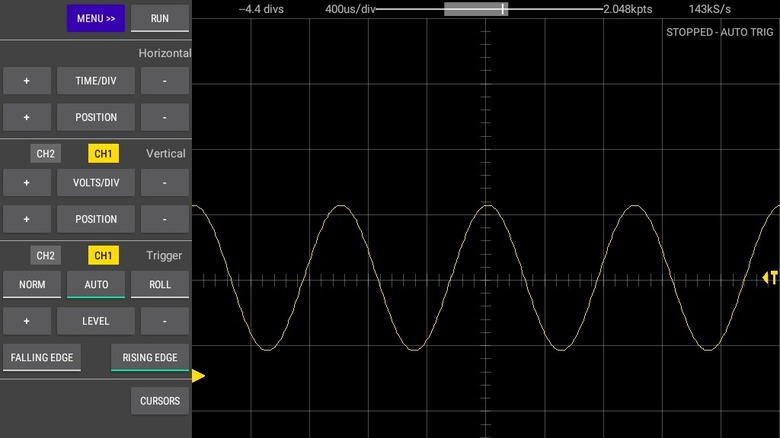

However, there is a common understanding of how signals can be thought of as digital — whether or not they actually are. How these digital and analog signals work is simplified by visualization, like most things. In the case of electrical signals that often means looking at their representation on an oscilloscope, and we're including a couple of screen captures from an oscilloscope in hopes of clarifying things a little.

Analog signals are continuous-time signals

If you're looking at a graphed signal that looks like a smooth (and possibly curved) line no matter how far you zoom in, you're looking at an analog signal. This is true because analog signals are continuous in time and often continuous in whatever value is being tracked — but we'll come back to that.

When an oscilloscope (we used a DIY Raspberry Pi-compatible oscilloscope called Scoppy for this article) displays an electrical signal as a sine wave like the one above, the most basic information it's providing is the amplitude and time period. Amplitude is the maximum value of the signal on the vertical axis, expressed in volts. The time period is shown on the horizontal axis and is the time required for a signal to complete a cycle. You'll often hear about frequency instead of period — frequency is simply the number of cycles that take place in one second, measured in hertz (Hz).
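The relationship between period and frequency is easy to check with a few lines of Python. This is only a sketch: the 1-millisecond period and 3.3-volt amplitude are made-up values rather than readings from our oscilloscope captures, and the function names are our own.

```python
import math

def frequency_from_period(period_s):
    """Frequency in hertz is the reciprocal of the period in seconds."""
    return 1.0 / period_s

def sine_voltage(amplitude_v, frequency_hz, t_s):
    """Voltage of an ideal sine wave at time t_s (in seconds)."""
    return amplitude_v * math.sin(2 * math.pi * frequency_hz * t_s)

# Hypothetical signal: one full cycle every millisecond, peaking at 3.3 volts.
period_s = 0.001
amplitude_v = 3.3

frequency_hz = frequency_from_period(period_s)

# A quarter of the way through a cycle, a sine wave sits at its peak,
# so this value is (very nearly) the amplitude.
peak_v = sine_voltage(amplitude_v, frequency_hz, period_s / 4)

print(f"frequency: {frequency_hz:.1f} Hz, peak: {peak_v:.2f} V")
```

Because `sine_voltage` accepts any time you care to give it, it also hints at what continuity means: there is no moment for which the ideal wave lacks a value.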

So, analog signals are continuous at any time scale. If you zoom in or out on an oscilloscope (that is, if you change the range of time displayed), the line representing the signal will continue to be continuous from one point in time to the next.

Analog signals have infinite resolution

So what does this time continuity really mean? When we say that you can zoom in on a waveform and always see a continuity from any time to any other, it means you can divide the time an infinite number of times and always see this continuity, and always get a value (voltage or current, usually) for any time.

Try this: start walking toward a wall, moving halfway to the wall every 30 seconds. If you could actually manage to do this with increasingly small distances, you would never reach the wall. This is because the analog values can be divided infinitely. This does not mean there's necessarily any value in such high resolutions. It's likely that you will quickly reach a level of resolution that can't be perceived by living things and can't be used by equipment. Still, in theory, any possible point in time will be represented in an analog signal.

One common way of illustrating this is by using clocks. An analog clock is usually a round device with physical hands that move smoothly around a clockface numbered with values 1 through 12. These are smooth sweeping clocks with second hands that don't tick. So between any two points along the second hand's sweep, you can zoom in infinitely and keep seeing smooth motion. Digital clocks, on the other hand, can only display a limited number of discrete values — say, integers from 0 to 59 or 1 to 12.

Even a clock that can show thousandths of a second shows discrete values, with nothing in between one value and the next. Similarly, even the highest-resolution digital waveform can never be as detailed as an analog waveform. However, it can certainly be detailed enough.

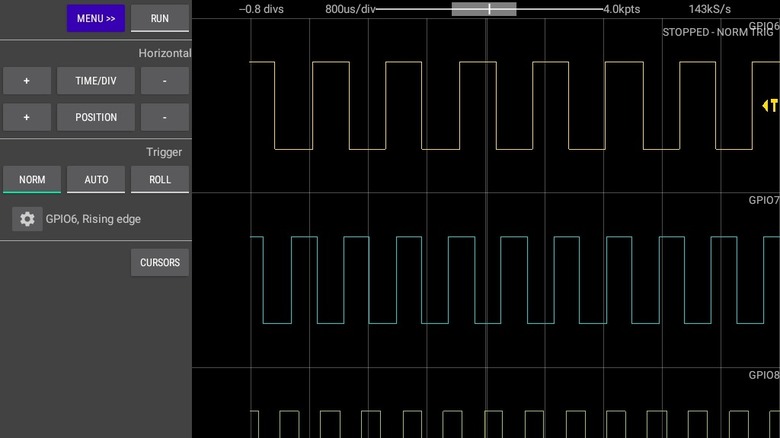

Digital signals are discrete

Digital signals, on the other hand, necessarily have values from a discrete and limited set of possibilities. Digital signals can work in a couple of ways but will always have discrete values. These discrete values could, taken as a whole, approximate an analog waveform but without true continuity. Instead, zooming in on the wave would reveal a stair-stepped jaggedness, where each horizontal step represents a specific discrete value. In this sort of digital signal, there is a finite set of possible values, but that set can be very large and can cover a very broad range.
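The stair-step effect can be sketched by snapping a smooth sine wave to a fixed grid of allowed values. This is an illustration under our own assumptions, not a model of any particular device: the 0.25-volt step and the 17 sample times are arbitrary choices, and `quantize` is a name we made up.

```python
import math

def quantize(value, step):
    """Snap a continuous value to the nearest multiple of step."""
    return round(value / step) * step

STEP_V = 0.25  # hypothetical spacing between allowed values, in volts

# Sample one cycle of a 1-volt sine wave at 17 evenly spaced times.
times = [i / 16 for i in range(17)]
analog = [math.sin(2 * math.pi * t) for t in times]

# Each sample is forced onto the nearest allowed value.
digital = [quantize(v, STEP_V) for v in analog]

# However many samples we take, only a handful of distinct values appear.
levels = sorted(set(digital))
print(levels)
```

With a step of 0.25 volts, a wave that swings between -1 and 1 can land on at most nine different values. Shrinking the step adds more stairs, but the set of values stays finite.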

Another approach, more familiar to people who think of digital as a series of ones and zeroes, is the square waveform. This looks like a wave but is made of boxy shapes rather than curves. In the case of pulse-width modulation (PWM), a technology for controlling many things like LED lights, each of these boxes represents an On state. When the value retreats to near zero and no box is present, that's an Off state.

Devices like servos and LEDs are often controlled with PWM. What's interesting about PWM is that the On state represents 100% power and Off represents 0% power. So the brightness of an LED is controlled by the amount of time spent in the On state. If it's on exactly as much as it's off, it's said to have a 50% duty cycle, and the LED is somewhere between fully bright and fully dimmed. The fact that there are a limited number of possible values (on and off) makes this a digital signal.
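Duty cycle is simple arithmetic, and a short sketch makes it concrete. The function names and the choice of 10 samples per period are ours, purely for illustration; real PWM hardware is configured differently from one microcontroller to the next.

```python
def pwm_wave(duty, samples_per_period, periods):
    """Build a square wave as a list of 1s (On) and 0s (Off)."""
    on_samples = round(duty * samples_per_period)
    one_period = [1] * on_samples + [0] * (samples_per_period - on_samples)
    return one_period * periods

def measured_duty(wave):
    """Fraction of the time the signal spends in the On state."""
    return sum(wave) / len(wave)

# Three periods at a 50% duty cycle, 10 samples per period.
half = pwm_wave(0.5, 10, 3)
print(half[:10])            # first period: five 1s, then five 0s
print(measured_duty(half))  # 0.5

# A 20% duty cycle spends far less time On.
dim = pwm_wave(0.2, 10, 3)
print(measured_duty(dim))   # 0.2
```

Notice that every sample is either 1 or 0. The in-between result comes entirely from timing, which is why the signal still counts as digital.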

Digital is not the same thing as binary

Those people we spoke of who think of digital as a series of ones and zeroes are often right but are fairly often wrong as well. The discrete values in a digital signal don't have to be ones and zeroes, or On and Off, to meet the requirements of digitalness. However, we often think of digital as equivalent to binary, for which we have computers to blame.

Binary (base 2) numbers are a very clear distillation of what it means to have a discrete set of possible values. This is how the vast majority of modern computers work, and the 1/0 (transistor on/transistor off) duality makes it possible to store and manipulate data with minimally complex components. A signal that includes binary data is an example of a digital signal, but there are many others.

One such non-binary digital signal is Morse code, the dots-and-dashes method of communicating by telegraph, along with similar signals beamed from Earth to track down aliens of unknown analog/digital designation. But wait, you're thinking, dots and dashes are two states, so it is binary. Well, you wouldn't be alone in claiming this, but Morse code has at least three states: dot, dash, and off (silence, the absence of dots or dashes).
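You can see the third state for yourself by encoding a short message. The sketch below uses a tiny four-letter subset of the Morse alphabet and represents silence between letters with a space, which is our own simplification of real Morse timing.

```python
# A small subset of the Morse alphabet, enough for a demonstration.
MORSE = {"S": "...", "O": "---", "E": ".", "T": "-"}

def encode(text):
    """Encode text as dots and dashes, with a space (silence) between letters."""
    return " ".join(MORSE[ch] for ch in text.upper())

message = encode("sos")
print(message)  # ... --- ...

# Count the distinct symbols the message actually needs.
symbols = set(message)
print(len(symbols))  # 3: dot, dash, and silence
```

Without the silence, "SOS" would collapse into a run of nine marks with no way to tell where one letter ends and the next begins.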

So why all the confusion?

It might have already occurred to you that the distinction between analog and digital signals is plagued with vagueness, exceptions, and alternatives. This sort of imprecision is often the sign of a flawed concept, but in this case, it usually has to do with confusion over terminology.

Take the very idea of a signal, for example. A signal carries data, and as such it represents some other thing, potentially in a handful of ways. A digital audio signal, for example, will contain information about frequency and amplitude and possibly a lot of other things, depending on the digital format. However, the data is not necessarily encoded in the signal in the same way that equipment using or storing that data might encode it. A digital signal can be binary, but it can also be a much larger set of values, so long as it's a finite set. Otherwise, it's analog.

Conceptually, it's natural to wonder if it's actually the interpreting devices that make a given signal digital or analog rather than a clearly defined set of characteristics of the signal itself. Engineers will usually vociferously reject the idea of digital as an interpretive overlay that's applied to a signal by the equipment that is interpreting that signal. Still, it's a point of view worth considering, especially given that all electrical signals are analog in a sense, and some are simply shaped in a digital fashion.

Think back to the clock we discussed earlier, but make it a ticking watch this time. Since it appears to have discrete values, at least in seconds, does that make it a digital device? It is, to some degree, a matter of perspective.

Digital circuits are useful

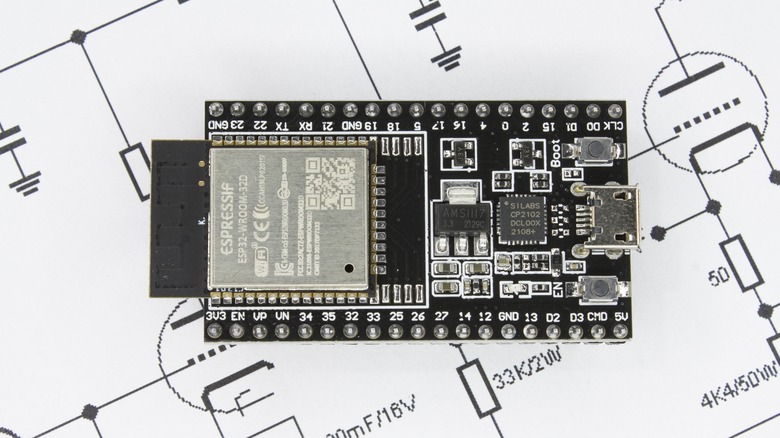

So, if you can have analog computers and analog signals that naturally contain more information, what's with all the digital tech? There are a lot of reasons, but the key is probably that it's far simpler to accomplish most things with circuits and gear that act on digital signals. There are programmable analog devices, but they're immensely more complex than their digital counterparts. You can easily process and modify digital signals directly with programming rather than analog circuit wizardry, which is far more difficult and expensive.

Perhaps even more importantly, digital signals suffer from less interference than analog signals. More precisely, they suffer less from interference. The underlying electrical signal is as susceptible to interference as any other. However, the clarity in the discrete values of a digital signal insulates it from the sorts of noise, misinterpretation, and shifts that can foul an analog signal with too much interference.
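Here is a rough sketch of why discrete values shrug off noise. We assume a hypothetical 3.3-volt logic signal read against a threshold halfway up, and noise of up to 1 volt in either direction; none of these numbers come from a real device.

```python
import random

HIGH_V = 3.3        # hypothetical voltage for a 1
LOW_V = 0.0         # hypothetical voltage for a 0
THRESHOLD_V = 1.65  # anything above this reads as a 1

def add_noise(voltages, max_noise_v, rng):
    """Shift each voltage by a random amount within +/- max_noise_v."""
    return [v + rng.uniform(-max_noise_v, max_noise_v) for v in voltages]

def read_bits(voltages):
    """Interpret each voltage as a 1 or a 0 by comparing it to the threshold."""
    return [1 if v > THRESHOLD_V else 0 for v in voltages]

rng = random.Random(42)  # fixed seed so the run is repeatable
bits = [1, 0, 1, 1, 0, 0, 1, 0]

sent = [HIGH_V if b else LOW_V for b in bits]
received = add_noise(sent, 1.0, rng)  # the voltages are now visibly wrong
recovered = read_bits(received)       # but the bits come through intact

print(recovered == bits)  # True
```

The received voltages are all off by some amount, yet every bit is read correctly because the noise never pushes a value across the threshold. An analog receiver has no such threshold to hide behind, so the same noise would become part of the signal. Push the noise past 1.65 volts and the digital signal starts to fail too.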

Another benefit is that it's easy to make exact copies of digital data, so perfect copies can be made very cheaply. This is important if you still buy CDs, but it's even more important if you need to back up your PC.

Converting from analog to digital destroys information

Speaking of CDs, some will argue, quite loudly, that digital isn't necessarily better. This debate once raged through the audiophile community, where drama and disagreement are the order of any given day. The issue is that information can be lost when data is encoded digitally. Due to the need for discrete values in digital signals, any subtleties contained in the continuous, information-rich curves of a soundwave, for example, will necessarily be simplified.

Remember how we said that analog signals have infinite resolution? Since digital signals do not, some information is necessarily lost when you convert from analog to digital. It's also necessary to interpolate some new information when converting from digital to analog. These conversions are typically done by components called analog-to-digital converters (ADCs) and digital-to-analog converters (DACs). The more precisely an ADC or DAC can act on the information, the less information is lost and the higher the quality of the output.
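To get a feel for how precision affects what's lost, here is a toy model of an ADC and DAC. It is an idealized sketch under our own assumptions (a 0-to-1-volt input range, perfectly even steps, and the function names `adc`, `dac`, and `worst_error`), and real converters behave less neatly.

```python
import math

def adc(voltage, bits, v_ref=1.0):
    """Map a voltage in [0, v_ref] to the nearest integer code."""
    max_code = 2 ** bits - 1
    clamped = min(max(voltage, 0.0), v_ref)
    return round(clamped / v_ref * max_code)

def dac(code, bits, v_ref=1.0):
    """Map an integer code back to a voltage."""
    max_code = 2 ** bits - 1
    return code / max_code * v_ref

def worst_error(bits, samples=1000):
    """Largest round-trip error over one cycle of a sine wave."""
    worst = 0.0
    for i in range(samples):
        v = 0.5 + 0.5 * math.sin(2 * math.pi * i / samples)
        worst = max(worst, abs(v - dac(adc(v, bits), bits)))
    return worst

# Compare the round-trip error at three different precisions.
errors = {bits: worst_error(bits) for bits in (4, 8, 16)}
for bits, err in errors.items():
    print(f"{bits:2d} bits: worst error {err:.6f} V")
```

Each extra bit doubles the number of available codes, so the worst-case error shrinks quickly as precision goes up. It never reaches zero, though, which is the loss the audiophiles are arguing about.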

Which is better?

For any given purpose and intent, it's possible that either analog or digital signals could be the better-suited alternative. We tend to think of digital technology (and therefore digital signals) as a better fit for our needs because it evolved from those needs, but that doesn't necessarily hold up to interrogation. An uncompressed digital signal can require more bandwidth than the analog signal it represents, which is one reason analog transmission persisted for so long in audio and video broadcasting.

When data naturally lends itself to discrete values (and most data does), it's far more efficient to encode, store, retrieve, and manipulate it digitally. A mere quarter-century ago, some facilities stored video data in great libraries of magnetic tape that were moved about robotically. Today, everything about that approach is thoroughly outdated, and no one would even consider a mechanical solution to video storage when far better digital solutions are at everyone's fingertips.

That doesn't mean we don't pay a price. Certainly, there are aesthetic costs to the digital revolution and some more concrete ones as well. For example, some claim that information retention isn't nearly as good with e-books as with actual paper books. That might keep books around for a while, but it's hard to believe we won't eventually make e-book readers good enough to erase that difference.