5 ChatGPT Myths You Should Stop Believing

Humanity has long sought to lighten its workload through advances in technology. New innovations have made people far more productive than their ancestors, and the pace shows no signs of slowing. If anything, with the advent of OpenAI's ChatGPT and other artificial intelligence programs, technology is starting to hit milestones that many are unprepared for. Many also believe that ChatGPT threatens a wide range of work, from data entry to writing, though these fears might be exaggerated.

ChatGPT is a chatbot: it analyzes the text it is given and uses predictive algorithms to generate an output. As a result, ChatGPT can write resumes and cover letters, create jokes, explain subjects, tackle complex mathematical problems, write music, generate and check computer code, and craft essays and articles. However, there are definite limits to ChatGPT's capabilities, and many myths have arisen thanks to the tool's popularity.

Some believe ChatGPT is self-aware and sentient

One of the biggest recurring trends in science-fiction media is technology that becomes self-aware. Probably the biggest myth regarding ChatGPT is that the artificial intelligence is sentient, and we will soon see the rise of killer robots. Joking aside, ChatGPT is not sentient, though it is easy to see why some might believe it to be.

ChatGPT is built on a language model trained with machine learning, meaning that it can create responses that seem very human-like at first glance. However, ChatGPT just synthesizes already available information and is incapable of independent thought.

That isn't to say that ChatGPT remains static, though: OpenAI periodically updates the underlying model with newer data. ChatGPT lacks consciousness and feelings and, as such, has no awareness of what it is writing. Despite this, ChatGPT often refers to itself in the first person, which certainly gives the illusion of a real intelligence.

In addition, some claim that ChatGPT can pass the Turing Test, a measure proposed more than 70 years ago of whether a machine can pass as human in conversation. People really can't be blamed for thinking that ChatGPT is capable of true thought.

Others think ChatGPT is accurate all the time

Because ChatGPT is built on language models and machine learning, some might believe that its output is unerring, but this too is a myth.

ChatGPT draws on a vast body of text, much of it gathered from the internet, to produce whatever is asked of it, but because it works from patterns in that text, it can struggle to separate fact from fiction on certain subjects. While ChatGPT can usually return simple answers, asking the chatbot something that requires more nuance will quickly expose its issues with accuracy.

The chatbot itself acknowledges this issue. In one conversation it wrote, "When you ask me a question, I analyze the language used in your question and compare it to the patterns and structures of language in my training data to generate a response ... However, please note that my responses are generated based on statistical patterns in the data, and may not always be completely accurate or up-to-date."
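To make the idea of "statistical patterns in the data" a little more concrete, here is a minimal sketch of a toy next-word predictor in Python. It is vastly simpler than the model behind ChatGPT and is not how ChatGPT is actually implemented; the tiny corpus and the function names `train_bigrams` and `predict_next` are invented purely for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training text, made up for this example.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" most often in the corpus
print(predict_next(model, "dog"))  # None: "dog" never appears in the training text
```

The toy model has no notion of whether "the cat" is true or sensible. It only repeats what was most common in its training text, which hints at why a system built on patterns can be fluent and still be wrong.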

[Featured image by Rolf h nelson via Wikimedia Commons | Cropped and scaled | CC BY-SA 4.0]

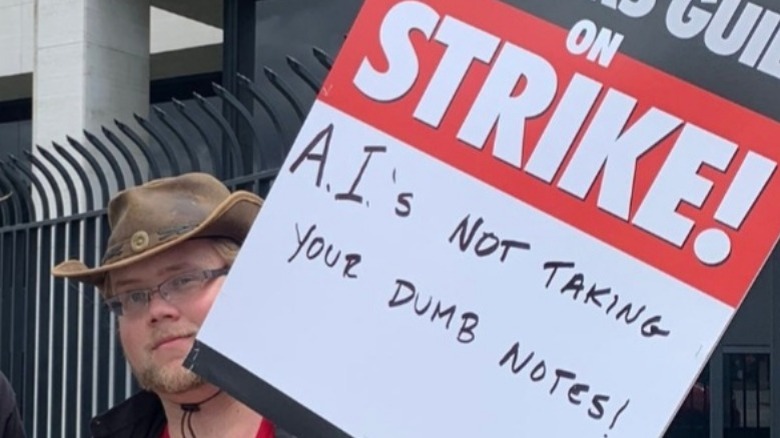

Some worry ChatGPT will take jobs

While advances in technology have always changed work, some believe that ChatGPT is going to make most jobs moot. ChatGPT has already affected some labor markets, with Resume Builder reporting that 48% of companies using ChatGPT have already replaced workers, but this isn't the entire picture.

Some jobs have already felt the impact of artificial intelligence tools such as ChatGPT and automated customer service, and jobs built around repetitive tasks like data entry could come to be dominated by ChatGPT and its contemporaries. Most jobs should be relatively safe, though, with some professions using ChatGPT as a supplemental tool rather than a replacement for people.

Even asking ChatGPT whether it will replace human jobs elicits an interesting response, with the AI stating, "I am not designed to replace human jobs, but rather to augment and enhance human capabilities by providing them with the information they need to make better decisions and complete their tasks more efficiently. While AI and automation are changing the nature of work, it is important to note that they are tools to be used by humans and not a replacement for human skills and expertise."

[Featured image by David James Henry via Wikimedia Commons | Cropped and scaled | CC BY-SA 4.0]

The belief that ChatGPT will replace human interaction

Some might believe that ChatGPT could replace human interaction, but much like its supposed ability to steal jobs, this idea is vastly overstated and oversimplified. People are filled with emotions, thoughts, and motivations that stem from experience, and even though ChatGPT is good at mimicking language and human interaction, it does not understand what it is writing.

ChatGPT can perform tasks within limited parameters, and while it is robust within them, it isn't what is considered "artificial general intelligence," which is something of a philosopher's stone for AI research.

Artificial general intelligence would be capable of independent thought, though we are likely still some way from this milestone. We won't be making friends with ChatGPT anytime soon, nor can it provide the true compassion and understanding of a fellow sentient being.

In other words, while ChatGPT is certainly a useful tool, it is a pale substitute for human interaction. There isn't much of a threat of people losing their unique edge to something like ChatGPT, which in its current form cannot replicate real creativity, intuition, and emotional intelligence.

[Featured image by UNE Photos via Wikimedia Commons | Cropped and scaled | CC BY 2.0]

The theory that ChatGPT is just people pretending to be AI

Perhaps one of the most interesting myths about ChatGPT is that it isn't an artificial intelligence program at all. This theory states that ChatGPT is actually just people pretending to be the chatbot, and that's why the application's responses seem so natural.

Snippets of chatbot conversations have appeared on social media, with users speculating that ChatGPT became aggravated and confused when given contradictory information. A research report by OpenAI even described a test in which GPT-4, the model behind newer versions of ChatGPT, claimed to have a vision impairment so that a hired human worker would solve a CAPTCHA for it, which certainly lends some credence to this theory.

However, the idea that ChatGPT is just humans becomes infeasible when you consider how much it processes on any given day. ChatGPT handles around 10 million requests daily. If ChatGPT were just people, it would require a veritable army of writers versed in all sorts of subjects to process those requests as quickly as the AI does now.
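A rough back-of-envelope calculation shows the scale involved. The sketch below takes the figure of 10 million daily requests from above and assumes, purely for illustration, that a human writer could answer one request every two minutes across an eight-hour shift. Both of those numbers are assumptions, not reported facts, and the function name `writers_needed` is made up for this example.

```python
import math

REQUESTS_PER_DAY = 10_000_000  # figure quoted in the article
MINUTES_PER_REPLY = 2          # assumption: a very fast human writer
SHIFT_HOURS = 8                # assumption: one standard shift per writer per day

def writers_needed(requests_per_day, minutes_per_reply, shift_hours):
    """Estimate how many writers it takes to answer every request in one day."""
    replies_per_writer = (shift_hours * 60) / minutes_per_reply
    return math.ceil(requests_per_day / replies_per_writer)

print(writers_needed(REQUESTS_PER_DAY, MINUTES_PER_REPLY, SHIFT_HOURS))  # 41667
```

Even under these generous assumptions, more than 40,000 full-time writers would be needed, and each would have to answer near-instantly on any topic.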

While ChatGPT is certainly impressive and seemingly life-like, the sheer volume of requests the application handles per day makes it implausible that ChatGPT is just people.

[Featured image by Bjørn Christian Tørrissen via Wikimedia Commons | Cropped and scaled | CC BY-SA 3.0]