Viral Video Shows U.S. Navy Weapon System Targeting A Civilian Plane, Here's What Happened

In a video that surfaced recently and went viral on Twitter, a large-barrel gun onboard a U.S. Navy ship can be seen aiming at what appears to be a Boeing 737 aircraft. The sight can be unnerving for anyone unfamiliar with anti-aircraft systems. But instead of sounding alarmed, the sailors nearby can be heard guffawing and screaming, "No!" like amused villains. There's a reason why.

intrusive thoughts pic.twitter.com/8mZfOwXESD

— ToastyNarwhals (@NarwhalsToasty) May 17, 2023

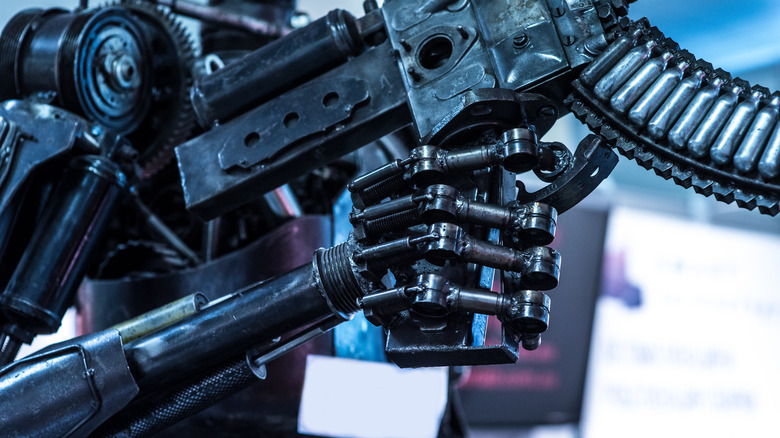

Seen in the video is an MK-15 Phalanx Close-In Weapon System (CIWS), fondly known as "sea-wiz," deployed on what seems to be a U.S. Navy Harpers Ferry-class or Whidbey Island-class dock landing ship. It serves as a last line of defense, and can rain 20mm shells on unknown aircraft, missiles, and small boats that have penetrated other layers of defense.

The MK-15 Phalanx CIWS is programmed to track objects flying through the air using a simple radar system. Since the speed of an anti-ship missile warrants a quick reaction, the Phalanx is designed to be able to respond automatically, without instructions from a human operator. It features several operating modes, including a fully manual mode, a semi-automatic mode that requires approval to fire from a human handler, and a fully automatic mode.

It seems the Phalanx was operating in semi-automatic mode when it was captured targeting what appears to be a commercial aircraft.

Safe, but not completely harmless

According to the U.S. Navy, the Phalanx can be fitted with additional trackers and sensors to counter asymmetric warfare threats such as helicopters and drones. It was first installed on the USS Coral Sea in 1980, and has undergone several iterations.

Despite its apparent sophistication, the Phalanx has not had a spotless record. In 1991, during the first Gulf War, a Phalanx onboard the USS Jarrett was in automatic mode when it fired on the friendly USS Missouri. The Phalanx fired in response to chaff, a countermeasure the Missouri had launched to mislead an Iraqi "Silkworm" guided missile.

Thankfully, the crew onboard the USS Missouri did not suffer any serious injuries. A few years later, a Phalanx on the Japanese destroyer Yugiri brought down a U.S. Navy A-6E Intruder aircraft during a maritime exercise.

Interestingly, a Phalanx locking onto a passing civilian plane is not uncommon, and the system has been seen tracking such aircraft on many occasions. In fact, it is a common sight on TikTok, where similar videos of the Phalanx tracking seemingly harmless aircraft have drawn hundreds of thousands of views. But without proper context, such videos can cause panic, especially when actual incidents of anti-aircraft weapons bringing down passenger aircraft and taking hundreds of lives are still fresh in our memories.

Debates on autonomous weapons

More importantly, the recent viral Phalanx video has stirred up conversations about autonomous weapons, and especially the dangers of artificial intelligence, even though the Phalanx does not use AI as we know it. Generative AI tools like ChatGPT have sparked an intense debate around the uses and risks of AI in society, with numerous researchers and technology experts repeatedly raising alarms about the perils of machines making their own decisions.

The most recent example is the flurry of open letters against AI, comparing its risks to life-endangering scenarios such as nuclear war or pandemics. Among the notable signatories are OpenAI CEO Sam Altman, Microsoft CTO Kevin Scott, and DeepMind CEO and co-founder Demis Hassabis, who cosigned the statement calling for standard guidelines to regulate AI so it cannot be used to harm humans.

Notably, discussions at the United Nations have for almost a decade aimed at legally limiting the use of autonomous weapons. However, global powers, including the U.S., have opposed binding treaties and have instead pushed for voluntary self-restraint for the ethical and responsible use of AI through multilateral agreements.

With or without such agreements, it becomes imperative for governments to intervene and educate the public to prevent fear-mongering about autonomous weapons of the kind the viral video above could incite.