3 Ways To Tell If An Image Was AI-Generated Or If It's The Real Deal

There's a lot of controversy surrounding AI-generated imagery. Such technology is as fascinating as it is frightening, and it may also be a harbinger of real issues for humanity. Internet users, through the likes of deepfakes, can spread misinformation that can be dangerous not only on a personal level but on an international or global one, too. Even innocent mistakes can lead to widespread misinformation.

For example, in March 2022, a conspiracy theory emerged that Chris Rock had been wearing an eye pad, supposedly proving that the encounter between him and Will Smith at the Oscars had been staged. The person who shared the original image said it had been upscaled to 8K, which indicates that the AI-powered upscaler had added details to Rock's face that weren't actually there.

Meanwhile, software like DALL-E 2 needs only a few words as a prompt to generate an image. It's both very powerful and easily accessible. It's important, then, that we try to identify the differences between real and AI-generated imagery. As advanced as the technology has become, AI-generated images still tend to have certain telltale flaws, and you can often spot them by knowing what to look for.

Try a GAN detector

There's no guaranteed way to always determine at a glance that an image has been AI-generated. Certain anomalies can be big giveaways, but they aren't foolproof. A human artist's image can be every bit as asymmetrical and illogical — and have just as questionable-looking hands — as one that AI has generated.

This isn't to say, though, that technology hasn't been created in turn to try to answer this question. Many AI images, though not all, are created by means of Generative Adversarial Networks (GANs). In the Université de Montréal paper "Generative Adversarial Nets," Ian J. Goodfellow and his co-authors both introduced and explained the term. "The generative model can be thought of as analogous to a team of counterfeiters, trying to produce fake currency and use it without detection, while the discriminative model is analogous to the police, trying to detect the counterfeit currency," Goodfellow wrote. "Both teams [attempt] to improve their methods until the counterfeits are indistinguishable from the genuine articles."
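For readers who like to see the idea in code, the counterfeiter-versus-police contest can be sketched as two competing scores. This is a toy illustration in Python, not the paper's implementation: the function names are made up for this example, the scores stand in for a detector's "how real does this look" output between 0 and 1, and the counterfeiter's score uses the common "non-saturating" variant rather than the exact form in the quote.

```python
import math

def discriminator_loss(real_scores, fake_scores):
    """Loss for the "police": low when real images score near 1 and fakes near 0.

    Scores are assumed to be probabilities strictly between 0 and 1.
    """
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Loss for the "counterfeiters": low when their fakes are scored as real."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)

# A sharp-eyed detector: real images score high, fakes score low.
sharp = discriminator_loss([0.9, 0.8], [0.1, 0.2])
# A fooled detector: it can only guess 50/50 on everything.
fooled = discriminator_loss([0.5, 0.5], [0.5, 0.5])
print(sharp < fooled)  # the sharp detector has the lower loss
```

Training pushes the two losses against each other: every improvement in the detector raises the generator's loss, and vice versa, which is the arms race the quote describes.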

A GAN detector, then, is a means of determining whether such a model (and, by extension, AI generation) has been used to create an image. Mayachitra offers a demo version of such a detector, though its verdicts are not conclusive. Users can query the origin of a small selection of provided images or upload their own, but the results appear limited to "maybe GAN generated," "probably GAN generated" and "probably not GAN generated." Another option comes from Hive, which similarly offers a demo of its tool.

Try to recognize common AI mistakes

Advanced artificial intelligence can understand a dizzying range of commands and produce a similarly enormous range of images. It can certainly be convincing. One thing it perhaps cannot do is replicate the snowflake-esque smaller elements that make everyone's appearance unique. As the AI takes the "same" approach to each command given, the slip-ups that occur in the resulting images are subtle yet common. Patterns can become quite easy to detect when you know what you're looking for.

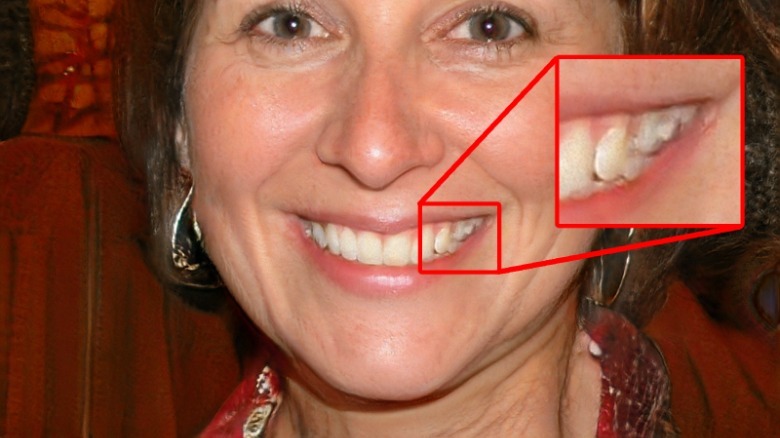

As Which Face Is Real? reports, AI-generated images of people wearing glasses can show frames that don't match from one side to the other, or a frame or arm that cuts out partway across the face. Symmetrical elements are difficult to replicate in general: patterns on clothing, buttons, and collars may not match from one side to the other. By the same token, unique formations such as exposed teeth (as seen in the AI-generated example image) or the complex texture of hair strands can look noticeably fake, like an otherwise-excellent pencil drawing with an unfortunate smudge.

With GANs offering the capacity for dating websites to be populated by some people who aren't actually real, it's worth looking a little closer at that spectacle-wearer's profile picture.
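The left-versus-right mismatch described above can also be put into rough numbers. The following is a minimal Python sketch, assuming you have already cropped a region that ought to be symmetrical (a pair of glasses or a collar, say) and converted it to a grid of grayscale values from 0 to 255. The function name `asymmetry_score` is invented for this example, and the result is only a crude cue, since real faces and clothes are never perfectly mirrored either.

```python
def asymmetry_score(pixels):
    """Mean absolute difference between each pixel and its mirror-image partner.

    `pixels` is a grayscale image as a list of equal-length rows (values 0-255).
    0.0 means the left and right sides mirror each other exactly; larger
    values mean the two sides differ more.
    """
    total = 0
    count = 0
    for row in pixels:
        width = len(row)
        # Compare the left half with the right half, reflected across the centre.
        for x in range(width // 2):
            total += abs(row[x] - row[width - 1 - x])
            count += 1
    return total / count if count else 0.0

matching_frames = [[10, 20, 20, 10],
                   [5, 7, 7, 5]]
mismatched_frames = [[10, 20, 90, 10],
                     [5, 7, 7, 5]]
print(asymmetry_score(matching_frames))    # 0.0
print(asymmetry_score(mismatched_frames))  # 17.5
```

A high score on something that should be a matched pair is a reason to zoom in and look more closely, not proof of anything on its own.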

Note aspects of the image that don't make sense

With a lot of AI-generated images being human faces (for website profile images, for instance), it's important to look beyond just said face. Even when the face itself is generated seemingly flawlessly, AI can become so committed to getting this focal point correct that it neglects the background. The background can become blurred or include odd patterns. Disparate elements, such as winter snow and flowers, may also appear side by side. Colors can also bleed into the face from the background: the slate gray of a building can also appear in a patch of the subject's hair, for instance.
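A smeared background is one of the few cues here that is easy to measure. One widely used sharpness measure is the variance of the Laplacian, which reacts strongly to crisp edges and barely at all to smooth, blurry patches. The sketch below is an illustration in plain Python, again assuming a cropped grayscale grid of values from 0 to 255; the function name is made up for this example. Keep in mind that ordinary portrait photos often have blurred backgrounds too, so a low score is a prompt to look closer rather than a verdict.

```python
def laplacian_variance(pixels):
    """Variance of a 4-neighbour Laplacian over the interior pixels.

    `pixels` is a grayscale image as a list of equal-length rows.
    Values near 0 suggest a flat or blurred region; large values suggest
    sharp edges and fine detail.
    """
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # How much this pixel differs from its four direct neighbours.
            lap = (pixels[y - 1][x] + pixels[y + 1][x]
                   + pixels[y][x - 1] + pixels[y][x + 1]
                   - 4 * pixels[y][x])
            responses.append(lap)
    if not responses:
        return 0.0
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)

smooth_patch = [[50] * 5 for _ in range(5)]
detailed_patch = [[0 if (x + y) % 2 == 0 else 255 for x in range(5)]
                  for y in range(5)]
print(laplacian_variance(smooth_patch))                                    # 0.0
print(laplacian_variance(detailed_patch) > laplacian_variance(smooth_patch))  # True
```

Comparing the score for the face with the score for a patch of background gives a quick sense of whether the two were rendered with very different levels of care.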

In December 2022, CQUniversity's Brendan Murphy described AI's approach to generating images to ABC News as "a funny mixture of really getting it right and not always understanding it." The technology as it stands, then, can do an admirable job of incorporating the various smaller elements that make up a face or image, but determining whether they always fit together may be beyond its capacities. At least, for now.