Tech Inventions That Changed The Health Industry Forever

Medical technology seems like it should be all sterilized stainless steel, whirring centrifuges, and miraculous machines that help us see, hear, and feel better for longer than our parents imagined. But the reality is often more like a smear of penicillin in a petri dish that changes everything.

Inventiveness and luck alone aren't the secret sauce for changing the healthcare industry, either. These game-changers often improve aspects of treatment that aren't always part of the original goal. Innovative healthcare tech can be game-changing if it improves the accessibility of care, the speed with which care is delivered, the accuracy of diagnostics, the degree of personalization, and the cost-effectiveness of the whole endeavor. You might not think of these factors as strictly healthcare pursuits, but they do improve and occasionally revolutionize healthcare outcomes.

Of course, healthcare itself isn't always what improves and lengthens lives. An Aperion study credits sunscreen with saving twice as many lives as CPR, and synthetic fertilizers with saving more lives than just about anything else by simply enabling agriculture to produce more food. We will focus on the health industry here, but the biggest potential sometimes lies down other avenues.

Medical imaging: X-rays and computed tomography

Medical imaging is not, of course, a single technology, but an umbrella term for a bunch of different methods of getting a handle on what's going on within our bodies. One example might be a combination of these methods, like the Explorer total-body scanner, which performs both PET and CT scans. Without these advancements, we'd be devoting a lot more resources to palliative care. The tech in question includes X-rays, CT scans, MRIs, and ultrasounds, and each has changed the diagnostic landscape in its own way.

But X-rays themselves aren't just a diagnostic tool. They are used to guide surgeons, monitor the progress of therapies, and inform treatment strategies involving medical devices, cancer treatments, and blockages of various sorts. In 1896, the then-hyphenated New-York Times mocked Wilhelm Conrad Röntgen's medical application of X-ray imaging as an "alleged discovery of how to photograph the invisible." Five years later, Röntgen won the Nobel Prize in Physics. More than a century later, X-rays have replaced invasive surgeries and guesswork as a core diagnostic tool for doctors at every level.

Less a new imaging technology than a brilliant implementation of existing methods, computed tomography (CT) uses cross-sectional X-ray images acquired from various angles and computer algorithms to rapidly create a navigable, three-dimensional image of small or large parts of the body. Because they're based on X-rays, CT scans are better at imaging bones than soft tissues. CT scans provide many of the same benefits as other medical imaging methods, enhanced for many purposes by their speed and superior imaging of bones.

Medical imaging: magnetic resonance imaging and sonography

A Nobel Prize was awarded in 1952 for work that would become the basis for magnetic resonance imaging (MRI), a technology that uses magnetic fields and non-ionizing radio frequency radiation to acquire stacked images ("slices") that offer superior soft-tissue contrast to other technologies. The ability to differentiate among many soft tissues and liquids, including distinguishing cancerous from non-cancerous cells, has been a boon to medical diagnostics.

Diagnostic medical sonography (ultrasound) is an imaging technique that can be employed to examine and monitor unborn children or regions of the body that might be more sensitive to radiation, such as the pelvic area. Ultrasound uses the echoes of high-frequency sound waves to image areas of the body without invasive surgeries or methods that require the use of radiation. Ultrasound devices range from the sort of large machines you'll find in hospitals to tiny modern devices like the Ultrasound Sticker. Ultrasound is a critically important diagnostic tool. Its reduced risk makes it practical for creating three-dimensional images, including 3D time-series visualizations that can show movement and changes in tissues over time.

Microscopy and the germ theory of disease

Almost no invention has had more of an impact on our understanding of (and ability to combat) disease than the microscope. This is true for a number of reasons, but the primary one is that it facilitated the germ theory of disease. Germ theory is the idea, commonplace today, that specific microorganisms are implicated in specific illnesses. Without germ theory, most would have continued to subscribe to miasma theory, the longstanding notion that disease was caused by polluted air and was not transferred from person to person.

Of course, progress in healthcare and even in the treatment of specific diseases existed while miasma was the presumed culprit, and a lot of important work got done in areas related to sanitation and socioeconomic factors. But anything real that can be proven with a bad theory can be proven even more effectively with a more scientifically sound one, and germ theory established some important ideas in medical science. Obviously, any thoroughgoing treatment depends on accurately identifying a cause, which one could do armed with a microscope and germ theory. And the idea of person-to-person transmission, denied by miasma theory, gave meaning to preventive medicine. Prior to the early findings that led to germ theory's acceptance, the spread of contagions was often unhindered by doctors, and occasionally the doctors were a vector for spreading them.

And germ theory was just getting started. It revealed the need for sterile surgical procedures and the identity of specific disease-causing microbes, enabling the development of targeted vaccines and other pharmaceuticals. The microscope, seeing more of a smaller area, has changed our views of almost everything.

Blood transfusion

Sometimes we develop brilliant technologies more or less by accident, or for entirely the wrong reasons (think leeches). Aperion estimates that blood transfusions have saved a billion lives, but their earliest practitioners weren't even clear about why a transfusion would sometimes work... or would fail disastrously. Fewer than 200 years elapsed between the discovery of blood circulation and the first successful human-to-human transfusion, which was performed by an American, the prophetically named Philip Syng Physick.

By 1867, Joseph Lister had applied his knowledge of germ theory to develop antiseptics for controlling infections acquired during transfusions. Within another 100 years, blood banks had become common, and fractionation into albumin, gamma globulin, and fibrinogen had made new plasma-derived hemotherapies possible. Today, there are at least 23 therapeutic plasma products. Meanwhile, blood typing was discovered and refined, and transfusion as a medical therapy was common by the start of World War II.

The history of blood-related therapies is a stroll through our woefully incomplete understanding of the human body. Around the time Philip Syng Physick was transfusing blood on the sly, George Washington was being killed by bloodletting, a 3,000-year-old practice that made little sense but was so broadly accepted that it was used on a former president without misgiving. Bloodletting was thought to help keep the body's four humors (blood, phlegm, yellow bile, and black bile) in balance to prevent or mitigate disease. Aside from a few specific applications of leeches, humoral medicine didn't contribute a lot to modern medicine. It did, at least, acknowledge that illness had naturalistic (rather than supernatural) causes, and in that narrow sense paved the way for science to turn secret experimental transfusion into a billion saved lives in two centuries.

Gene sequencing

Faith Lagay wrote in the AMA Journal of Ethics that the "history of medical science is the story of discovering ever more localized cause of illness," just as germ theory narrowed the focus from environmental factors to specific microbes. The understanding of genetics, and the application of that understanding, zoomed down to the molecular level. In time, genomics would upend medical diagnostics so thoroughly that earlier methods now seem like throwing a dart from 6,000 miles away. Gene sequencing revolutionized precision in medicine and changed everything from prenatal diagnostics to tracking a world of information within The Cancer Genome Atlas (TCGA).

Germ theory caused medicine to home in on disease and individuals, but the focus on individual genomics has managed the trick of narrowing our view (to genes) while broadening our vision (to cures). Human genome sequencing, a process by which human genes were mapped to their physical and functional outcomes, was first "completed" in 2003.

Today there are myriad important applications of the human gene sequence. We can better predict many diseases and disorders for certain individuals, and we better understand the effects. We evaluate the likely impact of substances on individuals (pharmacogenomics/toxicogenomics), and we study the interactions of different species' genomes in metagenomics. We create new fuels, solve crimes, and improve crops, all via genomics. We have cataloged the genetics and mutations of more than 20,000 samples of 33 cancers, leading to better treatments and preventive efforts, and conceivably leading to cures in the foreseeable future.

Where germ theory pushed us away from environmental circumstances contributing (but not incidental) to disease, genomics has pulled us back toward an approach to medicine scaled beyond the individual treatment room.

Anesthesia

You might not even think of anesthesia as a technology. It is, in fact, a handful of technologies that don't do much on their own except help with other things. And that makes anesthesia a candidate for the most important healthcare tech of all — because without its four forms (general anesthesia, twilight consciousness, local anesthesia, and regional anesthesia), many common (and life-saving) medical procedures would be impractical or impossible altogether.

The importance of anesthesia in modern surgery is highlighted by the fact that, in many cases, the anesthesia presents more risk to the patient than the surgery itself. Without it, of course, the surgery is out of the question in most cases. The first modern general anesthesia was an application of diethyl ether by Dr. Crawford W. Long in 1841, though sources and authorities differ. You'll see 1842 as the date, or perhaps William Morton (1846) or Henry Hickman (1824) as the pioneer who first used ether as a surgical anesthetic. Whoever is to be credited, they have saved a lot of lives and prevented an unbelievable amount of pain and suffering in the years since.

Of course, general anesthesia isn't the only form. Local anesthesia in various forms had always been mostly ineffective until the first use of coca leaves in the mid-1800s, starting a revolution in local anesthetics that lasted until the 1970s. It began with the isolation of cocaine for use in blocking localized pain, but the drug's cardiac toxicity and potential for addiction prompted the derivation of new amino ester and amino amide local anesthetics such as tropocaine, benzocaine, procaine, and lidocaine.

All anesthesia has improved dramatically, even in recent decades. According to anesthesiologist Christopher Troianos, MD, speaking with the Cleveland Clinic, there is currently about a 1 in 200,000 chance of anesthesia-induced death during surgical general anesthesia.

Vaccines

Edward Jenner's smallpox vaccine (technically a cowpox vaccine that was effective against smallpox) lent its name to the current usage of "vaccine" to apply to all immunizations. Here we are extending that generalization to apply the term to modern variations with little in common with early smallpox, rabies, and other vaccines. These newer variants include the mRNA, viral vector, and protein subunit immunizations used against COVID-19, all referred to as vaccines even though they do not contain the virus itself. And some are not necessarily new strategies. The first protein subunit vaccine (against hepatitis B) was approved over 30 years ago.

Vaccines are among the five technologies in Aperion's list of medical inventions that have saved over one billion lives, a feat accomplished in the past 200 years or so. That represents four to five times as many lives as were lost in all the wars and conflicts of the 20th century.

Computerization

It would be difficult to overstate the importance of digital medical technology (that is, of computers in many forms) in conquering the information bottlenecks and insufficiencies that long hampered medical advancements. Attention first turned to the potential medical uses of computers in the 1960s. As computers' capabilities were expanded and interfaces were refined in the 1980s and beyond, the original dreams and many more slowly began to be realized. But the slowness of this adoption was painful, as noted in the reports of the President's Information Technology Advisory Committee (PITAC), among others, beginning in 1991. Security and interoperability issues and the lack of standards for electronic prescriptions, imaging, messaging, and reporting are gradually being tamed, and the healthcare industry is reaping rewards in the form of better communications, improved medication monitoring, a higher quality of care, reduction of errors, and more protection of privacy.

The parts of this information technology that patients see, such as digital charts/medical records and telehealth/mHealth practices, function well enough to handle the enormous complexity of the industry's underlying systems and their integrations with one another. Behind the scenes, though, the industry has famously clung to outdated fax technology because of I.T. failings in interoperability and communications.

For all of those challenges, though, it's difficult to imagine a modern hospital functioning without the computer tech commonly used for readily available patient records, order entry, billing, and much more. And even with those in place, there's likely to be room for improvement and growth. A Johns Hopkins study published in BMJ (formerly British Medical Journal) posits that medical errors are the third leading cause of death in the U.S. — a situation ripe for I.T. solutions.

NeuroRestore's neurological implantation and other potential game changers

The future of medicine lies mostly in the imaginations of people who have not been born yet, and who might not have been born at all but for the imaginations of earlier generations. But we can guess some things about the technologies that will make a dramatic impact in the near future.

Artificial intelligence algorithms for processing and interpreting medical images are already outperforming doctors in terms of time and accuracy. And the potential for AI use in healthcare extends much further. Workflow refinements, error reduction, and enabling patients to meaningfully participate in their own care are all key areas in which AI can re-revolutionize parts of an industry already revitalized by information technology.

Similarly, we're already seeing inroads into the health industry's use of augmented reality, with bigger gains expected in the next decade in AR-assisted robotic surgery, wound care, physical therapy/rehabilitation, and many other areas. Immersive medical training can be conducted via AR-enhanced simulations, and virtual surgeries will help surgeons prepare for and better execute the real thing when the time comes.

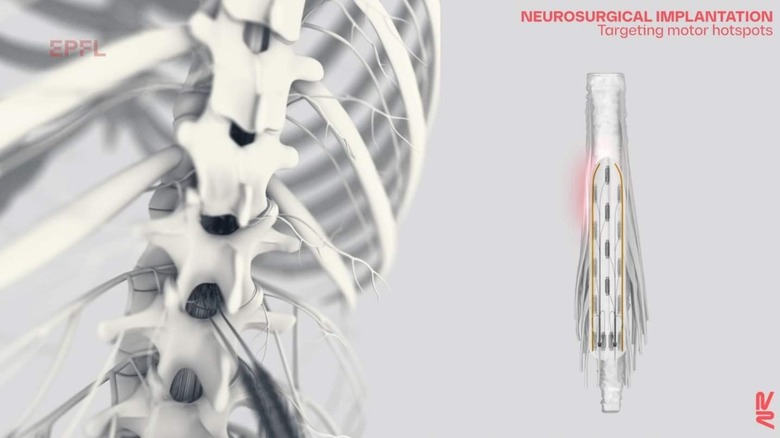

Other promising areas include stem cell therapies, targeted cancer therapies, and neural implants. NeuroRestore's implant technology, currently in development, uses AI to assist people with debilitating neurologic traumas. Three patients with complete spinal cord injuries have been studied while using NeuroRestore's technology, and each of them showed remarkable progress during the first day, including standing and walking. After four months, one of the three was able to stand for two hours and walk one kilometer without stopping. These are the sorts of technologies that will put entirely new parameters on future lists of important health industry tech.