The Terrifying Truth About The Evolution Of Deep Fakes

In 1917, not long after the introduction of personal cameras, the world was rocked by the publication of photographs purported to show young children frolicking with fairies. The Cottingley Fairies, as they came to be known, were deemed legitimate by a number of experts and were popularized by none other than Arthur Conan Doyle. Decades later, the children in the photos, now adults, admitted they were fakes made with paper cutouts. Whether for art, fraud, or a fanciful prank that got out of hand, we've been doctoring images to paint a false picture of reality for almost as long as we've been able to take them.

Our ability and tendency to create imagery from our imagination has lent itself to thriving television and movie industries that have used practical effects, camera trickery, and post-production modifications. As imaging technology became increasingly digital, it opened up a new arena for falsifying images, and the tools for doing so became increasingly available to the wider public. As the ability to create false images spread, society was bound for a little heartache.

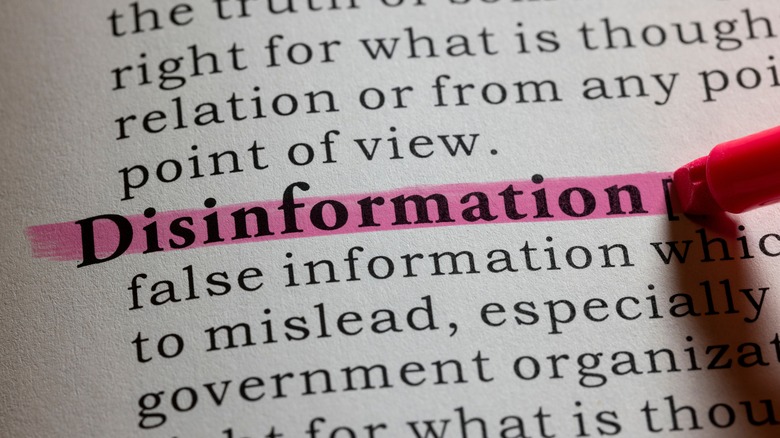

Now, through the use of sophisticated yet readily available software, nearly anyone with the time and talent can create fabricated images or videos of almost anyone else. This has some concerned that someday soon — maybe even already — we won't be able to trust what we see and hear online.

Defining terms: what is a deep fake?

The term "deep fake" is becoming a sort of catchall for any manipulated imagery, but that's not quite right. Two criteria must be met for something to count as a deep fake: it has to be video, and AI must have been involved in making it. The "deep" in "deep fake" refers to the deep learning algorithms used to produce the modified video.

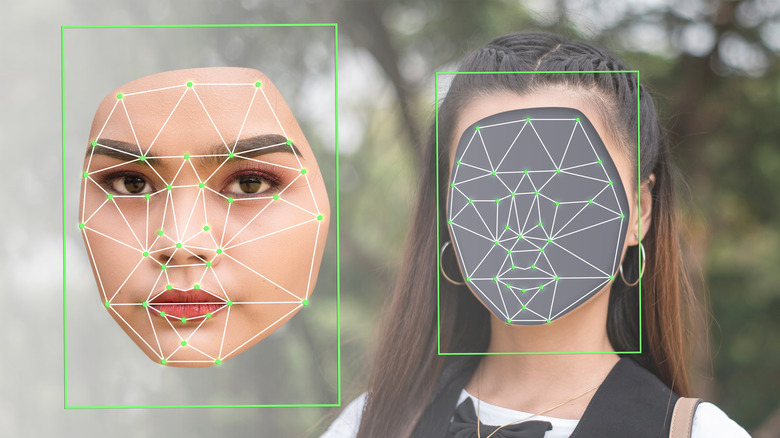

In essence, a deep fake is any video in which artificial intelligence (sometimes more than one model) has been used to alter or generate convincing footage of things that never happened (via Business Insider). One could hypothetically train an AI to recognize chairs and replace them with beanbags; the only limits are the creator's imagination, intent, and the capability of their algorithms. While the implications are wide-ranging, the technology is most often applied to faces and generally targets well-known individuals like celebrities or politicians.

From a certain point of view, many of us interact with and create simple deep fakes every day. As explained by Forbes, the filters on your Snapchat or TikTok utilize some of the same facial recognition and artificial intelligence to turn you into a dog or add freckles and makeup to your face.

The first GAN (Generative Adversarial Network)

There are all kinds of artificial intelligence, each specialized for its particular type of work. Some are good at recognizing speech and returning relevant responses. Others are good at perceiving their environment and navigating through it. These kinds of AI lean on existing human knowledge or creations to replicate the behavior of people to a reasonable degree. The next logical step was building AI capable of making new things.

In 2014, Ian Goodfellow and co-authors proposed a new framework for training generative AI. Their system, called Generative Adversarial Networks, or GANs, was published on the arXiv preprint server. The premise is relatively intuitive: it works by pitting two neural networks against one another. The first, the generator, produces whatever kind of data it is trained to make. It could be letters and numbers, or it could be human faces. The second, the discriminator, examines each sample and decides whether it is a real image taken from the training data or a forgery produced by the generator.
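For readers who want the math, the 2014 paper frames this contest as a two-player minimax game. Writing D(x) for the discriminator's estimated probability that a sample x is real, and G(z) for the generator's output when fed random noise z, the objective is:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes V up by scoring real samples near 1 and forgeries near 0; the generator pushes it down by getting D(G(z)) closer to 1. The paper shows that if the generator's output distribution exactly matches the real data, the best the discriminator can do is output 1/2 everywhere, at which point V = log(1/2) + log(1/2) = -log 4.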

If the discriminator correctly spots the generator's creation, the generator goes back to the drawing board: it adjusts based on that feedback and tries again, while the discriminator keeps learning too. The adversarial nature of the paired models creates an arms race that constantly drives the generator toward more convincing forgeries. The ability to train on real images and then generate convincing facsimiles would prove invaluable as the deep fake emerged.
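To make the back-and-forth concrete, here is a deliberately tiny sketch in pure Python. It is not Goodfellow's code and is nothing like a face-generating network; every detail is an illustrative assumption. The "real data" are just numbers drawn around 4.0, the generator is the one-line function G(z) = a*z + b, and the discriminator is a single logistic unit D(x) = sigmoid(w*x + c). The hypothetical helpers `sigmoid`, `gan_value`, and `train_toy_gan` alternate the two updates: the discriminator is nudged to score real samples higher and forgeries lower, then the generator is nudged toward outputs the discriminator scores as real (maximizing log D(G(z)), the practical variant the 2014 paper recommends).

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def gan_value(d_real, d_fake):
    """Sample estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator scores on real samples; d_fake: scores on forgeries.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - q) for q in d_fake) / len(d_fake)
    return real_term + fake_term


def train_toy_gan(steps=2000, batch=64, lr=0.01, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data.

    Hypothetical setup: real data ~ Normal(4, 1), generator G(z) = a*z + b,
    discriminator D(x) = sigmoid(w*x + c). Returns the final (a, b, w, c).
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * zi + b for zi in z]

        # Discriminator step: gradient ascent on V, i.e. score real
        # samples higher and forgeries lower.
        gw = gc = 0.0
        for x in real:
            p = sigmoid(w * x + c)  # d/ds log D = 1 - D
            gw += (1.0 - p) * x
            gc += 1.0 - p
        for x in fake:
            q = sigmoid(w * x + c)  # d/ds log(1 - D) = -D
            gw -= q * x
            gc -= q
        w += lr * gw / batch
        c += lr * gc / batch

        # Generator step: ascend log D(G(z)) so forgeries look "real"
        # to the freshly updated discriminator.
        ga = gb = 0.0
        for zi in z:
            q = sigmoid(w * (a * zi + b) + c)
            ga += (1.0 - q) * w * zi
            gb += (1.0 - q) * w
        a += lr * ga / batch
        b += lr * gb / batch
    return a, b, w, c


a, b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (real data centered on 4.0)")
```

In this toy, the generator's offset b gets pulled toward the real data's center, though with a discriminator this simple it may overshoot and oscillate rather than settle neatly. Because a one-unit discriminator can only compare averages, it also gives the generator little reason to keep its outputs varied. Both quirks are small-scale versions of why real GANs have a reputation for being finicky to train.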

De-aging and face swapping in movies

Around the time Goodfellow was inventing the first GANs, the folks at Lucasfilm were hard at work on the first "Star Wars" movie not directly related to the Skywalkers. When "Rogue One" hit theaters, fans were reintroduced to two well-known characters from the franchise's earliest installment. Both Grand Moff Tarkin and Princess Leia make an on-screen return, but neither of the original actors (Peter Cushing and Carrie Fisher, respectively) was able to reprise their role.

Instead, filmmakers relied on live performances from other actors and post-production movie magic to make it appear as though a young Peter Cushing or Carrie Fisher were in front of the camera (via SlashFilm). The process began by choosing the right actor, someone with similar facial features and an ability to deliver lines in the right cadence.

From there, filmmakers used motion capture technology, which tracks dots placed on the performer's face to record its movements so they can be replaced after the fact. Finally, they used the motion capture data and special effects to map the desired face onto the performance. While the concept of deep fakes hadn't yet entered the public consciousness when "Rogue One" came out, it gave many viewers their first peek at the technological shift on the horizon.

Origin of the term deep fake

In modern parlance, the term "deep fake" has taken on a life of its own, and many of us use it without ever considering where it came from. There are really two answers to that question. The first is simple and etymological: the name is a portmanteau, taking "deep" from the deep learning algorithms involved and "fake" for the obvious reason (via Grammarist). Historically, it has another origin.

In 2017, a Reddit user with the handle Deepfakes started uploading modified pornographic videos that appeared to feature well-known celebrities. It was perhaps a natural, even expected, outgrowth of the established practice of photoshopping celebrity faces onto still pornographic images, but that doesn't mean anyone was prepared for it.

According to Vice, Deepfakes' creations were a hit in certain subreddits, and the resulting media attention helped propel the notion of deep fakes into the public eye. In a conversation with Vice, Deepfakes noted that they used publicly available images found on Google, stock photo repositories, and YouTube videos. They then trained the AI on the target media: in this case, pornographic videos and images of the chosen celebrity. According to Deepfakes, the system worked by recognizing the target face and treating other images as distortions of that face. Once the algorithm had a good handle on the desired components, it was able to map the face of a celebrity onto the body of an adult film star, to everyone's amazement and horror.

Adobe lets you say anything

In 2016, during a press event, Adobe announced new software called VoCo, which promised to do for audio what Photoshop did for still images (via Vice). The software needs only about 20 minutes' worth of sample audio to build a model of a person's voice. Public figures have countless hours of audio from speeches, interviews, and on-screen performances, not to mention radio and podcasts, giving anyone ample material to stitch together. In fact, many of us have put more than 20 minutes' worth of our own voices online, making all of us potential targets.

Then, using that small amount of training audio, the user can generate new audio spoken in the target voice just by typing what they want it to say. In the demonstration, Adobe's Zeyu Jin takes a clip of Keegan-Michael Key speaking about an award. Jin then reorders or replaces certain words. Finally, Jin cuts out a section of the sentence and replaces it with entirely new words.

The result is an audio file of a public figure saying words they never said. Fortunately, Adobe said it had thought ahead about watermarking the generated files or otherwise making them identifiable.

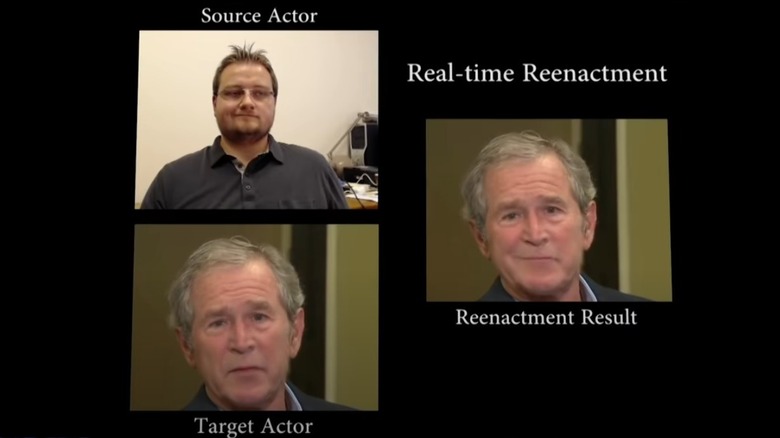

Face2Face

In December of 2018, researchers from Germany and the United States published a paper on Face2Face, a system they first presented in 2016, in the journal Communications of the ACM. In it, they describe and demonstrate a process that uses facial capture to modify the expressions and mouth movements of a person in a video.

Face2Face uses two video streams to achieve its effect. The first is the target video you want to change; the second is a video of you or an actor performing the changes you want. According to the authors, the target video can be taken from any public source, like YouTube, and the second video can be captured using a webcam.

Once your camera is on, the system tracks your facial movements and maps them onto the target video through deformation transfer. If the actor does a decent impression of the target's voice, that's all you need, but even that isn't strictly necessary. Paired with a tool like Adobe's VoCo, a creator could generate audio of a person saying whatever they want, then match it to a faked Face2Face video, controlling someone else's movements and speech like a puppet.

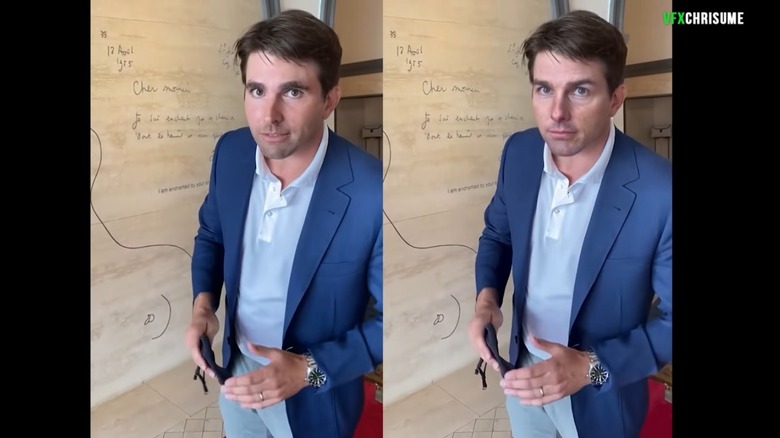

DeepFaceLive

For the most part, until very recently, creating deep fakes has meant taking existing video and manipulating it with training footage and artificial intelligence. In an interview with Today, the viral TikTok creators behind deeptomcruise said it used to take them a week or more to make a clip. A year later, it took only days. As the technology advances, creating deep fakes will only become faster and more accessible, as evidenced by the release of DeepFaceLive.

DeepFaceLive is an open-source tool released on GitHub in 2021 by Russian developer Iperov, who also created the deep fake software DeepFaceLab. According to the Daily Dot, it uses the same kinds of training video datasets as earlier deep fake tools to capture the likenesses of public figures, but it works in real time.

The technology is similar to the Snapchat or TikTok filters you may be familiar with, except that instead of turning you into a dog, it can turn you into a celebrity. The tool also works with common video-calling software like Zoom or Skype, potentially allowing users to pose as other people during live calls.

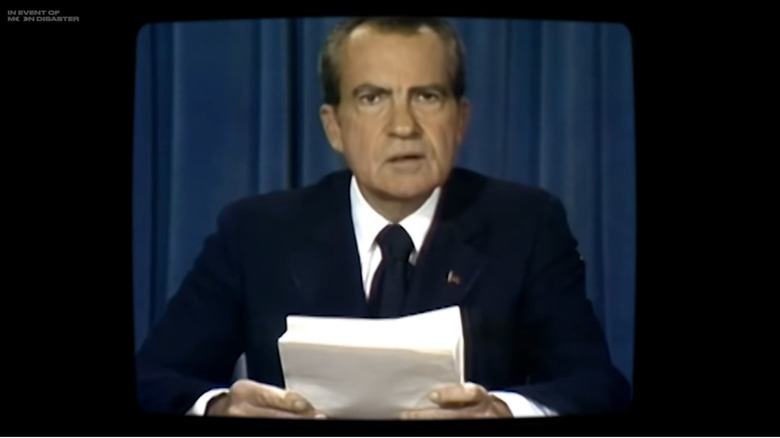

In Event of Moon Disaster

There are obvious concerns about how these technologies might be used in concert — not to mention how they're already being used — to create wholly fictional yet totally convincing videos. However, there are also educational and entertainment applications which could utilize these technologies in a less morally polluted way.

To see how that's being done, we have to cast our minds back to the peak of the Apollo program. In 1969, as Neil Armstrong, Buzz Aldrin, and Michael Collins made the perilous journey to the Moon, U.S. political leaders were preparing for two possible outcomes. In one hand, then-President Nixon held the message we all heard, one celebrating a successful mission to the Moon. In the other was a speech that would have informed the world of failure and tragedy far from home (via MIT).

The folks behind "In Event of Moon Disaster" wanted to illustrate how the world might have looked had Apollo 11 ended in tragedy, while also openly telling viewers that they're watching something fictional. This eight-minute short film takes viewers into an alternate history through the power of deep fakes.

To pull it off, an actor read the speech Nixon never gave in order to capture the right cadence. Video and audio manipulation were then used in concert so that Nixon appears to deliver the speech half a century after it was written.

Doubling every six months

Not so long ago, deep fakes were more interesting than they were concerning. While their future potential was scary, it was the kind of scary you get from watching a horror movie. It's fun to consider when you know the slasher won't come knocking on your door. That might be poised to change.

As the tools get better at what they do and they become more widely available, the sheer number of deep fakes is likely to explode. In fact, according to Discover Magazine, reports put out by Sensity, a company which has been tracking the spread of deep fakes since 2018, show that the total number of deep fakes being created is doubling roughly every six months.

While some of these, like the aforementioned deeptomcruise videos, are fun and probably harmless, the vast majority haven't strayed far from deep fakes' roots. According to Sensity, roughly 96% of the deep fakes created are pornographic.

The speed at which deep fakes are evolving and spreading far exceeds growth in other technological arenas, where progress such as the transistor counts behind Moore's law tends to double roughly every two years (via Our World in Data). Before long, it might become hard to discern what's real and what isn't.
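The gap between those two rates is easier to feel with a little arithmetic. If a quantity starts at N_0 and doubles every T months, then after t months it has grown to:

```latex
N(t) = N_0 \cdot 2^{t/T}
```

Taking the figures above at face value, doubling every six months (T = 6) means a 2^(24/6) = 16-fold increase over two years, while doubling every two years (T = 24) means just 2^(24/24) = 2-fold over the same span. Over a decade the gap becomes 2^20, roughly a millionfold, versus 2^5 = 32.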

Things aren't as bad as they seem

For now, the risk of becoming the subject of a deep fake is largely limited to political figures and celebrities. While deep fakes may erode our ability to trust what we see and hear, it's unlikely that the average person will become the victim of a malicious deep fake. Whatever this says about our species, the overwhelming majority of deep fake content remains pornographic in nature, which also limits the scope of potential targets. However, as deep fakes keep multiplying, that scope may eventually widen.

Thankfully, all is not lost. While creators are pushing the boundaries of what the technology can do, researchers are advancing new detection methods. Until recently, the leading detection technologies could successfully identify a deep fake about 65% of the time according to a study in the Proceedings of the National Academy of Sciences. Under certain circumstances, people perform a little better. New research may close the gap.

Researchers at UC Riverside developed a method capable of detecting deep fakes with approximately 99% accuracy. It's capable of detecting not only when an entire face has been changed but also when the expressions have been manipulated. It can even identify which portions of the face have been altered. Of course, you can only detect fakes if you know to look for them and have the right tools. We'll all need to remain vigilant as deep fakes become an increasing part of our lives.