The Irony: Microsoft Makes Depth-Tracking Phone While Ignoring Kinect

Microsoft may have conspicuously ignored Kinect at its Gamescom event today, going so far as to leave the motion sensor out of all three of its new Xbox One bundles, but that doesn't mean the rest of the company is giving up on clever camera tech. Microsoft Research has been working on turning a regular smartphone into a depth camera, delivering Kinect- and Google Project Tango-style scanning and tracking at a fraction of the complexity.

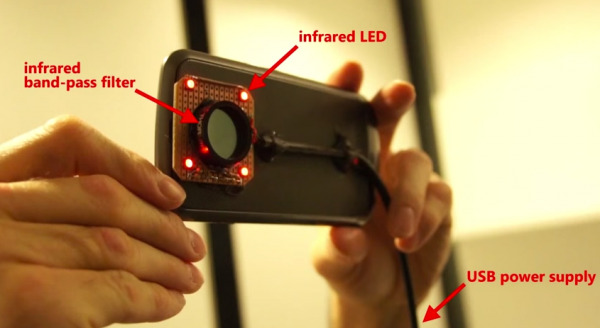

First, the ten-person team removed the IR cut filter from the camera of a Galaxy Nexus and then fitted an IR bandpass filter, making the sensor sensitive only to a specific band of infrared light. A ring of six IR LEDs mounted around the lens provides diffuse illumination that is invisible to the human eye, while drawing just 35 mW.

From there, clever computation (hybrid classification-regression forests, in fact) works out how close an object such as a hand or face is, based not only on its own intensity in the near-IR range but also on the relative intensity of the pixels around it.
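Why brightness carries depth information at all can be sketched with an idealized model: for a diffuse surface lit by a source next to the lens, received intensity falls off roughly with the inverse square of distance, so depth scales with one over the square root of intensity. The sketch below assumes a single hypothetical constant `k` folding together LED power, surface reflectance and sensor gain. Real skin, hair and clothing reflect differently, so one pixel's brightness is ambiguous between a dark nearby surface and a bright distant one, which is one reason to also look at neighboring pixels. The `comparison_features` helper is a hypothetical illustration of such neighborhood inputs, not the features Microsoft Research actually trained on.

```python
import math


def idealized_depth(intensity, k=1.0):
    """Depth of a diffuse surface lit by an IR source next to the lens.

    Assumes intensity = k / depth**2, where k is a hypothetical calibration
    constant folding together LED power, surface reflectance and sensor gain.
    """
    if intensity <= 0:
        raise ValueError("intensity must be positive")
    return math.sqrt(k / intensity)


def comparison_features(image, x, y, offsets):
    """Return a pixel's own intensity plus its differences to offset neighbors.

    image: list of rows of IR intensities; offsets: hypothetical (dx, dy) pairs.
    Neighbors outside the image are assumed to read 0.0 (no IR return).
    """
    h, w = len(image), len(image[0])
    center = image[y][x]
    features = [center]
    for dx, dy in offsets:
        nx, ny = x + dx, y + dy
        neighbor = image[ny][nx] if 0 <= nx < w and 0 <= ny < h else 0.0
        features.append(center - neighbor)
    return features


if __name__ == "__main__":
    # Under this model, four times the intensity means half the distance.
    print(idealized_depth(4.0), idealized_depth(1.0))  # 0.5 1.0
    ir = [[1.0, 2.0], [3.0, 4.0]]
    print(comparison_features(ir, 0, 0, [(1, 0), (0, 1), (-1, 0)]))
    # [1.0, -1.0, -2.0, 1.0]
```

In a tree-based model, each split node would typically threshold one feature like these; which offsets are useful is something training would discover rather than something fixed by hand.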

With some initial training, the Microsoft Research team says, the camera generalizes surprisingly well across a range of objects and users. Combining depth and IR data even allows individual hand parts, such as the different joints of the fingers, to be told apart.
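The "hybrid classification-regression" idea, first deciding which coarse depth range a pixel falls into and then regressing a precise value within that range, can be illustrated without forests at all. The toy below is a simplified stand-in, not Microsoft's method: it uses a single scalar feature, a nearest-centroid classifier for the coarse stage and a least-squares line per bin for the fine stage. The function names, the `bin_edges` parameter and the training pairs are all hypothetical; a real forest would combine many trees over many features.

```python
from statistics import mean


def fit_hybrid(samples, bin_edges):
    """Train a toy two-stage model: coarse depth bin, then per-bin regression.

    samples: list of (feature, depth) pairs with one scalar feature each.
    bin_edges: hypothetical depth boundaries, e.g. [0.6] splits near from far.
    Returns {bin_label: (mean_feature, slope, intercept)}.
    """
    bins = {}
    for feature, depth in samples:
        label = sum(depth >= edge for edge in bin_edges)  # index of depth bin
        bins.setdefault(label, []).append((feature, depth))
    model = {}
    for label, pts in bins.items():
        xs = [f for f, _ in pts]
        ys = [d for _, d in pts]
        mx, my = mean(xs), mean(ys)
        var = sum((x - mx) ** 2 for x in xs)
        cov = sum((x - mx) * (y - my) for x, y in pts)
        slope = cov / var if var else 0.0  # flat line if only one distinct x
        model[label] = (mx, slope, my - slope * mx)
    return model


def predict_hybrid(model, feature):
    # Stage 1 (classification): pick the bin whose mean feature is closest.
    label = min(model, key=lambda lbl: abs(model[lbl][0] - feature))
    # Stage 2 (regression): apply that bin's fitted line.
    _, slope, intercept = model[label]
    return slope * feature + intercept


if __name__ == "__main__":
    # Hypothetical training pairs: (IR intensity feature, depth in meters).
    training = [(10, 0.25), (8, 0.5), (2, 0.75), (1, 1.0)]
    model = fit_hybrid(training, bin_edges=[0.6])
    print(predict_hybrid(model, 9))    # 0.375
    print(predict_hybrid(model, 1.5))  # 0.875
```

Splitting the problem this way lets each regressor specialize in a narrow depth range, which is the intuition behind pairing a classifier with regressors.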

As the researchers point out, several cameras and sensors can already do depth sensing on mobile devices; the initial Project Tango tablet, for instance, uses a range of different modules. However, their "need for custom sensors, high-power illumination, complex electronics, and other physical constraints will often limit scenarios of use, particularly when compared to regular cameras," they argue.

In contrast, this IR system could be retrofitted to an existing camera, though the downside is that the current modifications prevent the camera from capturing visible-light images. Using an RGBI sensor, as offered by CMOS vendors such as OmniVision and Aptina, would let the camera see both IR and visible light, though that, of course, couldn't be retrofitted to existing devices.
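On an RGBI (RGB-IR) sensor, some pixels in the color-filter array sit behind IR-passing filters instead of red, green or blue ones, so a single exposure carries both kinds of data. A minimal sketch of pulling those channels apart, assuming a hypothetical 2x2 tile with one IR pixel; actual layouts vary between vendors and models, and a real pipeline would also interpolate (demosaic) each plane back to full resolution and typically correct for IR leaking into the color pixels.

```python
def split_rgbi_mosaic(raw, layout=(("R", "G"), ("IR", "B"))):
    """Split a raw RGB-IR mosaic into quarter-resolution channel planes.

    raw: list of rows of sensor values, with even width and height.
    layout: the repeating 2x2 filter tile; this default is a hypothetical
    arrangement, since real RGB-IR sensors differ between vendors.
    """
    h, w = len(raw), len(raw[0])
    if h % 2 or w % 2:
        raise ValueError("mosaic dimensions must be even")
    planes = {}
    for dy in range(2):
        for dx in range(2):
            name = layout[dy][dx]
            # Every second row starting at dy, every second column starting at dx.
            planes[name] = [raw[y][dx::2] for y in range(dy, h, 2)]
    return planes


if __name__ == "__main__":
    raw = [[1, 2, 3, 4],
           [5, 6, 7, 8]]
    planes = split_rgbi_mosaic(raw)
    print(planes["IR"])  # [[5, 7]]
```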

The technology isn't limited to smartphone cameras, and the Microsoft Research team also demonstrates how a regular USB webcam can be converted into a depth-sensing camera in ten minutes. Although its sensitivity and accuracy aren't on a par with commodity sensors built for the purpose, the low cost of the modification does open new doors: Google's Project Tango slate, for instance, costs a not-inconsiderable $1,024.

3D tracking seems to be having a tumultuous time within Microsoft at the moment. On the one hand, there are projects like this from Microsoft Research and ambitions to put Kinect into smart homes and smartphones; on the other, Microsoft is believed to have axed its 3D Windows Phone flagship, deeming the technology not yet ready for primetime. Meanwhile, Kinect has gone from a mandatory part of the Xbox One experience to something of an afterthought.

Microsoft Research will present the depth-sensing hack at SIGGRAPH 2014 this month. The company is also showing Hyperlapse, a clever computational video-processing system that can turn jerky footage from body-worn cameras into smooth clips.

Source: Microsoft Research