New AI Think-Tank Hopes To Get Real On Existential Risk

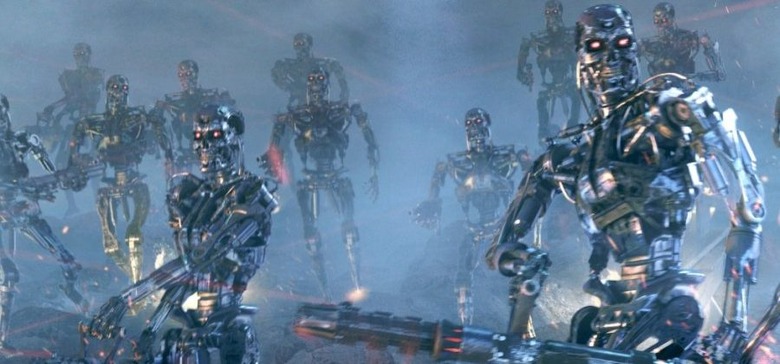

A future where humanity is subjugated by AIs, hunted down by robots, or consumed by nanobot goo may be the stuff of today's sci-fi, but according to Cambridge University experts it should also be on the curriculum. A tech-minded trio, made up of a scientist, a philosopher, and a software engineer, has proposed a think-tank dedicated to so-called "extinction-level" threats of our own creation. The proposed Centre for the Study of Existential Risk (CSER) would examine the potential perils of today's cutting-edge research.

Those risks could come from computer intelligences getting out of hand and out-thinking their human programmers, or from autonomous self-replicating machines that consume voraciously in their drive to reproduce. "At some point, this century or next, we may well be facing one of the major shifts in human history – perhaps even cosmic history – when intelligence escapes the constraints of biology," philosopher and CSER co-founder Huw Price argues.

"Nature didn't anticipate us, and we in our turn shouldn't take AGI [artificial general intelligence] for granted," Price suggests. "We need to take seriously the possibility that there might be a 'Pandora's box' moment with AGI that, if missed, could be disastrous."

Price, along with Skype co-founder and software engineer Jaan Tallinn and scientist Lord Martin Rees, proposes the CSER as a way to pre-empt, or at least partially prepare for, a future in which humankind is no longer so in control of its ecosystem. "Take gorillas, for example," Price says. "The reason they are going extinct is not because humans are actively hostile towards them, but because we control the environments in ways that suit us, but are detrimental to their survival."

The CSER has already gathered a shortlist of science, law, risk, computing, and policy experts to contribute as advisors to the new Centre, with an emphasis on shifting perceptions of AI-style outcomes from easily dismissed sci-fi to legitimate subjects of sociological study. The trio of co-founders say they will produce a prospectus for the Centre in the coming months.

"With so much at stake," Price concludes, "we need to do a better job of understanding the risks of potentially catastrophic technologies."