Jigsaw's Tune Chrome Extension Filters Out Toxic Comments

The Internet is something of an equal-opportunity tool that empowers both the good and the bad. The anonymity it provides has encouraged frightened voices to speak up against oppressive powers, but it has also emboldened less conscientious actors to say things they would never say face-to-face. That's why Alphabet's Jigsaw is working on Tune, an experimental Chrome extension that lets users tune out toxic comments for some temporary peace of mind.

You may have barely heard of Jigsaw. It's one of those Alphabet units that works quietly in the hope of making the Internet safer, using the same technologies Google uses to power its services and devices. It has already launched quite a few experiments, such as Intra, which protects against DNS manipulation and censorship, and Perspective, which spots abusive language on web pages.

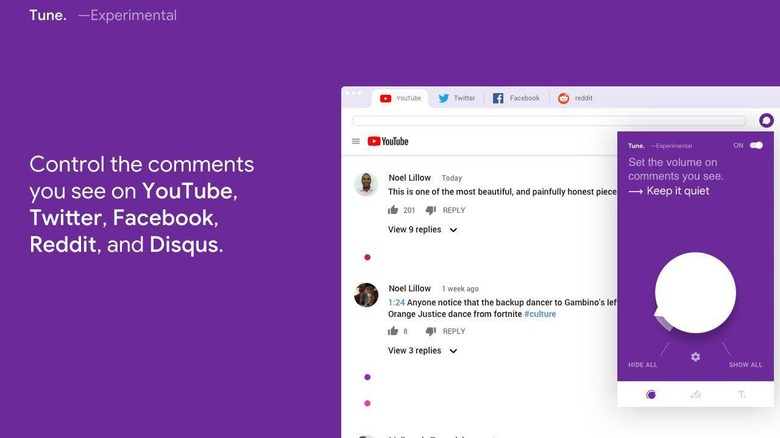

Tune builds on that last one, using the same machine learning that identifies abusive language in comments. But instead of merely flagging such comments, Tune actually lets you hide them from view.

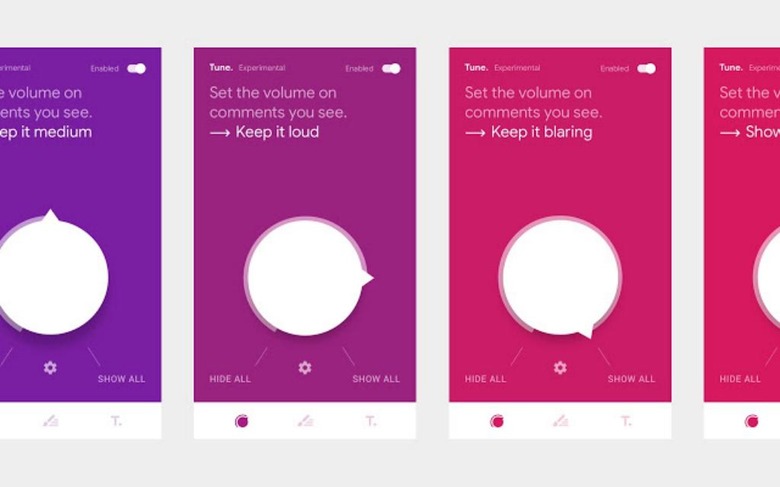

The extension lets you set how much toxicity you want to see or hide, on the assumption that you might not want to hide every such comment, at least not forever. It simply makes the filtered comments invisible while leaving a visual cue that something is there. If that cue tempts you to reveal them, whether to do so is, at least, your decision to make.
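To make the "set a level" idea concrete, here is a minimal sketch of threshold-based hiding. It is not Tune's actual code: it assumes each comment already carries a toxicity score between 0 and 1 (the kind of score a model such as Perspective produces), and the names `Comment`, `tune_comments`, and `PLACEHOLDER` are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical placeholder shown in place of a hidden comment (the "visual cue").
PLACEHOLDER = "[hidden comment]"


@dataclass
class Comment:
    text: str
    # Hypothetical toxicity score in [0.0, 1.0]; higher means more likely toxic.
    # In practice a model such as Perspective would supply this value.
    toxicity: float


def tune_comments(comments: List[Comment], threshold: float) -> List[str]:
    """Return what the reader sees for each comment.

    Comments scoring at or above `threshold` are replaced by PLACEHOLDER,
    so their position stays visible but their text does not. A lower
    threshold hides more; a threshold above 1.0 hides nothing.
    """
    return [PLACEHOLDER if c.toxicity >= threshold else c.text for c in comments]


if __name__ == "__main__":
    feed = [
        Comment("Great video, thanks!", 0.03),
        Comment("This take is pretty dumb.", 0.55),
        Comment("You are a complete idiot.", 0.92),
    ]
    # With a threshold of 0.5, the second and third comments are masked.
    for line in tune_comments(feed, threshold=0.5):
        print(line)
```

Keeping a placeholder rather than deleting the comment outright mirrors the article's description of a visible cue. How a slider position maps to a numeric threshold is an assumption here; the real extension may expose its levels differently.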

Like Jigsaw's other apps, Tune is still marked as experimental, and it works only on YouTube, Facebook, Twitter, Reddit, and Disqus. Jigsaw admits that it isn't perfect yet and may make mistakes, including false positives. The point, however, isn't to be a final solution to the problem of toxicity but to serve as a launching pad for developing other machine learning tools.