Google Glass Controls And Artificial Intelligence Detailed

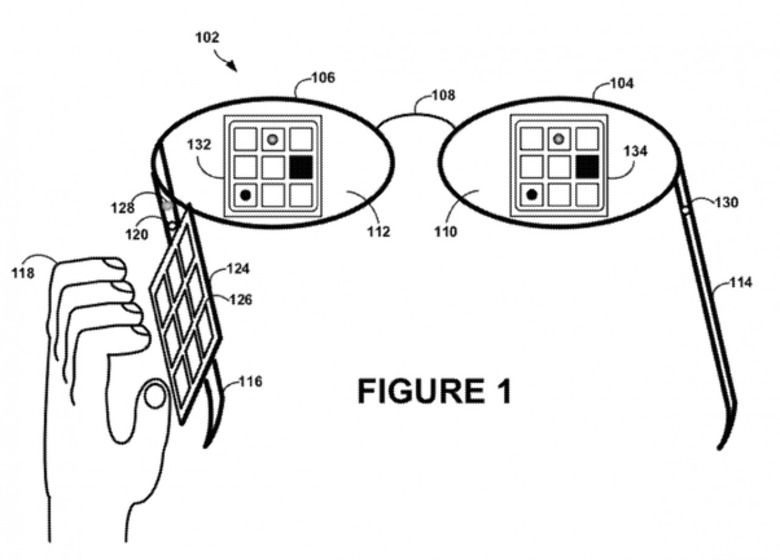

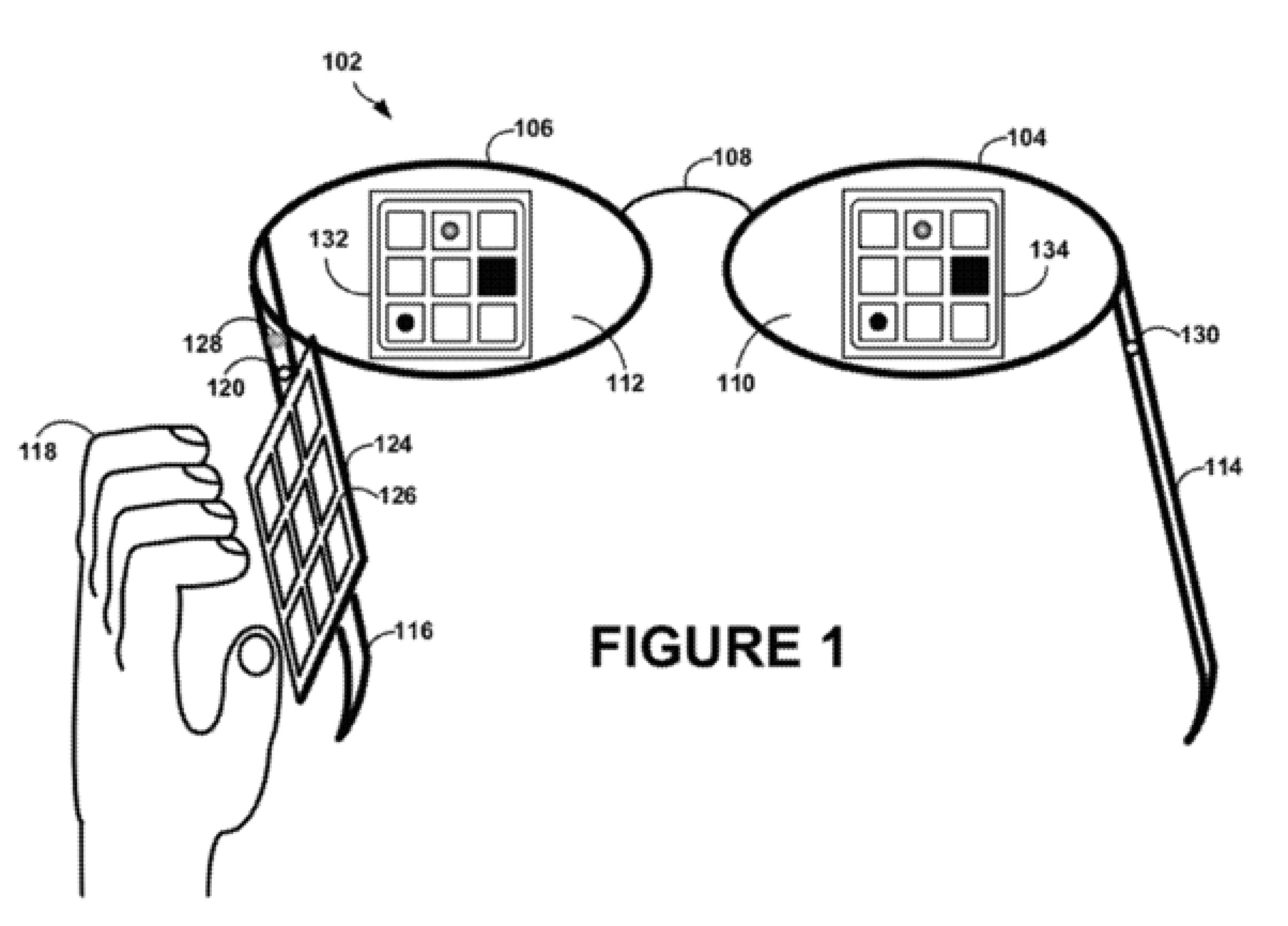

Google's cautious approach to allowing people to play with Project Glass means the UI of the wearable computer is something of a mystery, but a new patent application could spill some of the secrets. The wordy "Head-mounted display that displays a visual representation of physical interaction with an input interface located outside of the field of view" details a system whereby a preview of a wearable's controls, such as the side-mounted touchpad on Google Glass, is floated virtually in the user's line of sight. The application also suggests Glass might maintain its own "self-awareness" of the environment, reacting as appropriate without instruction from the user.

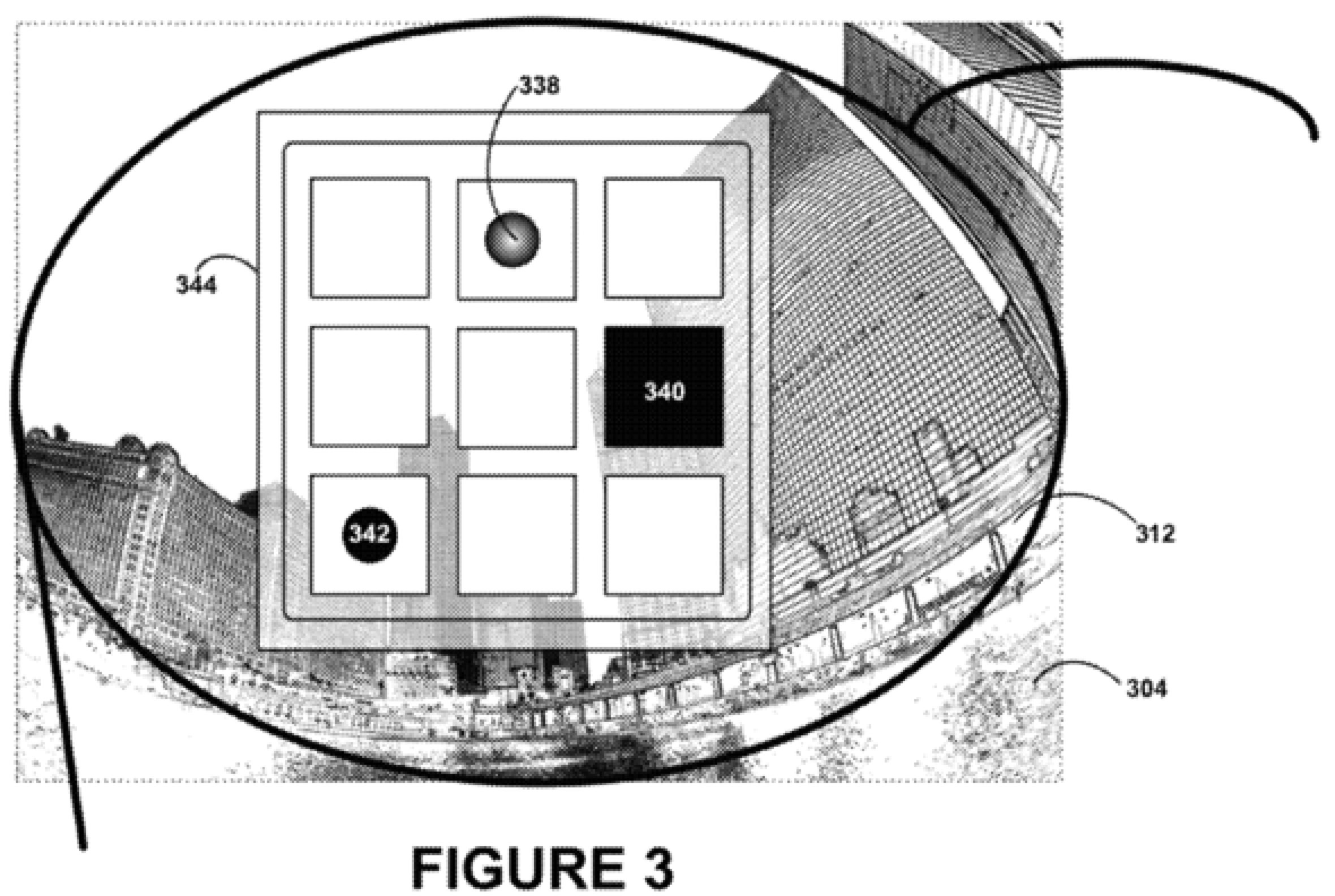

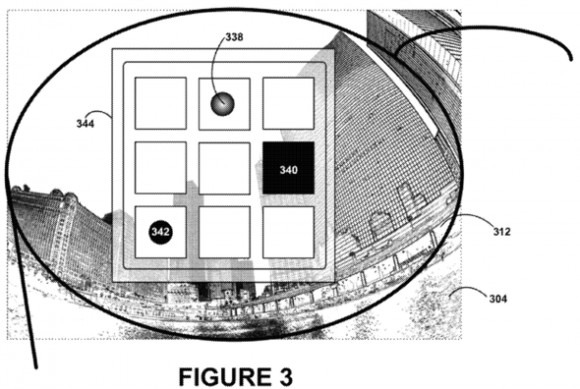

In Google's patent application images, the side control is a 3x3 grid of buttons – which could be physical or virtual, with a single touchpad zoned to mimic nine keys – and the corresponding image is projected into the eyepiece. Different visual indicators could be used, Google suggests, such as different colored dots or various shapes, and the touchpad could track the proximity of fingers rather than only their touch, with different indicators (or shades and brightness levels of color) used to distinguish between proximity and actual contact.

"As the wearer's hand gets closer to a pad [of] the touch pad, a dimmed colored dot 338 [in diagram below] may become responsively brighter as shown by the colored dot 342. When the touch pad recognizes a contact with pad 340, the pad 340 may light up entirely to indicate to the wearer the pad 340 has been pressed." Google
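To make the zoned touchpad and its hover feedback concrete, here is a minimal sketch of how a single touch surface might be split into a 3x3 grid, with a dot that brightens as a finger approaches and a pad that lights up on contact. The pad dimensions, the hover range, and every function and field name here are hypothetical assumptions for illustration; the patent application gives no such figures or code.

```python
from dataclasses import dataclass

# Hypothetical hover-sensing range in millimetres; the application gives no figure.
HOVER_RANGE_MM = 20.0


@dataclass
class KeyFeedback:
    key: int           # 0..8, row-major position in the 3x3 grid
    brightness: float  # 0.0 (off) to 1.0 (fully bright) for the hover dot
    pressed: bool      # True when the pad registers contact and lights up entirely


def key_for(x: float, y: float, width: float, height: float) -> int:
    """Zone a single touchpad into a 3x3 grid and return the key under (x, y)."""
    if not (0 <= x <= width and 0 <= y <= height):
        raise ValueError("touch point lies outside the pad")
    col = min(int(3 * x / width), 2)   # clamp the far edge into the last column
    row = min(int(3 * y / height), 2)  # clamp the far edge into the last row
    return row * 3 + col


def feedback(x: float, y: float, distance_mm: float,
             width: float = 30.0, height: float = 30.0) -> KeyFeedback:
    """Map a finger position and height above the pad to visual feedback (hypothetical pad size)."""
    key = key_for(x, y, width, height)
    if distance_mm <= 0.0:
        # Contact: the whole pad lights up.
        return KeyFeedback(key, 1.0, True)
    # Hovering: the dot brightens linearly as the finger approaches, off beyond range.
    closeness = max(0.0, 1.0 - distance_mm / HOVER_RANGE_MM)
    return KeyFeedback(key, closeness, False)
```

A linear brightness ramp is just the simplest choice; a real implementation could use any monotonic curve, or switch to a different shape or color at contact as Google also suggests.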

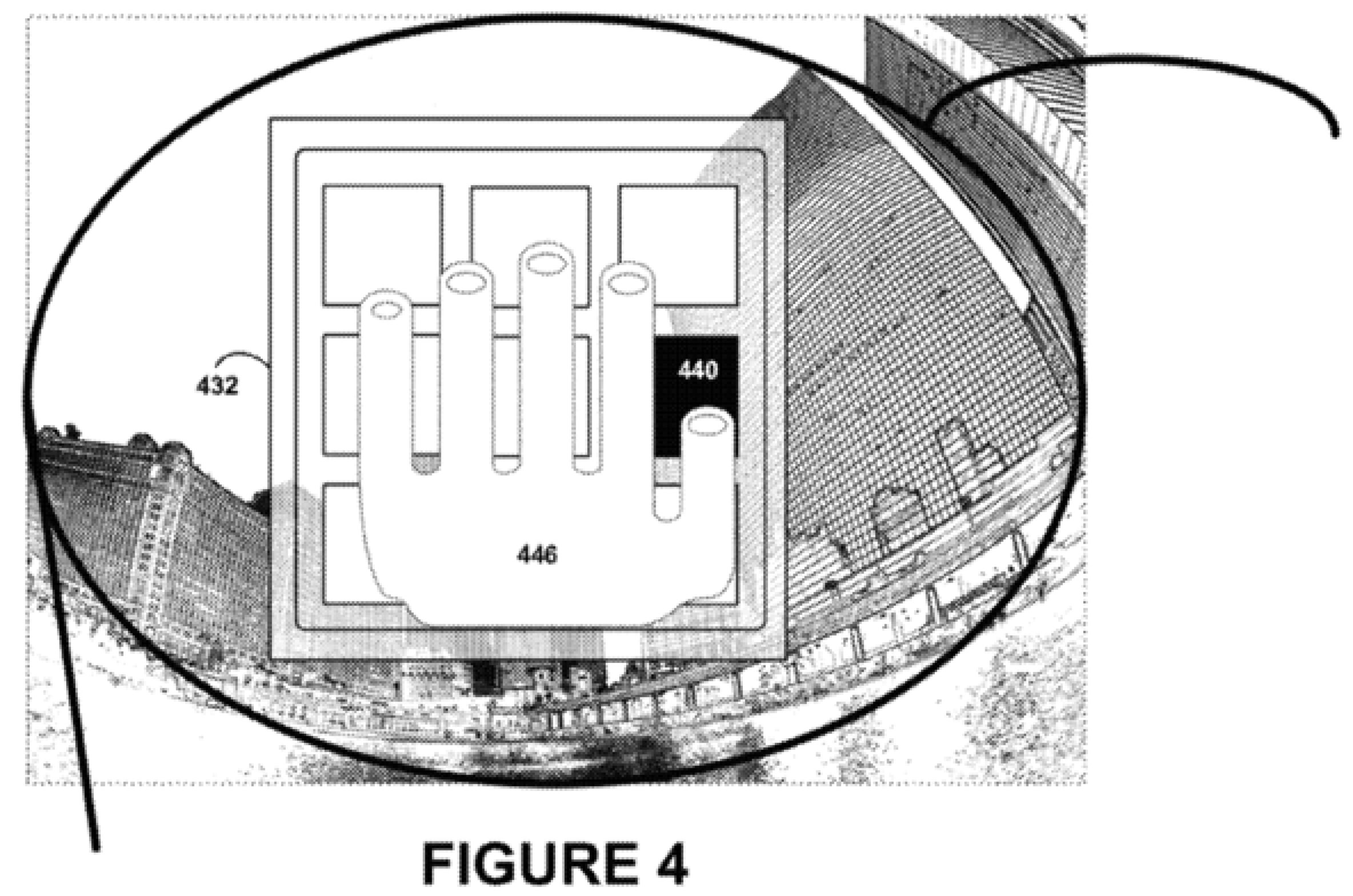

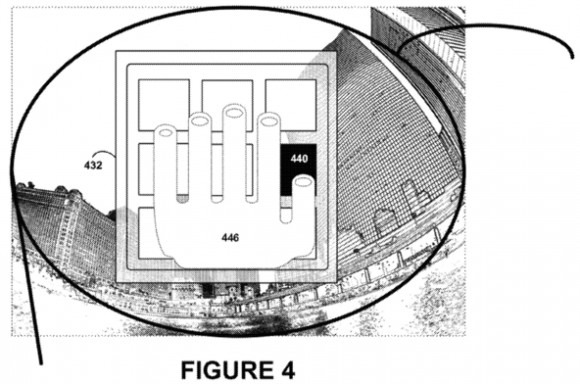

However, that's only a basic interpretation of Google's ideas. The company also suggests that a more realistic use of virtual graphics could float a replica of the user's hand – even mimicking physical characteristics such as their actual fingernails, wrinkles and hairs, which Google reckons would make it more believable – in such a way that the control pad feels like it's actually in front of them.

"This feedback may 'trick' the wearer's brain into thinking that [the] touch pad is directly in front of the wearer as opposed to being outside of their field of view. Providing the closest resemblance of the wearer's hand, the virtual mirror box may provide a superior degree of visual feedback for the wearer to more readily orient their hand to interact with touch pad." Google

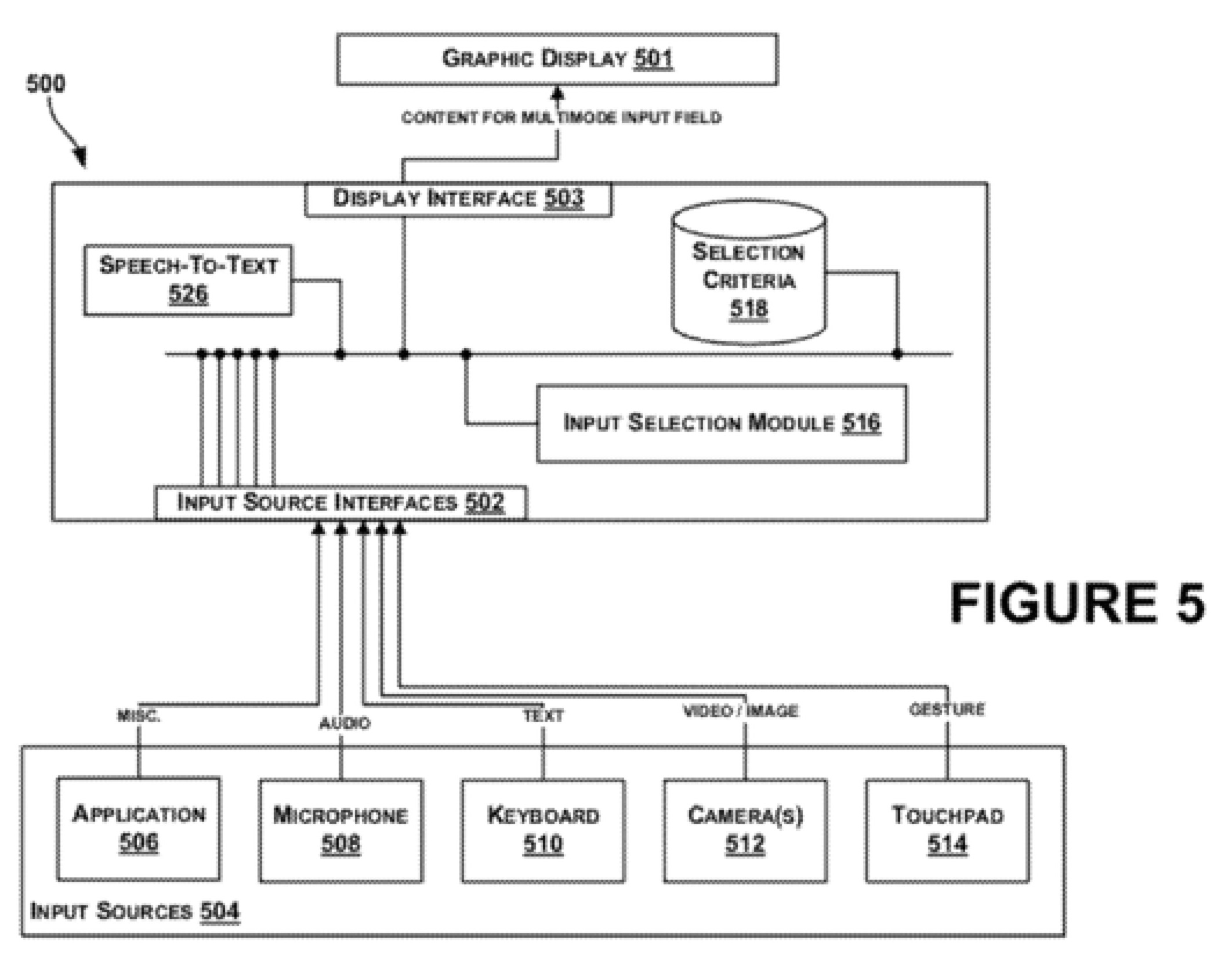

Button control isn't the only strategy Google has for interacting with Glass – there's the possibility of speech, input from the camera, and a wireless keyboard of some sort, among other things – but the headset would be able to prioritize UI elements depending on context and/or wearer preference. "In the absence of an explicit instruction to display certain content," the patent application suggests, "the exemplary system may intelligently and automatically determine content for the multimode input field that is believed to be desired by the wearer."

Glass could also work in a more passive way, reacting to the environment rather than to direct instructions from the wearer. "A person's name may be detected in speech during a wearer's conversation with a friend, and, if available, the contact information for this person may be displayed in the multimode input field," the application suggests, which could be useful at social gatherings or business meetings. Similarly, "a data pattern in incoming audio data that is characteristic of car engine noise" could trigger a navigation or mapping app, on the assumption that the wearer is likely about to travel somewhere; the audio characteristics might even be distinctive enough to identify the user's own car.
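The two passive triggers described above amount to simple context rules. The sketch below assumes that speech recognition and audio pattern matching have already happened upstream and arrive as labeled events; the event kinds, the contact store, and the returned strings are all hypothetical stand-ins, not anything specified in the patent application.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContextEvent:
    kind: str   # hypothetical labels, e.g. "name_in_speech" or "audio_pattern"
    value: str  # the detected name, or the label of the matched audio pattern


# Hypothetical address book standing in for the wearer's contacts.
CONTACTS = {"Alice Smith": "alice@example.com"}


def suggest_content(event: ContextEvent) -> Optional[str]:
    """Choose content for the multimode input field without an explicit instruction."""
    if event.kind == "name_in_speech":
        info = CONTACTS.get(event.value)
        # Only display something if contact details are available.
        return f"contact: {event.value} <{info}>" if info else None
    if event.kind == "audio_pattern" and event.value == "car_engine":
        # Engine noise suggests the wearer is about to travel somewhere.
        return "launch: navigation"
    # No rule matched: leave the field alone.
    return None
```

Returning `None` when nothing matches keeps the system from cluttering the display, which fits the "if available" wording in the quoted passage.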

Input from various sensors could be combined, too; Google's application gives the example of predicting which input methods are most likely to be used based on the weather. For instance, while the touchpad or keypad might be the primary default, if Glass senses that the ambient temperature is low enough to suggest the wearer has put on gloves, it could switch to prioritizing audio input through the microphone.
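The glove example boils down to re-ranking input methods from a sensor reading. Here is a minimal sketch, assuming a hypothetical temperature threshold of 5 °C and only two input modes; the application names neither a threshold nor any such function.

```python
from enum import Enum
from typing import List


class InputMode(Enum):
    TOUCHPAD = "touchpad"
    MICROPHONE = "microphone"


# Hypothetical threshold below which the wearer is assumed to be wearing gloves.
GLOVE_THRESHOLD_C = 5.0


def rank_input_modes(ambient_temp_c: float) -> List[InputMode]:
    """Return input modes in priority order, most likely first."""
    # The touchpad is the primary default.
    modes = [InputMode.TOUCHPAD, InputMode.MICROPHONE]
    if ambient_temp_c < GLOVE_THRESHOLD_C:
        # Gloves are likely, so a fingertip touchpad is awkward: prefer speech.
        modes.reverse()
    return modes
```

Returning a ranked list rather than a single mode leaves the other methods available, so a gloved wearer could still fall back to the touchpad.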

"For example, a wearer may say 'select video' in order to output video from camera to the multimode input field. As another example, a wearer may say 'launch' and then say an application name in order to launch the named application in the multimode input field. For instance, the wearer may say 'launch word processor,' 'launch e-mail,' or 'launch web browser.'" Google
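The spoken commands in that passage follow a verb-then-target pattern. A minimal parser might look like the sketch below, assuming a speech recognizer has already produced a text transcript; the function name and the `(action, target)` return shape are illustrative assumptions.

```python
from typing import Optional, Tuple

# The two command verbs mentioned in the quoted passage.
KNOWN_VERBS = ("select", "launch")


def parse_command(utterance: str) -> Optional[Tuple[str, str]]:
    """Turn a transcribed phrase into an (action, target) pair, or None if unrecognized."""
    # Split off the first word; the remainder (e.g. "word processor") is the target.
    parts = utterance.strip().lower().split(maxsplit=1)
    if len(parts) < 2:
        return None  # a bare verb with no target
    verb, target = parts
    if verb in KNOWN_VERBS:
        return (verb, target)
    return None
```

For example, `parse_command("launch web browser")` yields `("launch", "web browser")`, which a launcher could then match against installed application names.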

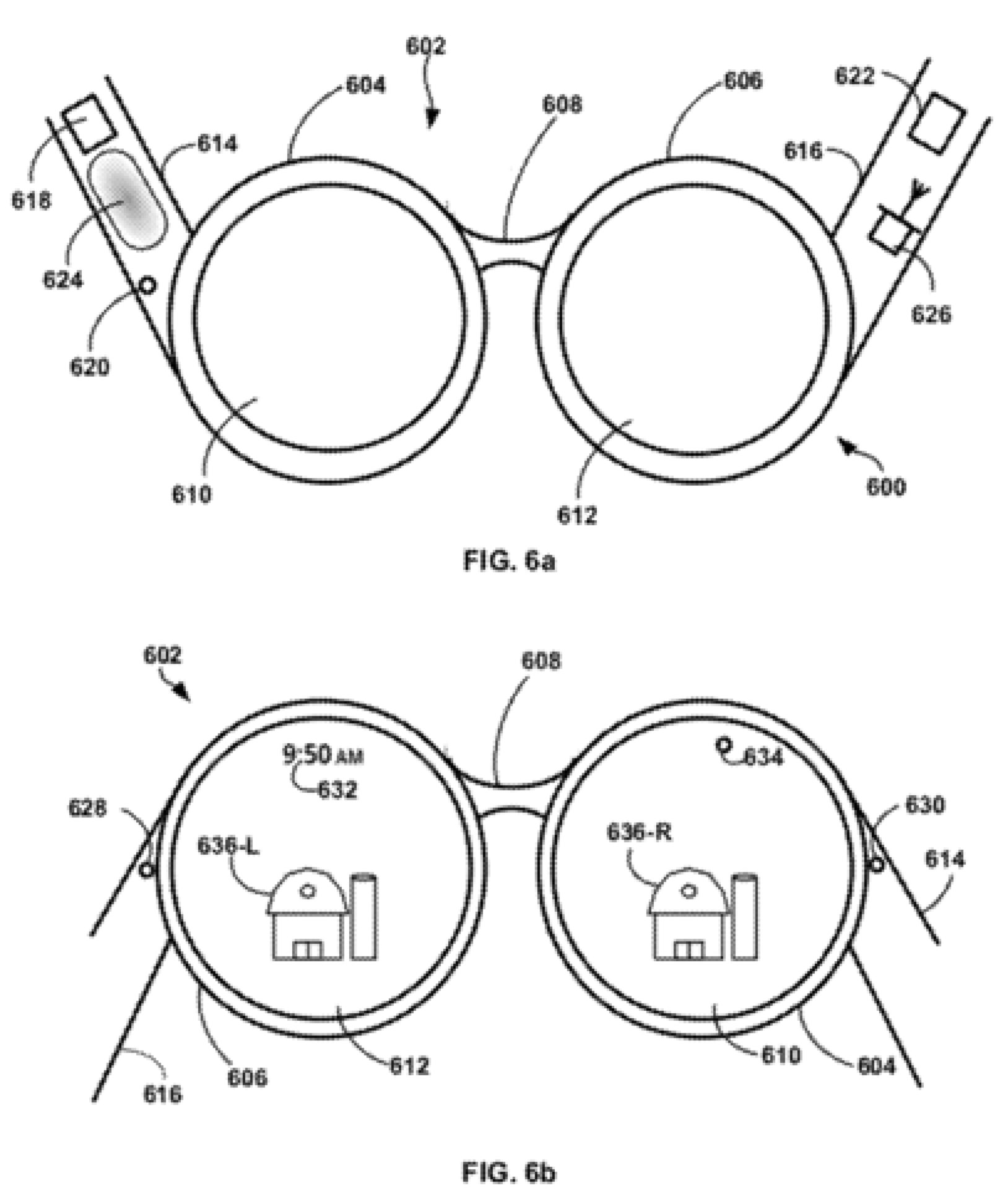

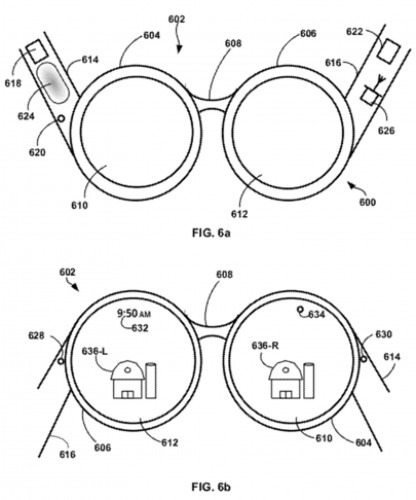

Although the prototype Glass headsets we've seen so far have all stuck to a similar design – an oversized right arm, with a trackpad, camera and single transparent display section hovering above the line of sight – Google isn't leaving any design possibility unexplored. Future Glass variants could, the patent application suggests, provide displays for one or both eyes, built into a pair of eyeglasses or attached as an add-on frame. The displays themselves could use LCD or other technologies, or even a low-power laser that draws directly onto the wearer's retina.

Meanwhile, more than one camera could be fitted – Google suggests integrating a second into the trackpad, so as to directly watch the user's fingers, but a rear-facing camera could be handy for "eyes in the back of your head" – and the headset could either be fully integrated with its own processor, memory and other components for standalone use, or rely on a tethered control device such as a smartphone. The touchpad could have raised dots or other textures so it can be navigated more easily with a fingertip.

Google has already begun taking pre-orders for the Google Glass Explorer Edition, which will begin shipping to developers in early 2013. Regular customers should get a version – much cheaper than the $1,500 for the Explorer – within twelve months of that.