Facebook Rosetta AI Finds And Understands Text In Memes

Optical Character Recognition (OCR) is a commonly used technology for pulling text from images, such as scanned pages, without the time-consuming burden of manual transcription. As great as the technology is, Facebook is seeking something better: the ability to pull text from images and understand that text. That's where the company's Rosetta machine learning system comes in.

Facebook explains why it needs to pull text from images: doing so lets screen readers read that content aloud to visually impaired users, and the data is also useful for providing better photo search results. OCR technology alone is limited for these purposes, as it provides the text but nothing more.

AI, on the other hand, is able to retrieve the text and then understand what it means. Facebook built its Rosetta AI as a large-scale machine learning system, the company explained in a recent post on its Code website.

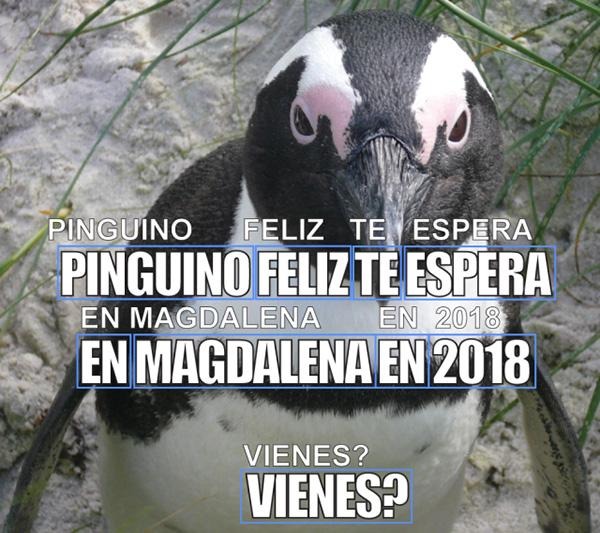

Using this system, Facebook is able to extract the text from an image (such as a meme) as well as from videos shared on Facebook and Instagram. The AI was trained using classifiers to perceive the context of an image or video based on both its visual content and the text it contains.

The system involves two steps: first, detecting regions that may contain text, and second, recognizing the text in those regions using a convolutional neural network (CNN). For the detection step, Facebook uses an approach based on an object detection network called Faster R-CNN.
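The detect-then-recognize structure described above can be illustrated with a toy sketch. This is not Rosetta's code and does not use Faster R-CNN or a CNN: the "image" is a grid of characters, and `detect_regions`, `recognize`, `extract_text`, and `Box` are hypothetical names chosen for illustration. It only shows how a detector that proposes regions can feed a recognizer that reads each one.

```python
from dataclasses import dataclass
from typing import List

# Toy "image": rows of characters where '.' is background.
# This is an assumed stand-in for pixel data, not a real image format.
Image = List[str]


@dataclass(frozen=True)
class Box:
    row: int
    col_start: int
    col_end: int  # exclusive


def detect_regions(image: Image, background: str = ".") -> List[Box]:
    """Step 1 (stand-in for a learned detector): box each run of non-background cells."""
    boxes = []
    for r, line in enumerate(image):
        start = None
        # Appending one background cell closes a run that reaches the row's end.
        for c, ch in enumerate(line + background):
            if ch != background and start is None:
                start = c
            elif ch == background and start is not None:
                boxes.append(Box(r, start, c))
                start = None
    return boxes


def recognize(image: Image, box: Box) -> str:
    """Step 2 (stand-in for a CNN recognizer): read the cropped region."""
    return image[box.row][box.col_start:box.col_end]


def extract_text(image: Image) -> List[str]:
    """Run detection first, then recognition on every proposed region."""
    return [recognize(image, box) for box in detect_regions(image)]


meme = [
    "..........",
    "..ONE.....",
    "..........",
    ".DOES.NOT.",
]
words = extract_text(meme)
print(words)
```

Keeping the two steps as separate functions means either one could be swapped for a learned model without changing the other, which is the main point of the sketch.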

Facebook goes into extensive detail on its website, including example images and graphs to complement its data. In closing, the company says it is also working on extending the text recognition system to languages that don't use the Latin alphabet.

"With a unified model for a large number of languages, we run the risk of being mediocre for each language, which makes the problem challenging. Moreover, it's difficult to get human-annotated data for many of the languages. Although SynthText has been helpful as a way to bootstrap training, it's not yet a replacement for human-annotated data sets. We are therefore exploring ways to bridge the domain gap between our synthetic engine and real-world distribution of text on images."

SOURCE: Facebook