Facebook Messenger Is Scanning All The Links And Photos You Share [Updated]

Facebook is automatically scanning Facebook Messenger conversations for unacceptable content, the company has confirmed, monitoring both the links and the images users share. The revelation came as an unexpected aside in an interview with CEO Mark Zuckerberg.

Facebook has long used automated systems, with varying degrees of efficacy, to track what's publicly posted on users' walls. Less discussed, however, is how the social network's systems track content shared in conversations that users might reasonably consider more private. It turns out Facebook's watchdogs aren't far away there, either.

In a recent interview, Zuckerberg gave an example of how it works: the system had spotted messages related to the ethnic cleansing in Myanmar. At the time, the chief exec said, the system was able to step in and block those messages from being sent through Facebook's network.

The monitoring raises new questions about how Facebook handles privacy, a topic that was already high profile. The company has recently scaled up its privacy tools, giving users more transparent control over the settings that govern how their data is visible to third-party applications and other services. That's partly in response to the incoming General Data Protection Regulation (GDPR), which comes into effect in Europe next month.

However, it's also partly because of revelations about how Facebook handled personal data and third-party apps some years back. Through an API that has since been tightened, app-makers could see personal information not only about the users of their apps and services but also about those users' friends, even though that wider group was never informed. A cache of data on millions of people was subsequently passed to Cambridge Analytica, a company that went on to work with the Trump campaign during the 2016 US presidential election.

Facebook confirmed to Bloomberg that it does, indeed, monitor conversations on Facebook Messenger. However, automated tools do the day-to-day analysis. Human moderators on the "community operations" team only get involved, it's suggested, when specific posts or messages are reported for violating Facebook's "community standards."

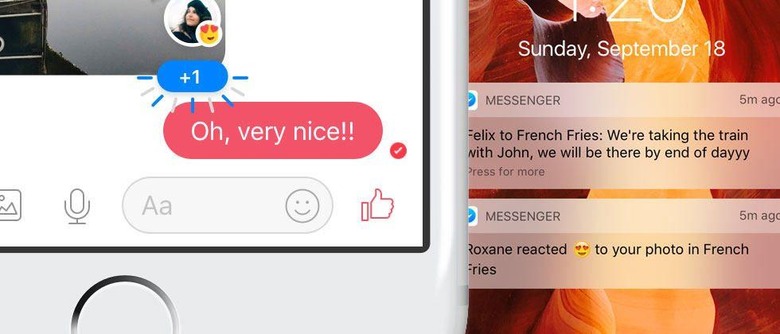

"For example, on Messenger, when you send a photo, our automated systems scan it using photo matching technology to detect known child exploitation imagery or when you send a link, we scan it for malware or viruses," a company spokesperson explained. "Facebook designed these automated tools so we can rapidly stop abusive behavior on our platform."

Blocking exploitative imagery or other illegal content is one thing, but the news is still likely to prompt concerns among rights activists. The fact that it's Facebook deciding what is and isn't "acceptable" is, arguably, as controversial as the automatic scanning itself.

Also unclear is whether Facebook uses that automatically scanned information for other purposes. The technology, after all, could just as readily be used (albeit more ambiguously in moral terms) to tailor advertising to the topics users are discussing. Earlier this week, Zuckerberg declined to say which aspects of the GDPR the social site would roll out globally, beyond the legally required minimums in Europe.

Update: Facebook says that Bloomberg mischaracterized Zuckerberg's comments when it suggested Messenger tracks text. In fact, the company tells us, the automated systems monitor links and images shared in Messenger conversations.

"Keeping your messages private is the priority for us, we protect the community with automated systems that detect things like known images of child exploitation and malware," a Facebook spokesperson told us. "This is not done by humans."