Revealed: Facebook's Secret Rules For Policing Your Content

Facebook has pulled back the curtain on its Internal Enforcement Guidelines, the long-mysterious rules by which its community standards team decides what's appropriate for the social network. Controversy around those rules has circulated for many years, with Facebook accused of giving little insight into the ways it decides which photos, videos, and posts should be removed, and which are "safe" to stay online.

"We decided to publish these internal guidelines for two reasons," Monika Bickert, Vice President of Global Policy Management at Facebook, said today. "First, the guidelines will help people understand where we draw the line on nuanced issues. Second, providing these details makes it easier for everyone, including experts in different fields, to give us feedback so that we can improve the guidelines – and the decisions we make – over time."

Facebook uses a combination of tech and human moderators to sift through what's shared on the site. Potentially problematic content is spotted either by artificial intelligence or through reports from other users, the company says, and is passed on to the more than 7,500 human content reviewers. They work 24/7 in over 40 languages, Facebook says.

For example, Facebook says it won't allow hate speech about "protected characteristics." These include race, ethnicity, national origin, religious affiliation, sexual orientation, sex, gender, gender identity, and serious disability or disease. However, there are only "some protections" around immigration status, and there are three "tiers of severity" by which posts are judged.

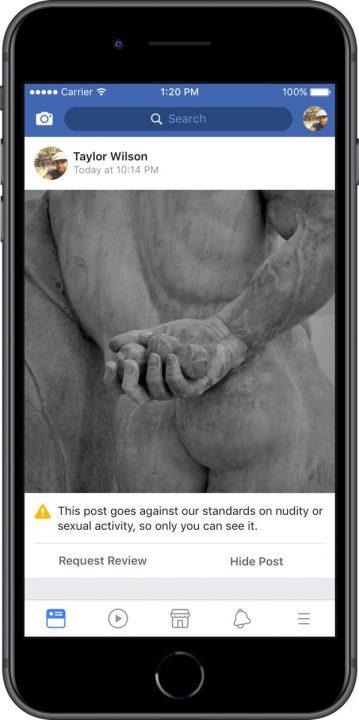

In "adult nudity and sexual activity" under "Objectionable Content," too, there are plenty of provisos. You can't, for example, show a fully nude close-up of somebody's buttocks – unless, that is, they've been "photoshopped on a public figure." Sexual activity in general is banned, unless "posted in a satirical or humorous context."

Even with guidelines, then, the system can't be infallible. Indeed, there are thousands of words split across the multiple sections of the community standards guidance; expecting every team member to reach the same conclusion on every incident is unrealistic. With that in mind, Facebook has also added a new appeals process.

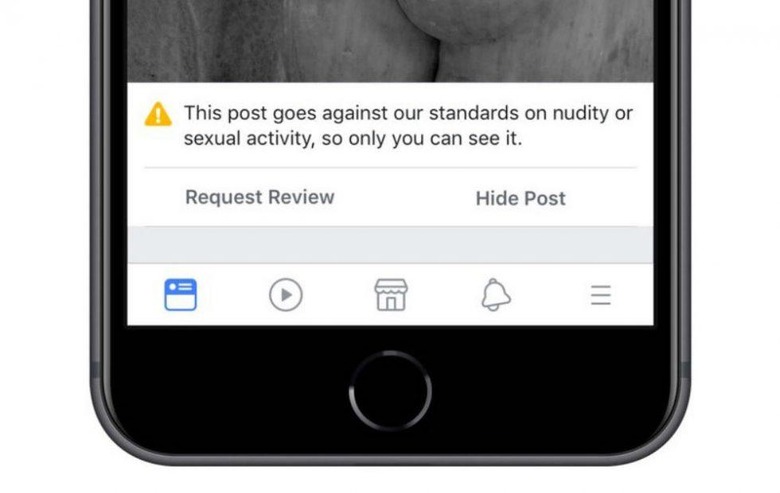

For the first time, there'll be the opportunity to appeal a decision by a content moderator, and get a second opinion. If some content of yours – whether photo, video, or text post – has been removed, you'll get a message explaining how it went against Facebook's standards. There'll be a link to request a review, which will be carried out by a person, and "typically within 24 hours," Facebook promises.

If the second moderator thinks differently, the post will be restored. "We are working to extend this process further, by supporting more violation types, giving people the opportunity to provide more context that could help us make the right decision, and making appeals available not just for content that was taken down, but also for content that was reported and left up," Facebook's Bickert says.

It's all part of Facebook's attempt to better control – and do so more transparently – what's going on across the site, particularly in the wake of controversies around its involvement in the 2016 US presidential election. Come May, the company will debut Facebook Forums: Community Standards, a series of public events that will take place in the US, UK, Germany, France, India, Singapore, and other locations. There, Bickert hopes to "get people's feedback directly."