Apple Maps Is Getting A Huge Reboot

Apple Maps is about to get a huge upgrade, with far more data, crowdsourced updates, and groundwork that could pave the way for future AR smart glasses projects. The mapping app doesn't exactly have the best reputation among navigation apps right now, though it has improved significantly since Apple first launched it in 2012 as part of iOS 6.

That launch was, it's fair to say, something of a disaster, and for many it cemented the idea of Apple Maps as less accurate and more glitchy than rival services like Google Maps. This year, Apple will finally loosen the reins on using third-party navigation apps in CarPlay, bringing Google Maps, Waze, and others to the dashboard when you plug in an iPhone running iOS 12. However, it's also counting on big Apple Maps improvements to dissuade many of you from making the switch.

Making your own maps

The key is that Apple is now taking map-making in-house. In an interview with TechCrunch, the company explained how it plans to progressively shift from third-party mapping data to gathering it all itself. It's a sign of just how important location data is, not only for simple navigation from A to B, but also for localized services in apps and more.

You can't just build a map once, however, and expect it to stay the same. Keeping it current requires mapping and re-mapping, with the data updated to reflect new road layouts, temporary changes, and more. According to Eddy Cue, the Apple SVP in charge of Maps, that will be much easier to manage with the new architecture.

"For example, a road network is something that takes a much longer time to change currently," he said. "In the new map infrastructure, we can change that relatively quickly. If a new road opens up, immediately we can see that and make that change very, very quickly around it. It's much, much more rapid to do changes in the new map environment."

That has meant new hardware, specifically the growing fleet of sensor-toting vehicles with discreet Apple Maps branding that have been spotted trawling the streets over the past couple of years. They're Apple's roving cartographers, replicating the data collection that Google, HERE, and others already do, but with a Cupertino twist.

The Apple Maps vans

Each of the vans carries a whole host of cameras and sensors on its roof, all feeding into a Mac Pro in the trunk. There are four LIDAR laser-scanning arrays, one at each corner, and twice as many high-resolution cameras. A higher-accuracy GPS receiver and a physical distance-measuring sensor are also included. The result combines a 3D point cloud from the LIDAR with an image database from the cameras, from which Apple can build a real-time picture of the road network.

The onboard Mac Pro isn't just responsible for collating that data; it also encrypts it before it's saved to a removable array of SSDs. That array is shipped to a data center, where personally identifiable information, such as license plates and faces, is obscured. From that point on, Apple insists, nobody sees the raw data anymore.

That's important for the core information Apple itself is gathering, but arguably even more so given that it's looking to iPhone users to help keep that data up to date. Like HERE before it, Apple is relying on so-called probe data, with each mobile user supplying anonymized information as they go about their day, to highlight any mismatches between the map and reality.

Crowdsourcing corrections

It's nothing more than direction and speed, and there's no way to link it to an individual user's movements, but it's still potent stuff. If multiple people are walking along a route that isn't on the existing maps, Apple could infer that there's a new pedestrian path there and add it. If you're driving, Apple Maps could use probe data to estimate traffic levels in real time, or even flag a whole new junction that's been added since its last full scan. Apple says this will add only a negligible amount of power consumption, since it only happens when Maps is already active, and it uses only a subset of data from your journey, not the whole route.

This probe data will be combined with the work of human editors, who'll make sure street signs have been correctly identified and give buildings a better 3D profile, so that figuring out your location visually will be easier. They'll also be responsible for making sure specifics, like building entry points and other details that often affect the very last portion of your trip, are as accurate as possible in the map.
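To make the probe-data idea a little more concrete, here's a toy Python sketch of how anonymized speed readings might flag a possible new footpath. It's our own illustration, not Apple's method: the `ProbeSample` type, the grid size, the walking-speed cutoff, and the three-sample threshold are all hypothetical assumptions.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical grid resolution in degrees (roughly 50 m of latitude); not Apple's value.
CELL_SIZE = 0.0005


@dataclass(frozen=True)
class ProbeSample:
    """One anonymized probe reading: a position plus heading and speed, with no user ID."""
    lat: float
    lon: float
    heading: float  # degrees; carried along but ignored by this toy check
    speed: float    # metres per second


def cell_of(lat: float, lon: float) -> tuple[int, int]:
    """Snap a coordinate onto a coarse grid cell index."""
    return (int(lat // CELL_SIZE), int(lon // CELL_SIZE))


def flag_unmapped_cells(
    samples: list[ProbeSample],
    mapped_cells: set[tuple[int, int]],
    min_samples: int = 3,             # hypothetical threshold
    max_walking_speed: float = 2.5,   # hypothetical "on foot" cutoff, in m/s
) -> set[tuple[int, int]]:
    """Return grid cells with repeated walking-speed probes but no mapped path."""
    counts = Counter(
        cell_of(s.lat, s.lon) for s in samples if s.speed <= max_walking_speed
    )
    return {
        cell
        for cell, n in counts.items()
        if n >= min_samples and cell not in mapped_cells
    }


# Three walkers crossing the same unmapped spot, plus one car elsewhere.
samples = [ProbeSample(37.7749, -122.4194, 90.0, 1.4) for _ in range(3)]
samples.append(ProbeSample(37.7800, -122.4100, 0.0, 15.0))
print(flag_unmapped_cells(samples, mapped_cells=set()))
```

A real system would presumably match probes against actual road and path geometry and wait for far more evidence before touching the map; the coarse grid here simply keeps the idea readable.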

Next-gen navigation potential

As TechCrunch's Matthew Panzarino points out, though Apple declines to comment, that sort of hyper-localized data, along with accurately identifying and replicating road signs (down to matching their fonts), could be particularly useful if you're making, say, a set of smart glasses with an augmented reality navigation feature. According to previous rumors, Apple is already busy working on such technology, though we probably shouldn't hold our breath for an imminent launch.

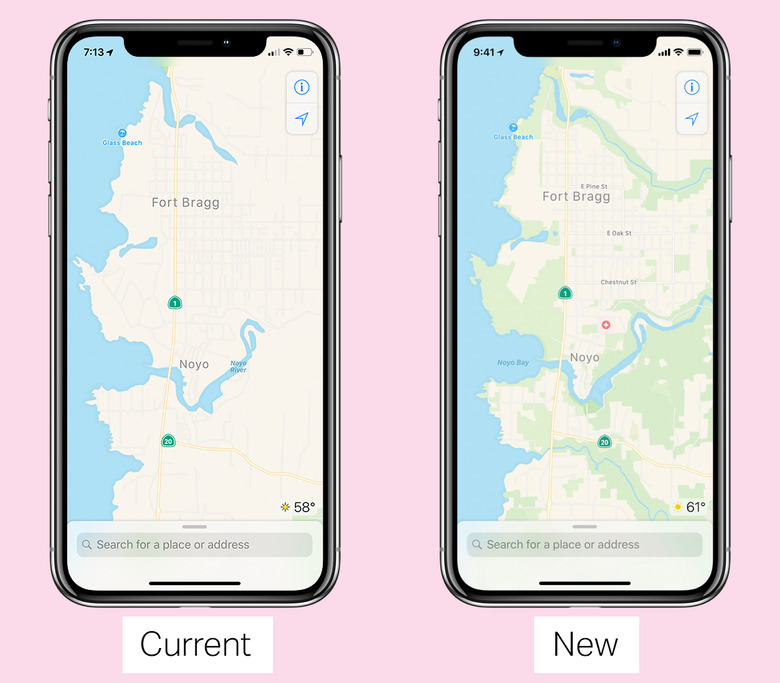

That's not the case for this new version of Apple Maps. A preview will begin next week, though only for California's Bay Area. It'll be integrated into the existing app and its data, with only the sudden uptick in detail showing where old and new meet, and coverage will continue to expand over the next year or so. There's no word on when, exactly, Apple expects it to be complete (indeed, a project like this is never actually finished), but it could mean serious competition for Google Maps relatively soon.