AMD hUMA Wants To Speed Your APU Memory Use, No Joke

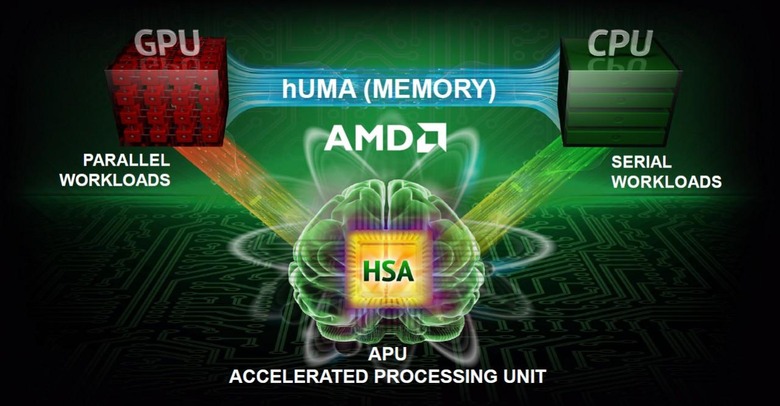

Heterogeneous Uniform Memory Access may sound like the orderly queue you make outside the RAM store, but for AMD, hUMA is an essential part of squeezing the best from its upcoming Kaveri APUs. Detailed for the first time today, hUMA builds on AMD's existing Heterogeneous System Architecture (HSA), the company's approach to integrating CPUs and GPUs into single, multipurpose chips. It lets both the processor cores and the graphics side access the same memory at the same time.

That's important because, currently, the CPU and GPU on an APU have to wait for time-consuming memory block management to take place before both can work on the same data. If the GPU wants to see the data the CPU is working on, that data has to be copied so that it exists in two places.

hUMA, however, would do away with that copying, since the memory would be visible to both the CPU and GPU simultaneously. AMD calls this bi-directional coherent memory: a change made by either side is visible to the other, so both halves of the APU spend less time tracking data changes. It should also make memory management more efficient, since the CPU and GPU will have a better view of, and more control over, how much free memory there is and what each can use at any one time.
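To make the difference concrete, here's a toy Python model contrasting the two approaches. It's a conceptual illustration only, not AMD's implementation or any real GPU or driver API. Plain Python lists stand in for memory, and the class names `CopyModel` and `SharedModel` are hypothetical. The "copy" model duplicates data to a separate GPU buffer and back again. The "shared" model gives the CPU and GPU the same buffer, so nothing is copied and the CPU sees the GPU's changes immediately.

```python
# Conceptual model only: Python lists stand in for memory. No real GPU,
# driver, or AMD API is involved; all class names are hypothetical.

class CopyModel:
    """Pre-hUMA style: the GPU works on its own duplicate of the data."""

    def __init__(self, data):
        self.cpu_buffer = list(data)
        self.gpu_buffer = []
        self.elements_copied = 0  # how much data had to be duplicated

    def to_gpu(self):
        # Copy the CPU's data into the GPU's separate buffer.
        self.gpu_buffer = list(self.cpu_buffer)
        self.elements_copied += len(self.cpu_buffer)

    def to_cpu(self):
        # Copy the GPU's results back so the CPU can see them.
        self.cpu_buffer = list(self.gpu_buffer)
        self.elements_copied += len(self.gpu_buffer)

    def gpu_double(self):
        # "GPU work": double every element, with copies on both sides.
        self.to_gpu()
        self.gpu_buffer = [x * 2 for x in self.gpu_buffer]
        self.to_cpu()


class SharedModel:
    """hUMA-style idea: CPU and GPU reference one buffer, nothing is copied."""

    def __init__(self, data):
        self.buffer = list(data)
        self.cpu_view = self.buffer  # same object as gpu_view
        self.gpu_view = self.buffer
        self.elements_copied = 0

    def gpu_double(self):
        # The GPU writes in place; the CPU view reflects it immediately.
        for i, x in enumerate(self.gpu_view):
            self.gpu_view[i] = x * 2


copy_model = CopyModel(range(1000))
copy_model.gpu_double()

shared_model = SharedModel(range(1000))
shared_model.gpu_double()

print(copy_model.elements_copied, shared_model.elements_copied)  # 2000 0
```

Both models end up with the same doubled values on the CPU side. The copy model moves 2,000 elements to get there, while the shared model moves none, which is the overhead hUMA is meant to eliminate.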

The upshot should be faster processing, as well as software that's easier to write, since developers won't need to manage memory blocks themselves when adding GPU acceleration. Instead, that will all be handled dynamically by hUMA.

The first evidence of hUMA in the wild will be AMD's upcoming APU refresh, codenamed Kaveri. The chip was revealed back at CES and full details are still unknown, but Kaveri will be a 28nm part and is tipped to include up to four of AMD's Steamroller cores, Radeon HD 7000 graphics, and a 128-bit memory controller supporting both DDR3 and GDDR5 memory.

AMD expects to have its Kaveri APUs on the market in the second half of 2013.